This page is for printing out all of the briefing papers. The briefing papers are given in numerical order. Note that some of the internal links may not work.

Compliance with HTML standards is needed for a number of reasons:

The World Wide Web Consortium (W3C) recommends use of the XHTML 1.0 (or higher) standard. This has the advantage of being an XML application (allowing use of XML tools) and can be rendered by most browsers. However, authoring tools in use may not yet produce XHTML, in which case HTML 4.0 may be used.

Cascading style sheets (CSS) should be used in conjunction with XHTML/HTML to describe the appearance of Web resources.

Web resources may be created in a number of ways. Often HTML authoring tools such as DreamWeaver, FrontPage, etc. are used, although experienced HTML authors may prefer to use a simple editing tool. Another approach is to make use of a Content Management System. An alternative approach is to convert proprietary file formats (e.g. MS Word or PowerPoint). In addition sometimes proprietary formats are not converted but are stored in their native format.

A number of approaches may be taken to monitoring compliance with HTML standards. For example you can make use of validation features provided by modern HTML authoring tools, use desktop compliance tools or Web-based compliance tools.

The different types of tools can be used in different ways. Tools which are integrated with a HTML authoring tool should be used by the page author. It is important that the author is trained to use such tools on a regular basis. It should be noted that it may be difficult to address systematic errors (e.g. all files missing the DOCTYPE declaration) with this approach.

A popular approach is to make use of SSIs (server-side includes) to retrieve common features (such as headers, footers, navigation bars, etc.). This can be useful for storing HTML elements (such as the DOCTYPE declaration) in a manageable form. However this may cause validation problems if the SSI is not processed.
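One way to avoid validating a file whose includes have not been processed is to expand the include directives before the file is passed to a checker. The following is a minimal sketch only: it assumes Apache-style `<!--#include virtual="..." -->` directives, and the `fragments` dictionary and the include path are hypothetical stand-ins for files read from disk.

```python
import re

# Matches directives such as <!--#include virtual="/inc/header.html" -->
INCLUDE_RE = re.compile(r'<!--#include\s+(?:virtual|file)="([^"]+)"\s*-->')


def expand_includes(html, fragments):
    """Replace each SSI include directive with the matching fragment text.

    `fragments` maps an include path to its content (a stand-in for reading
    the included files from disk). Directives with unknown paths are left
    untouched so that they remain visible in the output.
    """
    def substitute(match):
        path = match.group(1)
        return fragments.get(path, match.group(0))

    return INCLUDE_RE.sub(substitute, html)


# Hypothetical page which stores its DOCTYPE declaration in an include file.
page = (
    '<!--#include virtual="/inc/doctype.html" -->\n'
    "<html><head><title>Test</title></head><body></body></html>"
)
fragments = {
    "/inc/doctype.html": (
        '<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Strict//EN" '
        '"http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd">'
    )
}
expanded = expand_includes(page, fragments)
```

The sketch does not handle nested includes or other SSI directives; validating the page as served by the Web server avoids the problem altogether.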

Another approach is to make use of a Content Management System (CMS) or similar server-side technique, such as retrieving resources from a database. In this case it is essential that the template used by the CMS complies with standards.

It may be felt necessary to separate the compliance process from the page authoring. In such cases use of a dedicated HTML checker may be needed. Such tools are often used in batch, to validate multiple files. In many cases voluminous warnings and error messages may be provided. This information may provide indications of systematic errors which should be addressed in workflow processes.
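A batch check for one systematic error mentioned earlier (files missing the DOCTYPE declaration) might look like the sketch below. It is illustrative rather than a replacement for a real HTML checker: it only looks for the presence of a DOCTYPE, it assumes that pages are stored as `*.html` files, and the function names are hypothetical.

```python
import re
from pathlib import Path

DOCTYPE_RE = re.compile(r"<!DOCTYPE\s+html", re.IGNORECASE)


def has_doctype(text):
    """Return True if the document text contains an HTML DOCTYPE declaration."""
    return DOCTYPE_RE.search(text) is not None


def files_missing_doctype(root):
    """Return the sorted paths of *.html files under `root` lacking a DOCTYPE."""
    missing = []
    for path in sorted(Path(root).rglob("*.html")):
        text = path.read_text(encoding="utf-8", errors="replace")
        if not has_doctype(text):
            missing.append(path)
    return missing
```

If most files appear in the result, the cause is more likely to be a template or workflow problem than a set of individual authoring mistakes.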

An alternative approach is to use Web-based checking services. An advantage of this approach is that the service may be used in a number of ways: directly, by entering the URL of a resource to be validated, or through live access provided by a link from a validation icon, as used at <http://www.ukoln.ac.uk/qa-focus/> and shown in Figure 1. (This approach could be combined with use of cookies or other techniques so that the icon is only displayed to an administrator.)

Another approach is to configure your Web server so that users can access the validation service by appending an option to the URL. For further information on this technique see the QA Focus briefing document A URI Interface To Web Testing Tools at <http://www.ukoln.ac.uk/qa-focus/documents/briefings/briefing-59/>. This technique can be deployed with a simple option in your Web server's configuration file.
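The mapping that such a server option performs can be sketched as follows. This is an illustration of the idea only: in practice the redirect would normally be defined in the Web server's configuration, and the `,validate` suffix, the `SERVICES` table and the validator address used here are assumptions made for the example.

```python
from urllib.parse import quote

# Assumed mapping from an appended URL option to a checking service.
SERVICES = {
    ",validate": "https://validator.w3.org/check?uri=",
}


def redirect_target(requested_url):
    """Return the checking-service URL for a request, or None if no option is appended."""
    for suffix, service in SERVICES.items():
        if requested_url.endswith(suffix):
            page_url = requested_url[: -len(suffix)]
            # Percent-encode the page URL so it can be passed as a query value.
            return service + quote(page_url, safe="")
    return None
```

A request for `http://www.example.org/index.html,validate` would then be redirected to the checking service with the address of `index.html` as its argument.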

It is desirable to maximise the accessibility of Web sites in order to ensure that Web resources can be accessed by people who may suffer from a range of disabilities and who may need to use specialist browsers (such as speaking browsers) or configure their browser to enhance the usability of Web sites (e.g. change font sizes, colours, etc.).

Web sites which are designed to maximise accessibility should also be more usable generally (e.g. for use with PDAs) and are likely to be more easily processed by automated tools.

Although the development of accessible Web sites will be helped by use of appropriate templates and can be managed by Content Management Systems (CMSs), there will still be a need to test the accessibility of Web sites.

Full testing of accessibility will require manual testing, ideally making use of users who have disabilities. The testing should also address the usability of Web sites as well as their accessibility.

Manual testing can however be complemented with use of automated accessibility checking tools. This document covers the use of automated accessibility checking tools.

The W3C WAI (Web Accessibility Initiative) have developed guidelines on the accessibility of Web resources. Many institutions are making use of the WAI guidelines and will seek to achieve compliance with the guidelines to A, AA or AAA standards. Many testing tools will measure the compliance of resources with these guidelines.

The best-known accessibility checking tool was Bobby which was renamed as WebXact, a Web-based tool for reporting on the accessibility of a Web page and its compliance with W3C's WAI guidelines. However this tool is no longer available.

HiSoft's Cynthia Says provides an alternative accessibility and Web site checking facility - see <http://www.contentquality.com/>.

Note that it can be useful to make use of multiple checking tools. W3C WAI provides a list of accessibility testing tools at <http://www.w3.org/WAI/ER/tools/>.

When you use testing tools, warnings and errors will be provided about the accessibility of your Web site. A summary of the most common messages is given below.

As mentioned previously, automated testing tools cannot by themselves confirm that a resource is accessible - manual testing will be required to complement an automated approach. However automated tools can be used to provide an overall picture, to identify areas in which manual testing may be required and to identify problems in templates or in the workflow process for producing HTML resources and areas in which training and education may be needed.

Note that automated tools may sometimes give inaccurate or misleading results. In particular:

Although it is desirable to make use of open standards such as HTML when providing access to resources on Web sites there may be occasions when it is felt necessary to use proprietary formats. For example:

If it is necessary to provide access to a proprietary file format you should not cite the URL of the proprietary file format directly. Instead you should give the URL of a native Web resource, typically a HTML page. The HTML page can provide additional information about the proprietary format, such as the format type, version details, file size, etc. If the resource is made available in an open format at a later date, the HTML page can be updated to provide access to the open format - this would not be possible if the URL of the proprietary file was used.

An example of this approach is illustrated. In this case access to MS PowerPoint slides is available from a HTML page. The link to the file contains information on the PowerPoint version details.

Various tools may be available to convert resources from a proprietary format to HTML. Many authoring tools nowadays will enable resources to be exported to HTML format. However the HTML may not comply with HTML standards or use CSS and it may not be possible to control the look-and-feel of the generated resource.

Another approach is to use a specialist conversion tool which may provide greater control over the appearance of the output, ensure compliance with HTML standards, make use of CSS, etc.

If you use a tool to convert a resource to HTML it is advisable to store the generated resource in its own directory in order to be able to manage the master resource and its surrogate separately.

You should also note that some conversion tools can be used dynamically, allowing a proprietary format to be converted to HTML on-the-fly.

MS Word files can be saved as HTML from within MS Word itself. However the HTML that is created is of poor quality, often including proprietary or deprecated HTML elements and using CSS in a form which is difficult to reuse.

MS PowerPoint files can be saved as HTML from within MS PowerPoint itself. However the Save As option provides little control over the output. The recommended approach is to use the Save As Web Page option and then to choose the Publish button. You should then ensure that the HTML can be read by all browsers (and not just IE 4.0 or later). You should also ensure that the file has a meaningful title and the output is stored in its own directory.

In some circumstances it may be possible to provide a link to an online conversion service. Use of Adobe's online conversion service for converting files from PDF is illustrated.

It should be noted that this approach may result in a loss of quality from the original resource and is dependent on the availability of the remote service. However in certain circumstances it may be useful.

When the funding for a project finishes it is normally expected that the project's Web site will continue to be available in order to ensure that information about the project, the project's findings, reports, deliverables, etc. are still available.

This document provides advice on "mothballing" a project Web site.

The entry point for the project Web site should make it clear that the project has finished and that there is no guarantee that the Web site will be maintained.

You should seek to ensure that dates on the Web site include the year - avoid content which says, for example, "The next project meeting will be held on 22 May".
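A simple scan can help to find dates of this kind before a site is mothballed. The sketch below is an assumption about how such a check might be written: it only recognises dates written as a day followed by an English month name, and the function name is hypothetical.

```python
import re

MONTHS = (
    "January|February|March|April|May|June|July|"
    "August|September|October|November|December"
)

# A day and month name which are NOT followed by a four-digit year.
DATE_WITHOUT_YEAR = re.compile(
    r"\b(\d{1,2})\s+(" + MONTHS + r")\b(?!,?\s+\d{4})"
)


def dates_missing_year(text):
    """Return 'day month' strings found in `text` with no year after them."""
    return [f"{day} {month}" for day, month in DATE_WITHOUT_YEAR.findall(text)]
```

Matches are only candidates for review, as some dates may be given a year elsewhere in the same sentence.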

You may also find it useful to make use of cascading style sheets (CSS) which could be used to, say, provide a watermark on all resources which indicate that the Web site is no longer being maintained.

Although software is not subject to deterioration due to aging, overuse, etc., software products can cease to work over time. Operating system upgrades, upgrades to software libraries, conflicts with newly installed software, etc. can all result in software products used on a project Web site ceasing to work.

It is advisable to adopt a defensive approach to software used on a Web site.

There are a number of areas to be aware of:

We have outlined a number of areas in which a project Web site may degrade in quality once the project Web site has been "mothballed".

In order to minimise the likelihood of this happening and to ensure that problems can be addressed with the minimum of effort it can be useful to adopt a systematic set of procedures when mothballing a Web site.

It can be helpful to run a link checker across your Web site. You should seek to ensure that all internal links (links to resources on your own Web site) work correctly. Ideally links to external resources will also work, but it is recognised that this may be difficult to achieve. It may be useful to provide a link to a report of the link check on your Web site.
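Separating internal links from external ones is the first step in reporting on them differently. A minimal sketch is given below; it assumes that a link counts as internal when it resolves to the same host as the page it appears on, and the function name and example addresses are illustrative.

```python
from urllib.parse import urljoin, urlparse


def classify_links(page_url, hrefs):
    """Split link targets into internal and external absolute URLs.

    A link is treated as internal when it resolves to the same host as
    `page_url`; everything else is treated as external.
    """
    site_host = urlparse(page_url).netloc
    internal, external = [], []
    for href in hrefs:
        absolute = urljoin(page_url, href)
        if urlparse(absolute).netloc == site_host:
            internal.append(absolute)
        else:
            external.append(absolute)
    return internal, external
```

Broken links in the internal list would be expected to be fixed before mothballing, while those in the external list might only be recorded in the published report.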

It would be helpful to provide documentation on the technical architecture of your Web site, which describes the server software used (including use of any unusual features), use of server-side scripting technologies, content management systems, etc.

It may also be useful to provide a mirror of your Web site by using a mirroring package or off-line browser. This will ensure that there is a static version of your Web site available which is not dependent on server-side technologies.

You should give some thought to contact details provided on the Web site. You will probably wish to include details of the project staff, partners, etc. However you may wish to give an indication if staff have left the organisation.

Ideally you will provide contact details which are not tied down to a particular person. This may be needed if, for example, your project Web site has been hacked and the CERT security team need to make contact.

Ideally you will ensure that your plans for mothballing your Web site are developed when you are preparing to launch your Web site!

With the growing popularity in use of mobile devices and pervasive networking on the horizon we can expect to see greater use of PDAs (Personal Digital Assistants) for accessing Web resources.

This document describes a method for accessing a Web site on a PDA. In addition this document highlights issues which may make access on a PDA more difficult.

AvantGo is a well-known Web based service which provides access to Web resources on a PDA such as a Palm or Pocket PC.

The AvantGo service is freely available from <http://www.avantgo.com/>.

Once you have registered on the service you can provide access to a number of dedicated AvantGo channels. In addition you can use an AvantGo wizard to provide access to any publicly available Web resources on your PDA.

An example of two Web sites showing the interface on a Palm is illustrated.

If you have a PDA you may find it useful to use it to provide access to your Web site, as this will enable you to access resources when you are away from your desktop PC. This may also be useful for your project partners. In addition you may wish to encourage users of your Web site to access it in this way.

AvantGo uses robot software to access your Web site and process it in a format suitable for viewing on a PDA, which typically has more limited functionality, memory, and viewing area than a desktop PC. The robot software may not process a number of features which may be regarded as standard on desktop browsers, such as frames, JavaScript, cookies, plugins, etc.
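A rough indication of whether a page relies on such features can be obtained by scanning its markup. The sketch below is not based on how the AvantGo robot works; the list of tags and the feature labels are assumptions chosen to match the features named above, and use of cookies cannot be detected from the markup alone.

```python
from html.parser import HTMLParser

# Tags associated with features which the robot software may not process.
RISKY_TAGS = {
    "frameset": "frames",
    "frame": "frames",
    "iframe": "frames",
    "script": "JavaScript",
    "object": "plugins",
    "embed": "plugins",
}


class FeatureScanner(HTMLParser):
    """Collect the names of potentially unsupported features used in a page."""

    def __init__(self):
        super().__init__()
        self.features = set()

    def handle_starttag(self, tag, attrs):
        if tag in RISKY_TAGS:
            self.features.add(RISKY_TAGS[tag])


def risky_features(html):
    """Return a sorted list of feature names found in the HTML text."""
    scanner = FeatureScanner()
    scanner.feed(html)
    return sorted(scanner.features)
```

A page which reports no features may still be difficult to use on a PDA, so the result should be treated as a prompt for testing on a real device.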

The ability to access a simplified version of your Web site can provide a useful mechanism for evaluating the ease with which your Web site can be repurposed and for testing the user interface under non-standard environments.

You should be aware of the following potential problem areas:

As well as providing enhanced access to your Web site use of tools such as AvantGo can assist in testing access to your Web site. If your Web site makes use of open standards and follows best practices it is more likely that it will be usable on a PDA and by other specialist devices.

You should note, however, that use of open standards and best practices will not guarantee that a Web site will be accessible on a PDA.

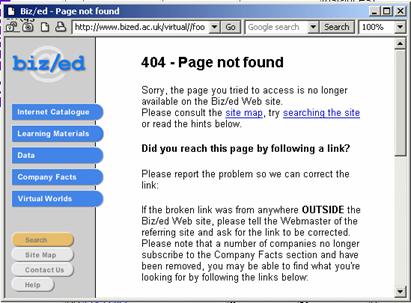

A Web site's 404 error page can be one of the most widely accessed pages on a Web site. The 404 error page can also act as an important navigational tool, helping users to quickly find the resource they were looking for. It is therefore important that 404 error pages provide adequate navigational facilities. In addition, since the page is likely to be accessed by many users, it is desirable that the page has an attractive design which reflects the Web site's look-and-feel.

Web servers will be configured with a default 404 error page. This default is typically very basic.

In the example shown the 404 page provides no branding, help information, navigational bars, etc.

Figure 1: A Basic 404 Error Message

An example of a richer 404 error page is illustrated. In this example the 404 page is branded with the Web site's colour scheme, contains the Web site's standard navigational facility and provides help information.

Figure 2: A Richer 404 Error Message
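The content of a richer 404 page can be generated from the site's standard navigation. The following sketch shows one possible shape for such a page; the site name, the help wording and the function name are placeholders, and the way in which the Web server is told to use the page is server-specific and is not shown.

```python
from html import escape


def build_404_page(requested_path, nav_links, site_name="Example Project"):
    """Return the HTML for a 404 page with branding, help text and navigation.

    `nav_links` is a list of (label, url) pairs. The site name and the
    wording of the help text are placeholders.
    """
    items = "\n".join(
        f'    <li><a href="{escape(url, quote=True)}">{escape(label)}</a></li>'
        for label, url in nav_links
    )
    return (
        '<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01//EN" '
        '"http://www.w3.org/TR/html4/strict.dtd">\n'
        "<html>\n"
        f"<head><title>Page not found - {escape(site_name)}</title></head>\n"
        "<body>\n"
        f"  <h1>{escape(site_name)}: page not found</h1>\n"
        f"  <p>The page <code>{escape(requested_path)}</code> could not be found. "
        "It may have been moved or deleted.</p>\n"
        f"  <ul>\n{items}\n  </ul>\n"
        "</body>\n</html>\n"
    )
```

The requested path is escaped before being echoed back, since it is supplied by the user and should not be inserted into the page as markup.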

It is possible to define a number of types of 404 error pages:

An article on 404 error pages, based on a survey of 404 pages in UK Universities is available at <http://www.ariadne.ac.uk/issue20/404/>. An update is available at <http://www.ariadne.ac.uk/issue32/web-watch/>.

There are several reasons why it is important to ensure that links on Web sites work correctly:

However there are resource implications in maintaining link integrity.

A number of approaches can be taken to checking broken links.

Note that these approaches are not exclusive: Web site maintainers may choose to make use of several approaches.

There is a need to implement a policy on link checking. The policy could be that links will not be checked or fixed - this policy might be implemented for a project Web site once the funding has finished. For a small-scale project Web site the policy may be to check links when resources are added or updated or if broken links are brought to the project's attention, but not to check existing resources - this is likely to be an implicit policy for some projects.

For a Web site which has a high visibility or gives a high priority to the effectiveness of the Web site, a pro-active link checking policy will be needed. Such a policy is likely to document the frequency of link checking, and the procedures for fixing broken links. As an example of approaches taken to link checking by a JISC service, see the article about the SOSIG subject gateway [1].

Experienced Web developers will be familiar with desktop link-checking tools, and many lists of such tools are available [2] [3]. However desktop tools normally need to be used manually. An alternative approach is to use server-based link-checking software which sends email notification of broken links.

Externally-hosted link-checking tools may also be used. Tools such as LinkValet [4] can be used interactively or in batch. Such tools may provide limited checking for free, with a licence fee for more comprehensive checking.

Another approach is to use a browser interface to tools, possibly using a Bookmarklet [5] although UKOLN's server-based ,tools approach [6] is more manageable.

It is important to ensure that link checkers check for links other than <a href="..."> and <img src="...">. There is a need to check external JavaScript, CSS, etc. files (linked to by the <link> tag) and to ensure that checks are carried out on personalised interfaces to resources.
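The range of elements which a link checker needs to examine can be illustrated with a short extraction sketch. The table of tags and attributes below is an assumption and is not exhaustive (for example, links inside CSS files are not covered); the class and function names are hypothetical.

```python
from html.parser import HTMLParser

# The attribute which carries the link for each tag of interest.
LINK_ATTRIBUTES = {
    "a": "href",
    "img": "src",
    "link": "href",
    "script": "src",
    "frame": "src",
    "iframe": "src",
}


class LinkExtractor(HTMLParser):
    """Collect (tag, target) pairs for every linking element in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        wanted = LINK_ATTRIBUTES.get(tag)
        if wanted is None:
            return
        for name, value in attrs:
            if name == wanted and value:
                self.links.append((tag, value))


def extract_links(html):
    """Return the linking elements found in the HTML text, in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links
```

Each extracted target would then be resolved against the page address and requested in order to confirm that it exists.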

It should also be noted that erroneous link error reports may sometimes be produced (e.g. due to misconfigured Web servers).

Web sites which contain more than a handful of pages should provide a search facility. This is important for several reasons:

The two main approaches to the provision of search engines on a Web site are to host a search engine locally or to make use of an externally-hosted search engine.

The traditional approach is to install search engine software locally. The software may be open source (such as ht://Dig [1]) or licensed software (such as Inktomi [2]). It should be noted that the search engine software does not have to be installed on the same system as the Web server. This means that you are not constrained to using the same operating system environment for your search engine as your Web server.

Because the search engine software can be hosted separately from the main Web server it may be possible to make use of an existing search engine service within the organisation which can be extended to index a new Web site.

An alternative approach is to allow a third party to index your Web site. There are a number of companies which provide such services. Some of these services are free: they may be funded by advertising revenue. Such services include Google [3], Atomz [4] and FreeFind [5].

Using a locally-installed search engine gives you control over the software. You can control the resources to be indexed and those to be excluded, the indexing frequency, the user interface, etc. However such control may have a price: you may need to have technical expertise in order to install, configure and maintain the software.

Using an externally-hosted search engine can remove the need for technical expertise: installing an externally-hosted search engine typically requires simply completing a Web form and then adding some HTML code to your Web site. However this ease-of-use has its disadvantages: typically you will lose the control over the resources to be indexed, the indexing frequency, the user interfaces, etc. In addition there is the dependency on a third party, and the dangers of a loss of service if the organisation changes its usage conditions, goes out of business, etc.

Surveys of search facilities used on UK University Web sites have been carried out since 1998 [6]. These provide information on the search engine tools used and also help to spot trends.

Since the surveys began the most widely used tool has been ht://Dig - an open source product. In recent years the licensed product Inktomi has shown a growth in usage. Interestingly, use of home-grown software and specialist products has decreased - search engine software appears now to be a commodity product.

Another interesting trend appears to be the provision of two search facilities: a locally-hosted search engine and a remote one - e.g. see the University of Lancaster [7].

Producing an archive of high-quality images with a server full of associated delivery images is not an easy task. The workflow consists of many interwoven stages, each building on the foundations laid before. If, at any stage, image quality is compromised within the workflow, it has been totally lost and can never be redeemed.

It is therefore important that image quality is given paramount consideration at all stages of a project from initial project planning through to exit strategy.

Once the workflow is underway, quality can only be lost and the workflow must be designed to capture the required quality right from the start and then safeguard it.

Image QA within a digitisation project's workflow can be considered a 4-stage process.

Strategic QA is undertaken in the initial planning stages of the project, when the best methodology to create and support your images, now and into the future, will be established. This will include:

Process QA is establishing quality control methods within the image production workflow that support the highest quality of capture and image processing, including:

Sign-off QA is implementing an audited system to assure that all images and their associated metadata are created to the established quality standard. A QA audit history is made to record all actions undertaken on the image files.

On-going QA is implementing a system to safeguard the value and reliability of the images into the future. However good the initial QA, it will be necessary to have a system that can report, check and fix any faults found within the images and associated metadata after the project has finished. This system should include:

Much of the final quality of a delivered image will be decided, long before, in the initial 'Strategic' and 'Process' QA stages where the digitisation methodology is planned and equipment sourced. However, once the process and infrastructure are in place it will be the operator who needs to manually evaluate each image within the 'Sign-off' QA stage. This evaluation will have a largely subjective nature and can only be as good as the operator doing it. The project team is the first and last line of defence against any drop in quality. All operators must be encouraged to take pride in their work and be aware of their responsibility for its quality.

It is however impossible for any operator to work at 100% accuracy for 100% of the time and faults are always present within a productive workflow. What is more important is that the system is able to accurately find the faults before it moves away from the operator. This will enable the operator to work at full speed without having to worry that they have made a mistake that might not be noticed.

The image digitisation workflow diagram in this document shows one possible answer to this problem.

This document was written by TASI, the Technical Advisory Service For Images.

This document provides advice on how the HTML <link> element can be used to improve the navigation of Web sites.

The purpose of the HTML <link> element is to specify relationships with other documents. Although not widely used the <link> element provides a mechanism for improving the navigation of Web sites.

The <link> element should be included in the <head> of HTML documents. The syntax of the element is: <link rel="relation" href="url">. The key relationships which can improve navigation are listed below.

| Relation | Function |
|---|---|
| next | Refers to the next document in a linear sequence of documents. |
| prev | Refers to the previous document in a linear sequence of documents. |
| home | Refers to the home page or the top of some hierarchy. |
| first | Refers to the first document in a collection of documents. |
| contents | Refers to a document serving as a table of contents. |
| help | Refers to a document offering help. |
| glossary | Refers to a document providing a glossary of terms that pertain to the current document. |

Use of the <link> element enables navigation to be provided in a consistent manner as part of the browser navigation area rather than being located in an arbitrary location in the Web page. This has accessibility benefits. In addition browsers can potentially enhance performance by pre-fetching the next page in a sequence.

A reason why <link> is not widely used has been the lack of browser support. This has changed recently and support is now provided in the latest versions of the Opera and Netscape/Mozilla browsers and by specialist browsers (e.g. iCab and Lynx).

Since the <link> element degrades gracefully, its use will cause no problems for users of old browsers.

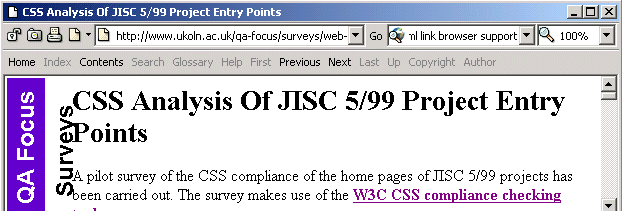

An illustration of how the <link> element is implemented in Opera is shown below.

Figure 1: Browser Support For The <link> Element

In Figure 1 a menu of navigational aids is available. The highlighted options (Home, Contents, Previous and Next) are based on the relationships which have been defined in the document. Users can use these navigational options to access the appropriate pages, even though there may be no corresponding links provided in the HTML document.

It is important that the link relationships are provided in a manageable way. It would not be advisable to create link relationships by manually embedding them in HTML pages if the information is liable to change.

It is advisable to spend time defining the key navigational locations, such as the Home page (is it the Web site entry point, or the top of a sub-area of the Web site?). Such relationships may be added to templates included in SSIs. Server-side scripts are a useful mechanism for exploiting other relationships, such as Next and Previous - for example in search results pages.
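A server-side script could generate the relationships for a page in a sequence along the following lines. This is a sketch under stated assumptions: the function name, its parameters and the example file names are hypothetical, and only the relation names come from the table of relationships given earlier.

```python
from html import escape


def link_elements(pages, index, home="/", contents=None):
    """Return <link> elements for the page at `index` in the ordered list `pages`."""
    relations = [("home", home)]
    if contents:
        relations.append(("contents", contents))
    if pages:
        relations.append(("first", pages[0]))
    if index > 0:
        relations.append(("prev", pages[index - 1]))
    if index < len(pages) - 1:
        relations.append(("next", pages[index + 1]))
    return "\n".join(
        f'<link rel="{rel}" href="{escape(url, quote=True)}">'
        for rel, url in relations
    )


# Example: the second of three pages in a sequence.
pages = ["intro.html", "methods.html", "results.html"]
print(link_elements(pages, 1))
```

Because the elements are generated from the ordered list of pages, adding or reordering pages does not require any page to be edited by hand.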

Additional information is provided at <http://www.ukoln.ac.uk/qa-focus/documents/briefings/briefing-10/>.

The use of open standards can help provide interoperability and maximise access to resources and services. However this raises two questions: "Why open standards?" and "What are open standards?".

Open standards can provide several benefits:

The term "open standards" is ambiguous. As described in Wikipedia "There is no single definition and interpretations vary with usage" [1]. The EU's definition is [2]:

Some examples of recognised open standards bodies are given in Table 1.

| Standards Body | Comments |
|---|---|
| W3C | World Wide Web Consortium (W3C). Responsible for the development of Web standards (recommendations). See <http://www.w3.org/TR/>. Relevant standards include HTML, XML, CSS, SMIL, SVG, etc. |
| IETF | Internet Engineering Task Force (IETF). Responsible for the development of Internet standards (known as IETF RFCs). See list of IETF RFCs at <http://www.ietf.org/rfc.html>. Relevant standards include HTTP, MIME, etc. |
| ISO | International Organization for Standardization (ISO). See <http://www.iso.org/iso/en/stdsdevelopment/whowhenhow/how.html>. Relevant standards areas include character sets, networking, etc. |
| NISO | National Information Standards Organization (NISO). See <http://www.niso.org/>. Relevant standards include Z39.50. |
| IEEE | Institute of Electrical and Electronics Engineers (IEEE). See <http://www.ieee.org/>. |
| ECMA | ECMA International. Association responsible for standardisation of Information and Communication Technology Systems (such as JavaScript). See <http://www.ecma-international.org/>. |

The term proprietary refers to formats which are owned by an organisation, group, etc. The term industry standard is often used to refer to a widely used proprietary standard. For example, the proprietary Microsoft Excel format is sometimes referred to as an industry standard for spreadsheets. To make matters even more confusing, the prefix is sometimes omitted and MS Excel can be referred to as a standard.

To further confuse matters, companies which own proprietary formats may choose to make the specification freely available. Alternatively third parties may reverse engineer the specification and publish the specification. In addition tools which can view or create proprietary formats may be available on multiple platforms or as open source.

In all these cases, although there may appear to be no obvious barriers to use of the proprietary format, such formats should not be classed as open standards as they have not been approved by a neutral standards body. The organisation owning the format may choose to change the format or the usage conditions at any time. File formats in this category include Microsoft Office formats, Macromedia Flash and Java.

Many Web developers and administrators are conscious of the need to ensure that their Web sites reach as high a level of accessibility as possible. But how do you actually find out if a site has accessibility problems? Certainly, you cannot assume that if no complaints have been received through the site feedback facility (assuming you have one), there are no problems. Many people affected by accessibility problems will just give up and go somewhere else.

So you must be proactive in rooting out any problems as soon as possible. Fortunately there are a number of handy ways to help you get an idea of the level of accessibility of the site which do not require an in-depth understanding of Web design or accessibility issues. It may be impractical to test every page, but try to make sure you check the Home page plus as many high traffic pages as possible.

If you have a disability, you have no doubt already discovered whether your site has accessibility problems which affect you. If you know someone with a disability which might prevent them accessing information in the site, then ask them to browse the site, and tell you of any problems. Particularly affected groups include visually impaired people (blind, colour blind, short or long sighted), dyslexic people and people with motor disabilities (who may not be able to use a mouse). If you are in Higher Education your local Access Centre [1] may be able to help.

Get hold of a text browser such as Lynx [2] and use it to browse your site. Problems you might uncover include those caused by images with no, or misleading, alternative text, confusing navigation systems, reliance on scripting or poor use of frames.
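One of these problems, images with no alternative text, can also be detected automatically. The sketch below is illustrative only and the class and function names are hypothetical: it reports images which have no `alt` attribute at all, and it cannot tell whether alternative text which is present is misleading.

```python
from html.parser import HTMLParser


class AltTextChecker(HTMLParser):
    """Record the src of every <img> which has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if "alt" not in attributes:
            self.missing_alt.append(attributes.get("src", "(no src)"))


def images_missing_alt(html):
    """Return the src values of images in the HTML text which lack an alt attribute."""
    checker = AltTextChecker()
    checker.feed(html)
    return checker.missing_alt
```

An empty `alt=""` is deliberately not reported, as it is the accepted way of marking an image as purely decorative.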

You can get a free evaluation version of IBM's Homepage Reader [3] or pwWebSpeak [4], speech browsers used by many visually impaired users of the Web. The browsers "speak" the page to you, so shut your eyes and try to comprehend what you are hearing.

Alternatively, try asking a colleague to read you the Web page out loud. Without seeing the page, can you understand what you're hearing?

As suggested by the World Wide Web Consortium (W3C) Web Accessibility Initiative (WAI) [5], you should test your site under various conditions to see if there are any problems including (a) graphics not loaded (b) frames, scripts and style sheets turned off and (c) browsing without using a mouse. Also, try using bookmarklets or favelets to test your Web site under different conditions: further information on accessibility bookmarklets can be found at [6].

There are a number of Web-based tools which can provide valuable information on potential accessibility problems such as Rational Policy Tester Accessibility [7] and The Wave tools [8]. You should also check whether the underlying HTML of your site validates to accepted standards using the World Wide Web Consortium's MarkUp Validation Service [9], as non-standard HTML can also cause accessibility problems.

Details of any problems found should be noted: the effect of the problem, which page was affected, plus why you think the problem was caused. You are unlikely to catch all accessibility problems in the site, but the tests described here will give you an indication of whether the site requires immediate attention to raise accessibility. Remember that improving accessibility for specific groups, such as visually impaired people, will often have usability benefits for all users.

Since it is unlikely you will catch all accessibility problems and the learning curve is steep, it may be advisable to commission an expert accessibility audit. In this way, you can receive a comprehensive audit of the subject site, complete with detailed prioritised recommendations for upgrading the level of accessibility of the site. Groups which provide such audits include the Digital Media Access Group, based at the University of Dundee, and the RNIB, who also audit Web sites for access to the blind.

Additional information is provided at

<http://www.ukoln.ac.uk/qa-focus/documents/briefings/briefing-12/>.

This document was written by David Sloan, DMAG, University of Dundee and originally published by the JISC TechDis service. We are grateful for permission to republish this document.

This document gives high-level advice for people who develop software for use either internally within a project or for use externally as a project deliverable.

Each computer programming language has its own coding conventions. However there are a number of general points that you can follow to ensure that your code is well organised and can be easily understood by others. These guidelines are not in any way mandatory but attempt to formalise code so that reading, reusing and maintaining code is easier. Most coding standards are arbitrary but adopting some level of consistency will help create better software.

The key point to remember is that good QA practice involves deciding on and recording a number of factors with your programming team before the onset of your project. Having such a record will allow you to be consistent.

In order for programmers to use your software it is important that you include clear documentation. This documentation will take the form of internal and external documentation.

Naming conventions for files, procedures, variables, etc. should be sensible and meaningful and agreed on before the project starts. Use of capitalisation may vary in different programming languages but it is sensible to avoid names that differ only in case or look very similar. Also avoid names that conflict with standard library names.

There are a number of key points to remember when writing your code:

Standards are "documented agreements containing technical specifications or other precise criteria to be used consistently as rules, guidelines, or definitions of characteristics, to ensure that materials, products, processes and services are fit for their purpose" (ISO 1997). The aim of international standards is to encapsulate most appropriate current practice. The International Organization for Standardization (ISO) [1] is the head of all national standardisation bodies. The most relevant ISO standard for software code development is ISO 9000-3: 1997 (QA for the development, supply, installation and maintenance of computer software). For other relevant standards also check the Institute of Electrical and Electronics Engineers [2] and the American National Standards Institute [3].

At the start of development it may help to ask your team the following questions:

Further information on Software QA at Sticky Minds

<http://www.stickyminds.com/>

A Web form is not dissimilar in appearance and use to a paper form. It appears on a Web page and contains special elements called controls along with normal text and mark up. These controls can take the form of checkboxes, text boxes, radio buttons, menus, etc. Users generally fill in the form by entering text and selecting menu items and then submit the form to an agent for processing. The agent could be an email or Web server.

Web forms have a variety of uses and are a way to get specific pieces of information from the user. Web sites with forms have their own specific set of problems and need rigorous testing to ensure that they work.

Some of the key things to consider when designing your form are:

Making fields compulsory can cause problems. Occasionally a user may feel that the question you have asked is inappropriate in context or they just can't provide the information. You need to decide if the information needed is absolutely necessary. Users will be more likely to give information if you explain why the data that you're asking for is needed. It is acceptable to ask the user if they meant to leave out a piece of information and then accept their answer.

Validation of forms can be carried out either client side or by processing on the server. Client side validation requires the use of a scripting language like JavaScript and can be problematic if the user's browser disallows scripting. However server side validation can be more complicated to set up.
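As an illustration of server side validation, the sketch below checks a submitted form in Python. The field names, the rules and the deliberately loose email pattern are hypothetical assumptions, not a recommended set. It returns helpful messages rather than rejecting the submission outright.

```python
import re

# Hypothetical rules for illustration; a real form would define its own.
REQUIRED_FIELDS = ("name", "email")
# Deliberately loose pattern: something@something.something
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_submission(fields):
    """Return a dict mapping each problem field to a helpful message."""
    errors = {}
    for name in REQUIRED_FIELDS:
        # Treat missing and whitespace-only values as not supplied.
        if not fields.get(name, "").strip():
            errors[name] = f"Please enter your {name}."
    email = fields.get("email", "").strip()
    if email and not EMAIL_PATTERN.match(email):
        errors["email"] = "The email address should look like name@example.org."
    return errors


print(validate_submission({"name": "A. User", "email": "a.user@example.org"}))
print(validate_submission({"name": " ", "email": "not-an-address"}))
```

Because the check runs on the server it works even when the user's browser disallows scripting; the same rules could also be applied client side to give earlier feedback.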

Sometimes the categories you offer in a drop down list do not match the answer that the user wants to give you. Sites from the USA often ask for states, which UK users cannot provide. If you want to use a drop down list make sure that your error messages are helpful rather than negative and allow users to select an 'other' option. If you have given a good selection of categories then you should rarely get users picking this.

Also consider if the categories you have provided are subjective enough. There may be issues over the terms used to refer to particular countries (for example if a land area is disputed). If you have to provide a long drop down list then it might be worth offering the common categories first. You could also try subdividing the categories into two drop down lists where the selection from the first dynamically creates the options in the second.

You may wish to have the user see a new page or sidebar when filling in a form. A new page may be easier to look at but can be annoying if it is perceived as a diversion or, even worse, an advertisement. It may also be prevented from opening by window blocking software available on newer browsers.

Users will often make typing or transcription errors when filling a form in. These errors can occur in any free text fields on the form.

Occasionally users will press the submit or send button either deliberately or inadvertently when only part-way through the form. Make sure that you have an appropriate error message for this and allow users to go back to the unfinished form. Users also often fill in part of a form and then click on the back button. They may be doing this to lose the data they have filled in, to check previous data or because they think they have finished. These activities suggest poor user interface design.

It is important to provide a helpful message on the submission screen explaining that the form has been submitted successfully. You could also replicate the details entered so that users can print them out as hard copy.

Once you have created your Web form you need to test it thoroughly before release. There are a number of different free software products available that will help you with your testing. Tools such as Roboform [1] are freely available and can be used to store test data and automatically fill in your forms with it.

When testing your form it is worth bearing in mind some problem areas:

Before creating a Web site for your project you should give some thought to the purpose of the Web site, including the aims of the Web site, the target audiences, the lifetime, resources available to develop and maintain the Web site and the technical architecture to be used. You should also think about what will happen to the Web site once project funding has finished.

Your project Web site could have a number of purposes. For example:

Your Web site could, of course, fulfill more than a single role. Alternatively you may choose to provide more than one Web site.

You should have an idea of the purposes of your project Web site before creating it for a number of reasons:

Once funding has been approved for your project Web site you may wish to provide information about the project, often prior to the official launch of the project and before project staff are in post. There is a potential danger that this information will be indexed by search engines or treated as the official project page. You should therefore ensure that the page is updated once an official project Web site is launched so that a link is provided to the official project page. You may also wish to consider stopping search engines from indexing such pages by use of the Standard For Robot Exclusion [1].
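The robot exclusion rules can be tested before they are deployed. The sketch below uses Python's standard `urllib.robotparser` module to check a sample `robots.txt`; the `/bar/pre-launch/` path is a hypothetical location for the early project page, and the host name is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking all robots to stay out of the
# pre-launch area while leaving the rest of the site indexable.
ROBOTS_TXT = """\
User-agent: *
Disallow: /bar/pre-launch/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_index(url):
    """Report whether a well-behaved robot is permitted to fetch the URL."""
    return parser.can_fetch("*", url)


print(may_index("http://www.foo.ac.uk/bar/"))
print(may_index("http://www.foo.ac.uk/bar/pre-launch/index.html"))
```

Note that the exclusion standard is advisory: it only affects robots that choose to honour it.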

Many projects will have an official project Web site. This is likely to provide information about the project such as details of funding, project timescales and deliverables, contact addresses, etc. The Web site may also provide access to project deliverables, or provide links to project deliverables if they are deployed elsewhere or are available from a repository. Usually you will be proactive in ensuring that the official project Web site is easily found. You may wish to submit the project Web site to search engines.

Projects with several partners may have a Web site which is used to support communications with project partners. The Web site may provide access to mailing lists, realtime communications, decision-making support, etc. The JISCMail service may be used or commercial equivalents such as YahooGroups. Alternatively this function may be provided by a Web site which also provides a repository for project resources.

Projects with several partners may have a Web site which is used to provide a repository for project resources. The Web site may contain project plans, specifications, minutes of meetings, reports to funders, financial information, etc. The Web site may be part of the main project Web site, may be a separate Web site (possibly hosted by one of the project partners) or may be provided by a third party. You will need to think about the mechanisms for allowing access to authorised users, especially if the Web site contains confidential or sensitive information.

Once you have agreed on the purpose(s) of your project Web site(s) [1] you will need to choose a domain name for your Web site and conventions for URIs. It is necessary to do this since this can affect (a) The memorability of the Web site and the ease with which it can be cited; (b) The ease with which resources can be indexed by search engines and (c) The ease with which resources can be managed and repurposed.

You may wish to make use of a separate domain name for your project Web site. If you wish to use a .ac.uk domain name you will need to ask UKERNA. You should first check the UKERNA rules [2]. A separate domain name has advantages (memorability, ease of indexing and repurposing, etc.) but this may not be appropriate, especially for short-term projects. Your organisation may prefer to use an existing Web site domain.

You should develop a policy for URIs for your Web site which may include:

It is strongly recommended that you make use of directories to group related resources. This is particularly important for the project Web site itself and for key areas of the Web site. The entry point for the Web site and key areas should be contained in the directory itself (e.g. use http://www.foo.ac.uk/bar/ to refer to project BAR and not http://www.foo.ac.uk/bar.html) as this allows the bar/ directory to be processed in its entirety, independently of other directories. Without this approach automated tools such as indexing software, and tools for auditing, mirroring, preservation, etc. would process other directories.

You should seek to ensure that URIs are persistent. If you reorganise your Web site you are likely to find that internal links are broken, that external links and bookmarks to your resources are broken and that citations to resources cease to work. You may wish to provide a policy on the persistency of URIs on your Web site.

Ideally the address of a resource (the URI) will be independent of the format of the resource. Using appropriate Web server configuration options it is possible to cite resources in a way which is independent of the format of the resource. This should allow ease of migration to new formats (e.g. HTML to XHTML) and, using a technology known as Transparent Content Negotiation [3], provide access to alternative formats (e.g. HTML or PDF) or even alternative language versions.

Ideally URIs will be independent of the technology used to provide access to the resource. If server-side scripting technologies are given in the file extension for URIs (e.g. use of .asp, .jsp, .php, .cfm, etc. extensions) changing the server-side scripting technology would probably require changing URIs. This may also make mirroring and repurposing of resources more difficult.

Ideally URIs will be memorable and allow resources to be easily indexed and repurposed. However use of Content Management Systems or databases to store resources often necessitates use of URIs which contain query strings containing input parameters to server-side applications. As described above this can cause problems.
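A URI policy of this kind can be checked automatically. The following sketch, using only the Python standard library, flags URIs which expose a server-side scripting extension or contain a query string. The function name, the wording of the warnings and the example URIs are hypothetical; the extension list is taken from the examples given above.

```python
import posixpath
from urllib.parse import urlparse

# Extensions named in the text as revealing the server-side technology.
TECHNOLOGY_EXTENSIONS = {".asp", ".jsp", ".php", ".cfm"}


def uri_policy_warnings(uri):
    """Return a list of policy warnings for a single URI (empty if none)."""
    parsed = urlparse(uri)
    warnings = []
    extension = posixpath.splitext(parsed.path)[1].lower()
    if extension in TECHNOLOGY_EXTENSIONS:
        warnings.append(f"exposes server-side technology ({extension})")
    if parsed.query:
        warnings.append("contains a query string")
    return warnings


for uri in ("http://www.foo.ac.uk/bar/",
            "http://www.foo.ac.uk/bar/report.php?id=3"):
    print(uri, uri_policy_warnings(uri))
```

A check like this could be run over a list of the site's URIs whenever new areas are added, so that departures from the policy are noticed early.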

You should consider the following approaches which address some of the concerns:

It is desirable to measure usage of your project Web site as this can give an indication of its effectiveness. Measuring how the Web site is being used can also help in identifying the usability of the Web site. Monitoring errors when users access your Web site can also help in identifying problem areas which need to be fixed.

However, as described in this document, usage statistics can be misleading. Care must be taken in interpreting statistics. As well as usage statistics there are a number of other types of performance indicators which can be measured.

It is also important that consistent approaches are taken in measuring performance indicators in order to ensure that valid comparisons can be made with other Web sites.

Web statistics are produced by the Web server software. The raw data will normally be produced by default - no additional configuration will be needed to produce the server's default set of usage data.

The server log file records information on requests (normally referred to as a "hit") for a resource on the web server. Information included in the server log file includes the name of the resource, the IP address (or domain name) of the user making the request, the name of the browser (more correctly, referred to as the "user agent") issuing the request, the size of the resource, date and time information and whether the request was successful or not (and an error code if it was not). In addition many servers will be configured to store additional information, such as the "referer" (sic) field, the URL of the page the user was viewing before clicking on a link to get to the resource.
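To make the contents of a log entry concrete, the sketch below parses one line with Python. It assumes the widely used Common/Combined Log Format; the text above does not name a format, and the actual layout depends on how your server is configured. The sample line is invented for illustration.

```python
import re

# Assumed layout: host ident user [time] "request" status size "referer" "user agent"
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<resource>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
    r'(?: "(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)")?'
)


def parse_log_line(line):
    """Return a dict describing one request, or None if the line does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    # A size of "-" means no content was returned.
    entry["size"] = 0 if entry["size"] == "-" else int(entry["size"])
    # Treat 4xx and 5xx status codes as unsuccessful requests.
    entry["successful"] = entry["status"] < 400
    return entry


# Invented sample entry for illustration.
sample = ('192.0.2.10 - - [10/Oct/2004:13:55:36 +0000] '
          '"GET /bar/index.html HTTP/1.0" 200 2326 '
          '"http://www.example.org/links.html" "Mozilla/4.08 [en] (Win98; I)"')

print(parse_log_line(sample))
```

Statistical analysis packages carry out this kind of parsing on every line of the log before aggregating the results.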

A wide range of Web statistical analysis packages are available to analyse Web server log files [1]. A widely used package in the UK HE sector is WebTrends [2].

An alternative approach to using Web statistical analysis packages is to make use of externally-hosted statistical analysis services [3]. This approach may be worth considering for projects which have limited access to server log files and to Web statistical analysis software.

In order to ensure that Web usage figures are consistent it is necessary to ensure that Web servers are configured in a consistent manner, that Web statistical analysis packages process the data consistently and that the project Web site is clearly defined.

You should ensure that (a) the Web server is configured so that appropriate information is recorded and (b) that changes to relevant server options or data processing are documented.

You should be aware that the Web usage data does not necessarily give a true indication of usage due to several factors:

Despite these reservations collecting and analysing usage data can provide valuable information.

Web usage statistics are not the only type of performance indicator which can be used. You may also wish to consider:

With all of the indicators periodic reporting will allow trends to be detected.

It may be useful to determine a policy on collection and analysis of performance indicators for your Web site prior to its launch.

The creation of CAD (Computer Aided Design) models is an often complex and confusing procedure. To reduce long-term manageability and interoperability problems, the designer should establish procedures that will monitor and guide system checks.

Interoperability problems are often caused by poorly understood or non-existent operating procedures for CAD. It is wise to establish and document your own CAD procedures, or adopt one of the national standards developed by the BSI (British Standards Institution) or NIBS (National Institute of Building Sciences). These may be used to train new members in the house-style of a project, provide essential information when sharing CAD data among different users, or provide background material when depositing the designs with a preservation repository. Particular areas to standardize include:

Procedures on constructing your own CAD standard can be found in the Construct IT guidelines (see references).

When creating CAD data models, a consistent approach to layer creation and naming conventions is useful. This will avoid confusion and increase the likelihood that the designer will be able to manipulate and search the data model at a later date.

The designer has two options to ensure interoperability:

When exporting designs between different CAD applications it is common for model relationships to disintegrate, causing entities to appear disconnected or disappear from the design altogether. A common cause is the use of different tolerance levels - a method of placing limits on gaps between geometric entities. The method of calculating tolerance often varies in different applications: some use absolute tolerance levels (e.g. 0.005mm), others work to a tolerance level relative to the model size (e.g. 10^-4 times the size), while others have different tolerances according to the units used. When considering moving a design between different applications it is useful to ensure the tolerance level can be set to the same value and to identify potential problem areas that may be corrupted when the data model is reopened.
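The effect of the two tolerance schemes can be seen in a small worked example. In this Python sketch the gap and model size are invented figures, and the two functions are a simplification of how a CAD application might decide whether adjacent entities are connected.

```python
def gap_closed_absolute(gap_mm, tolerance_mm=0.005):
    """Entities count as connected if the gap is within a fixed tolerance."""
    return gap_mm <= tolerance_mm


def gap_closed_relative(gap_mm, model_size_mm, relative_tolerance=1e-4):
    """Entities count as connected if the gap is small relative to the model."""
    return gap_mm <= relative_tolerance * model_size_mm


# Invented figures: a 0.02mm gap in a model 500mm across.
gap = 0.02
model_size = 500.0

# Relative tolerance allows gaps up to 1e-4 * 500mm = 0.05mm, so the gap is closed.
print(gap_closed_relative(gap, model_size))
# Absolute tolerance allows only 0.005mm, so the same gap is left open.
print(gap_closed_absolute(gap))
```

The same geometry is therefore valid in one application and broken in the other, which is why entities can appear disconnected after export.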

Interoperability problems are also caused by differences in how the system identifies invalid geometry definitions, such as the three-sided degenerate NURBS surfaces. Some systems allow the creation of such entities, others will reject them, whereas some systems know that they are not permissible and in an effort to prevent them from being created, generate twisted four sided surfaces.

It is desirable to minimise the time a Web site is unavailable. However it may be necessary to bring a Web server down in order to carry out essential maintenance. This document lists some areas to consider if you wish to minimise downtime.

The key to any form of critical path situation is planning. Planning involves being sure about what needs to be done and being clear about how it can be done. Quality Assurance is vital at this stage and final 'quality' checking is often the last act before a site goes live or a new release is launched.

Advance preparation is vital if you want to minimise your site's downtime and avoid confusion when installing new releases.

Digitisation is a production process. Large numbers of analogue items, such as documents, images, audio and video recordings, are captured and transformed into the digital masters that a project will subsequently work with. Understanding the many variables and tasks in this process - for example the method of capturing digital images in a collection (scanning or digital photography) and the conversion processes performed (resizing, decreasing bit depth, converting file formats, etc.) - is vital if the results are to remain consistent and reliable.

By documenting the workflow of digitisation, a life history can be built up for each digitised item. This information is an important way of recording decisions and tracking problems, and helps to maintain consistency and give users confidence in the quality of your work.

Workflow documentation should enable us to tell what the current status of an item is, and how it has reached that point. To do this the documentation needs to include important details about each stage in the digitisation process and its outcome.

By recording the answers to these five questions at each stage of the digitisation process, the progress of each item can be tracked, providing a detailed breakdown of its history. This is particularly useful for tracking errors and locating similar problems in other items.

The actual digitisation of an item is clearly the key point in the workflow and therefore formal capture metadata (metadata about the actual digitisation of the item) is particularly important.

Where possible, select an existing schema with a binding to XML:

To check your XML document for errors, QA techniques should be applied:

Quality assurance is essential to ensure GIS (Geographic Information System) data is accurate and can be manipulated easily. To ensure data is interoperable, the designer should audit the GIS records and check them for incompatibilities and errors.

Interoperability between GIS standards is encouraged, enabling complex data types to be compared in unexpected ways. However, the varying standards can limit the potential uses of the data. Designers are often limited by the formats available in different tools. When possible, it is advisable to use OpenGIS - an open, multi-subject standard constructed by an international standards consortium.

To integrate data from multiple databases, the data must be stored in a compatible field structure. Complementary fields in the source and target databases must be of a compatible type (Integer, Floating Point, Date, a Character field of an appropriate length etc.) to avoid the loss of data during the integration process. Checks should also be made that specific fields that are incompatible with similar products (e.g. dBase memo fields) are exported correctly. Specialist advice should be taken to ensure the memo information is not lost.
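A compatibility check of this kind can be sketched in a few lines of Python. The field definitions below are hypothetical, and requiring identical types is a simplifying assumption: in practice some conversions (e.g. Integer to Floating Point) may be acceptable.

```python
def incompatible_fields(source, target):
    """Compare field definitions given as {name: (type, length)} dicts.

    Returns a list of human-readable problems; an empty list means every
    source field has a compatible counterpart in the target.
    """
    problems = []
    for name, (src_type, src_length) in source.items():
        if name not in target:
            problems.append(f"{name}: missing from target")
            continue
        tgt_type, tgt_length = target[name]
        if src_type != tgt_type:
            problems.append(f"{name}: {src_type} does not match {tgt_type}")
        elif src_type == "Character" and tgt_length < src_length:
            # Longer source values would be truncated on import.
            problems.append(
                f"{name}: target length {tgt_length} is shorter than "
                f"source length {src_length}"
            )
    return problems


# Hypothetical field definitions for illustration.
source_fields = {"site_id": ("Integer", 0), "name": ("Character", 80),
                 "surveyed": ("Date", 0)}
target_fields = {"site_id": ("Integer", 0), "name": ("Character", 40),
                 "surveyed": ("Character", 10)}

for problem in incompatible_fields(source_fields, target_fields):
    print(problem)
```

Running such a check before integration highlights the fields where data could be truncated or lost.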

Databases are often created in an ad hoc manner without consideration of later requirements. To improve interoperability the designer should ensure data complies with relevant standards. Examples include the BS7666 standard for British postal addresses and the RCHME Thesauri of Architectural Types, Monument Types, and Building Materials.

The merging of two different data sources is likely to present specific problems. When combining two GIS tables, the designer should consider the possibility that they have been constructed using different projection measurement systems (a method of representing the Earth's three-dimensional form on a two-dimensional plane and locating landmarks by a set of co-ordinates). The projection co-ordinate systems vary across nations and through time: the US has five primary co-ordinate systems in use that differ significantly from each other. The British National Grid removes this confusion by using a single co-ordinate system, but can cause problems when merging contemporary maps with pre-1940 maps that were based upon the Cassini projection. This may produce incompatibilities and unexpected results when plotted, such as moving boundaries and landmarks to different locations, that will need to be rectified before any real benefits can be gained. The designer should understand the projection system used for each layer to compensate for inaccuracies.

When recreating real-world objects created by two different people, the designer should note the degree of accuracy. One person may measure to the nearest millimetre, while the other measures to the centimetre. To check this, the designer should answer the following questions:

These subtle differences may influence the resulting model, particularly when designing smaller objects.

Digital Rights Management (DRM) refers to any method for a software developer to monitor, control, and protect digital content. It was developed primarily as an advanced anti-piracy method to prevent illegal or unauthorised distribution of content. Common examples of DRM include watermarks, licensing, and user registration. It is in use by Microsoft and other businesses to prevent unauthorised copying and use of their software (obviously, the different protection methods do not always work!).

For institutions, DRM can have limited application. Academia actively encourages free dissemination of work, so stringent restrictive measures are unnecessary. However, it will have use in limiting plagiarism of work. An institution is able to distribute lecture notes or software without allowing the user to reuse text or images within their own work. Usage of software packages or site can also be tracked, enabling specific content to be displayed to different users. To achieve these goals different methodologies are available.

As stated above, Digital Rights Management is not appropriate for all organisations. It can introduce additional complexity into the development process, limit use and cause unforeseen problems at a later date. The following questions will assess your needs:

1. Do you trust your users to use your work without plagiarising or stealing it?

2. If the answer to question 1 is yes, do you wish to track unauthorised distribution or impose rules to prevent it?

3. Will you be financially affected if your work is distributed without permission?

4. Will digital rights restrictions interfere with the project goals and legitimate usage?

5. In terms of cost and time management, can you afford to implement DRM restrictions?

6. If the answer to question 5 is yes, can you afford a strong and costly level of protection (restrictive digital rights) or weak protection (supportive) that costs significantly less?

Digital Rights methodologies can be divided into two types: supportive and restrictive. The first relies upon the user's honesty to register or acquire a licence for specific functionality. In contrast, the restrictive method assumes the user is untrustworthy, placing barriers (e.g. encryption and other preventive methods) that will thwart casual users who attempt to infringe the rights in the work.

The simplest and most cost effective DRM strategy is supportive digital rights. This requires the user to register for data before they are allowed access, blocking all non-authorised users. This assumes that individuals will be less likely to distribute content if they can be identified as the source of the leak. Web sites are the most common use of this protection method. For example, Athens, the NYTimes and other portals provide registration forms or licence agreements that the user must complete before access is allowed. The disadvantage of this protection method is that the individual can easily copy or distribute data once they have it. Supportive digital rights are suited to organisations that want to place restrictions upon who can access specific content, but do not wish to restrict content being used by legitimate users.

Restrictive digital rights are more costly, but place more stringent controls over the user. They operate by checking if the user is authorised to perform a specific action and, if not, preventing them from doing it. Unlike supportive rights management, this approach ensures that content cannot be used at a later date, even if it has been saved to hard disk. This is achieved by incorporating watermarks and other identification methods into the content.

Restrictive digital rights can be divided into two sub-categories:

The requirements for Digital rights implementations are costly and time-consuming, making them potentially unobtainable by the majority of service providers. For a data archive it is easier to prevent unauthorised access to resources than it is to limit use when they actually possess the information.

Digital rights management relies upon recording information and storing it in a standard layout that others can use to identify copyrighted work. Current digital rights approaches establish a standard metadata schema to identify ownership.

Two options are available to achieve this goal: create a bespoke solution or use an established rights schema. An established rights schema provides a detailed list of identification criteria that can be used to catalogue a collection and establish copyright holders at different stages. Two possible choices for multiple media types are:

Digital rights are an important issue, allowing an institution to establish intellectual property rights. However, implementation can be costly for small organisations that simply wish to protect their image collection. Therefore the choice of supportive or restrictive digital rights is likely to be influenced by the value of the data in relation to the implementation cost.

The digitisation of audio can be a complex process. This document contains quality assurance techniques for producing effective audio content, taking into consideration the impact of sample rate, bit-rate and file format.

Sample rate defines the number of samples that are recorded per second. It is measured in Hertz (cycles per second) or Kilohertz (thousand cycles per second). The following table describes four common benchmarks for audio quality. These offer gradually improving quality, at the expense of file size.

| Samples per second | Description |
|---|---|
| 8kHz | Telephone quality |
| 11kHz | At 8 bits, mono produces passable voice at a reasonable size. |
| 22kHz | 22k, half of the CD sampling rate. At 8 bits, mono, good for a mix of speech and music. |
| 44.1kHz | Standard audio CD sampling rate. A standard for 16-bit linear signed mono and stereo file formats. |

The audio quality will improve as the number of samples per second increases. A higher sample rate enables a more accurate reconstruction of a complex sound wave to be created from the digital audio file. To record high quality audio a sample rate of 44.1kHz should be used.

Bit-rate indicates the amount of audio data being transferred at a given time. The bit-rate can be recorded in two ways - variable or constant. A variable bit-rate creates smaller files by removing inaudible sound. It is therefore suited to Internet distribution in which bandwidth is a consideration. A constant bit-rate, in comparison, records audio data at a set rate irrespective of the content. This produces a replica of an analogue recording, even reproducing potentially unnecessary sounds. As a result, files are significantly larger than those encoded with a variable bit-rate.

Table 2 indicates how a constant bit-rate affects the quality and file size of an audio file.

| Bit rate (kbit/s) | Quality | MB/min |
|---|---|---|
| 1411 | CD quality | 10.584 |
| 192 | Good CD quality | 1.440 |
| 128 | Near CD quality | 0.960 |
| 112 | Near CD quality | 0.840 |
| 64 | FM quality | 0.480 |
| 32 | AM quality | 0.240 |
| 16 | Short-wave quality | 0.120 |
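The file sizes in the table can be reproduced with simple arithmetic. The sketch below assumes that the bit rates are in kilobits per second (1 kilobit = 1,000 bits) and that a megabyte is 1,000,000 bytes; these assumptions are not stated in the table but give matching figures. It also shows where the CD figure comes from: 44,100 samples per second at 16 bits in stereo.

```python
def pcm_bit_rate_kbps(sample_rate_hz, bit_depth, channels):
    """Bit rate of uncompressed audio in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000


def megabytes_per_minute(bit_rate_kbps):
    """Convert a constant bit rate to file size per minute of audio.

    kilobits/s -> bits/s -> bits/min -> bytes/min -> megabytes/min
    """
    return bit_rate_kbps * 1000 * 60 / 8 / 1_000_000


cd_rate = pcm_bit_rate_kbps(44_100, 16, 2)  # CD audio: 16-bit stereo at 44.1kHz
print(cd_rate, round(megabytes_per_minute(cd_rate), 3))

for rate in (192, 128, 112, 64, 32, 16):
    print(rate, round(megabytes_per_minute(rate), 3))
```

Multiplying the result by the length of a recording in minutes gives an estimate of the storage needed for a collection.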

The majority of audio formats use lossy compression to reduce file size by removing superfluous audio data. Master audio files should ideally be stored in a lossless format to preserve all audio data.

| Format | Compression | Streaming support | Bit-rate | Popularity |
|---|---|---|---|---|
| MPEG Audio Layer III (MP3) | Lossy | Yes | Variable | Common on all platforms |
| Mp3PRO (MP3) | Lossy | Yes | Variable | Limited support |
| Ogg Vorbis (OGG) | Lossy | Yes | Variable | Limited support |
| RealAudio (RA) | Lossy | Yes | Variable | Popular for streaming |
| Microsoft wave (WAV) | Lossless | Yes | Constant | Primarily for Windows |
| Windows Media (WMA) | Lossy | Yes | Variable | Primarily for Windows |

Conversion between digital audio formats can be complex. If you are producing audio content for Internet distribution, a lossless-to-lossy conversion (e.g. WAV to MP3) will significantly reduce bandwidth usage. Only lossless-to-lossy conversion is advised. Lossy-to-lossy conversion will further degrade audio quality by removing additional data, and can produce unexpected results.

Whether digitising analogue recordings or converting digital sound into another format, sample rate, bit rate and format compression will affect the resulting output. Quality assurance processes should compare the technical and subjective quality of the digital audio against the requirements of its intended purpose.

A simple suite of subjective criteria should be developed to check the quality of the digital audio. Specific checks may include the following questions:

Objective technical criteria should also be measured to ensure each digital audio file is of consistent or appropriate quality:

Digital text is one of the oldest description methods, but remains divided by differing file formats, encoding methods and schemas. When choosing a digital text format it is necessary to establish the project needs. Is plain text suitable for the task, or are text markup and formatting required? How will the information be displayed and where? This document describes these issues and provides some guidelines for their use.

Digital text has existed in one form or another since the 1960s. Many computer users take for granted that they can quickly write a letter without restriction or technical considerations. A commercial project, however, requires consideration of long-term needs and goals. To avoid complications at a later date, the developer must establish whether the tools in use are the most appropriate for the task and, if not, what can be used in their place. To achieve this, three questions should be answered:

It is often assumed that everyone can read text. However, this is not always the case. Digital text imposes restrictions upon the content that can have a significant impact upon the project.

In particular, there are two main issues:

The choice of format will be dependent upon the following factors:

For allowing universal information access, plain text remains useful. It has the advantage of being simple to interpret and small in file size. However, there are some differences in the method that is used to encode text characters. The most common variations are ASCII (American Standard Code for Information Interchange) and Unicode.
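The difference between the two encodings can be seen with a short sketch. This is an illustration only: the sample string and the helper name `fits_in_ascii` are hypothetical, and UTF-8 is assumed as the Unicode encoding.

```python
def fits_in_ascii(text):
    """Return True if every character can be stored as ASCII."""
    try:
        text.encode("ascii")
        return True
    except UnicodeEncodeError:
        return False


sample = "Café £5"

# UTF-8 uses one byte for ASCII characters and more for others.
utf8_bytes = sample.encode("utf-8")
print(len(sample), "characters,", len(utf8_bytes), "bytes in UTF-8")
print("Fits in ASCII:", fits_in_ascii(sample))

# Text that is pure ASCII is already valid UTF-8, byte for byte.
assert "plain text".encode("ascii") == "plain text".encode("utf-8")
```

Because ASCII text is already valid UTF-8, converting simple text files is straightforward; the difficulties arise with characters outside the ASCII range.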

Several problems may be encountered when storing textual information. For text files it is a simple process to convert the file to Unicode. However, for more complex data, such as databases, the conversion process will become more difficult. Problems may include:

Although ASCII and Unicode are useful for storing information, they are only able to describe each character, not how it should be displayed or organized. Structural mark-up languages enable the designer to dictate how information will appear and establish a structure to its layout. For example, the user can define a tag to store book author information and publication date.
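As a minimal sketch of the book example, the following uses Python's standard `xml.etree.ElementTree` module. The element names (`book`, `title`, `author`, `published`) and the values are hypothetical and are not taken from any particular schema.

```python
import xml.etree.ElementTree as ET

# Build a small record in which each piece of information has its own tag.
book = ET.Element("book")
ET.SubElement(book, "title").text = "An Example Title"
ET.SubElement(book, "author").text = "A. N. Author"
ET.SubElement(book, "published").text = "2004"

xml_text = ET.tostring(book, encoding="unicode")
print(xml_text)

# Because the structure is explicit, software can retrieve a field by name.
parsed = ET.fromstring(xml_text)
print(parsed.findtext("author"))
```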

The use of structural mark-up can provide many organizational benefits:

The most common markup languages are SGML and XML. Based upon these languages, several schemas have been developed to organize and define data relationships. This allows certain elements to have specific attributes that define their method of use (see Digital Rights document for more information). To ensure interoperability, XML is advised due to its support for contemporary Internet standards (such as Unicode).

Digital video can have a dramatic impact upon the user. It can convey information that is difficult to describe in words alone, and can be used within an interactive learning process. This document contains guidelines on best practice when manipulating video. When considering the recording of digital video, the digitiser should be aware of the influence of file format, bit-depth, bit-rate and frame size upon the quality of the resulting video.

Digital video consists of a series of images played in rapid succession to create the illusion of movement. It is commonly accompanied by an audio track. Unlike graphics and sound, which are relatively small in size, video data can be hundreds of megabytes, or even gigabytes, in size.

The visual and audio information are stored individually within a digital 'wrapper', an umbrella structure consisting of the video and audio data, as well as the information needed to play back and resynchronise the data.

Digital video remains a complex area that combines the problems of audio and graphic data. When choosing to encode video the designer must consider several issues:

The distribution method will have a significant influence upon the file format, encoding type and compression used in the project.

Removable media - Video distributed on CD-ROM or DVD is suited to progressive encoding methods that do not conduct extensive error checking. Although file size is not as critical as it is for Internet streaming, it continues to have some influence.

The compression type is dependent upon the need of the user and the type of removable media:

| Name | Purpose: streaming | Purpose: progressive | Purpose: media | Compression |
|---|---|---|---|---|
| Advanced Streaming Format (ASF) | Y | | | Temporal |
| Audio Video Interleave (AVI) | | Y | | Temporal |
| MPEG-1 | | Y | VideoCD | Temporal |
| MPEG-2 | | Y | DVD | Temporal |
| QuickTime (QT) | Y | Y | | Temporal |
| QuickTime Pro | Y | Y | | Temporal |
| RealMedia (RM) | Y | Y | | Temporal |
| Windows Media Video (WMV) | Y | Y | | Temporal |
| DivX | | Y | Amateur CD distribution | Temporal |
| MJPEG | | Y | | Spatial |

Table 1: A comparison list of the different file formats, highlighting their intended purpose and compression method.

The provision of video data for an Internet-based audience places specific restrictions upon the content. Quality of the video output is dependent upon three factors:

| Screen size | Pixels per frame | Bit depth (bits) | Frames per second | Bandwidth required (megabits per second) |
|---|---|---|---|---|
| 640 x 480 | 307,200 | 24 | 30 | 221.184 |
| 320 x 240 | 76,800 | 16 | 25 | 30.72 |
| 320 x 240 | 76,800 | 8 | 15 | 9.216 |
| 160 x 120 | 19,200 | 8 | 10 | 1.536 |
| 160 x 120 | 19,200 | 8 | 5 | 0.768 |

Table 2: Indication of the influence that screen size, bit-depth and frames per second have upon required bandwidth
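The bandwidth figures in Table 2 are the product of pixels per frame, bit depth and frame rate for uncompressed video. A short sketch of the arithmetic, assuming 1 megabit = 1,000,000 bits (the function name is hypothetical):

```python
def required_bandwidth_mbits(width, height, bit_depth, frames_per_second):
    """Uncompressed video bandwidth in megabits per second."""
    bits_per_second = width * height * bit_depth * frames_per_second
    return bits_per_second / 1_000_000


# (width, height, bit depth, frames per second) for each row of the table.
rows = [
    (640, 480, 24, 30),
    (320, 240, 16, 25),
    (320, 240, 8, 15),
    (160, 120, 8, 10),
    (160, 120, 8, 5),
]

for width, height, depth, fps in rows:
    mbits = required_bandwidth_mbits(width, height, depth, fps)
    print(f"{width} x {height}, {depth}-bit, {fps} fps: {mbits} megabits per second")
```

Halving both screen dimensions cuts the requirement to a quarter, which is why small frame sizes are so common for Internet video.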

When creating video, the designer must balance the video quality with the facilities available to the end user. As an example, 8-bit video of 160 x 120 pixels at 10-15 frames per second is used for the majority of content found on the Internet.

Video presents numerous problems for the designer caused by the complexity of formats and structure. Problems may include:

Temporal Compression - Reduces the amount of data stored over a sequence of frames. Rather than describing every pixel in each frame, temporal compression stores a key frame, followed by descriptive information on changes.
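The key-frame idea can be illustrated with a toy sketch. This is not how any real codec is implemented (real codecs also use techniques such as motion estimation); it assumes each frame is a flat list of pixel values, and the function names are hypothetical.

```python
def encode(frames):
    """Store the first frame whole, then only the pixels that change."""
    key_frame = list(frames[0])
    deltas = []
    previous = key_frame
    for frame in frames[1:]:
        # Record (position, new value) for each pixel that differs.
        changes = [
            (i, new)
            for i, (old, new) in enumerate(zip(previous, frame))
            if old != new
        ]
        deltas.append(changes)
        previous = frame
    return key_frame, deltas


def decode(key_frame, deltas):
    """Rebuild every frame by applying the changes in order."""
    frames = [list(key_frame)]
    for changes in deltas:
        frame = list(frames[-1])
        for i, value in changes:
            frame[i] = value
        frames.append(frame)
    return frames


frames = [[0, 0, 0, 0], [0, 9, 0, 0], [0, 9, 9, 0]]
key_frame, deltas = encode(frames)
print(key_frame, deltas)
print(decode(key_frame, deltas) == frames)
```

When little changes between frames, the lists of changes are far shorter than the frames themselves, which is where the saving comes from.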

Spatial Compression - Condenses each frame independently by mapping similar pixels within a frame. For example, two shades of red will be merged. This results in a reduction in image quality, but enables the file to be edited in its original form.
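The merging of similar shades can be sketched as simple colour quantisation. This is an illustrative simplification, not the algorithm used by MJPEG or any other format; the step size of 32 and the function name are assumptions.

```python
def quantise(pixel, step=32):
    """Round each colour channel down to a multiple of `step`."""
    return tuple((channel // step) * step for channel in pixel)


# Two slightly different shades of red as (red, green, blue) values.
shade_a = (200, 10, 10)
shade_b = (210, 20, 15)

print(quantise(shade_a), quantise(shade_b))
print("Merged:", quantise(shade_a) == quantise(shade_b))
```

Both shades map to the same stored colour, so fewer distinct values need to be recorded, at the cost of some image quality.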

Progressive Encoding - Refers to any format where the user is required to download the entire video before they are allowed to watch it.

Internet Streaming - Enables the viewer to watch sections of video without downloading the entire file, allowing users to evaluate video content after just a few seconds. Quality is significantly lower than progressive formats because of the heavier compression used.

Internet IPR is inherently complex. It cuts across geographical boundaries, creating situations that are illegal in one country yet legal in another, or that contradict existing laws on Intellectual Property. Copyright is a subset of IPR, which applies to all artistic works. It is automatically assigned to the creator of original material, allowing them to control all public usage (copying, adaptation, performance and broadcasting).

Ensuring that your organization complies with Intellectual Property rights requires a detailed understanding of two processes:

Unless indicated otherwise, copyright is assigned to the author of an original work. When producing work it is essential to establish who will own the resulting product: the individual or the institution. Objects produced at work or university may belong to the institution, depending upon the contract signed by the author. For example, the copyright for this document belongs to the AHDS, not the author. When approaching the subject, the author should consider several issues:

When producing work as an individual that is intended for later publication, the author should establish ownership rights to indicate how work can be used after initial publication:

Copyright is an automatically assigned right. It is therefore likely that the majority of works in a digital collection will be covered by copyright, unless explicitly stated otherwise. The copyright clearance process requires the digitiser to check the copyright status of:

Copyright clearance should be established at the beginning of a project. If clearance is denied after the work has been included in the collection, it will require additional effort to remove it and may result in legal action from the author.

In the event that an author, or authors, cannot be contacted, the project is required to demonstrate that it has taken steps to contact them. Digital preservation projects are particularly difficult in this respect, as the researcher and the copyright owner may be separated by many years. In many cases, such as the 1986 Domesday project, it has proven difficult to trace authorship of 1000+ pieces of work to individuals. In this project, the designers created a method of establishing permission and registering objections by providing contact details that an author could use to identify their work.

If permission has been granted to reproduce copyright work, the institution is required by law to indicate intellectual property status. Metadata is commonly used for this purpose, storing and distributing IP data for online content. Several metadata bodies provide standardized schemas for copyright information. For example, IP information for a book could be stored in the following format.
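One possible encoding is sketched here, using Dublin Core element names as an assumption rather than as the schema any particular metadata body requires. The `record` wrapper element and all of the values are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace of the Dublin Core Metadata Element Set, version 1.1.
DC = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("dc", DC)

record = ET.Element("record")  # hypothetical wrapper element
fields = [
    ("title", "An Example Book"),
    ("creator", "A. N. Author"),
    ("publisher", "Example Press"),
    ("date", "2004"),
    ("rights", "Copyright A. N. Author 2004. Reproduced with permission."),
]
for name, value in fields:
    ET.SubElement(record, f"{{{DC}}}{name}").text = value

print(ET.tostring(record, encoding="unicode"))
```

Keeping the rights statement in its own element means it can be harvested and displayed alongside the resource wherever the record is reused.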


Access inhibitors can also be set to identify copyright limitations and the methods necessary to overcome them. For example, e-book use could be limited to IP addresses within a university environment.
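A minimal sketch of such an IP-based restriction, using Python's standard `ipaddress` module, is shown below. The network range is a reserved documentation range standing in for a real campus network, and the function name is hypothetical.

```python
import ipaddress

# Hypothetical campus network; 192.0.2.0/24 is reserved for documentation.
CAMPUS_NETWORKS = [ipaddress.ip_network("192.0.2.0/24")]


def may_access(client_ip):
    """Return True if the client address lies within a campus network."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in CAMPUS_NETWORKS)


print(may_access("192.0.2.17"))
print(may_access("198.51.100.5"))
```

In practice a check of this kind would normally be combined with another route, such as a login, for authorised users who are off campus.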

Digitisation often involves working with hundreds or thousands of images, documents, audio clips or other types of source material. Ensuring these objects are consistently digitised and to a standard that ensures they are suitable for their intended purpose can be complex. Rather than being considered as an afterthought, quality assurance should be considered as an integral part of the digitisation process, and used to monitor progress against quality benchmarks.

The majority of formal quality assurance standards, such as ISO 9001, are intended for large organisations with complex structures. A smaller project will benefit from establishing its own quality assurance procedures, using these standards as a guide. The key is to understand how work is performed and identify key points at which quality checks should be made. A simple quality assurance system can then be implemented that will enable you to monitor the quality of your work, spot problems and ensure the final digitised object is suitable for its intended use.

ISO 9001 identifies three steps to the introduction of a quality assurance system:

First and foremost, any system for assuring quality in the digitisation process should be straightforward and not impede the actual digitisation work. Effective quality assurance can be achieved by performing four processes during the digitisation lifecycle:

The ISO 9001 system is particularly useful in identifying clear guidelines for quality management.

Digitisation projects should implement a simple quality assurance system. Implementing internal quality assurance checks within the workflow allows mistakes to be spotted and corrected early on, and also provides points at which work can be reviewed and improvements to the digitisation process implemented.

Any image that is to be archived for future use requires specific storage considerations. However, the choice of file formats is diverse, and each offers advantages and disadvantages that make it better suited to a specific environment. When digitising images, a standards-based, best-practice approach should be taken, using images that are appropriate to the medium in which they are used. For disseminating the work to others, a multi-tier approach is necessary, so that both a preservation copy and a dissemination copy are stored. This document will discuss the formats available, highlighting the different compression types, advantages and limitations of raster images.