NOF-digitise Technical Advisory Service

Monitoring the performance of your Web site is an important task in understanding the level of its success. NOF-digitise projects are expected to use performance indicators to provide an objective measure of the usage of their Web service. This should be done by monitoring Web server log files and by providing regular reports to NOF.

Web Statistics

Web site statistics are an important part of any Web site. They can show that your site is increasing in popularity or that further dissemination is needed. They can also indicate who your users are and where they come from, which in turn helps you to bring in more users. Some statistical analysis programs can even help resolve problem areas such as broken links.

However, it is worth noting that statistics can be misleading and should always be viewed as objectively as possible. Influencing factors quite often cause unexpected results. One way of getting clearer figures is to use more than one statistical analysis system.

About Web Statistics

Web statistics are produced by the Web server software. The raw data will be produced by default: no additional configuration is needed to produce the server's default set of usage data.

The server log file records information on each request (normally referred to as a "hit") for a resource on the Web server. Information included in the server log file includes the name of the resource, the IP address (or domain name) of the user making the request, the name of the browser (more correctly referred to as the "user agent") issuing the request, the size of the resource, date and time information, and whether the request was successful or not (with an error code if it was not). In addition many servers will be configured to store additional information, such as the "referer" (sic) field, which holds the URL of the page the user was viewing before clicking on a link to get to the resource.

An example of a server log file is shown below.
#Software: Microsoft Internet Information Server 4.0 #Version: 1.0 #Date: 1999-12-25 00:00:21 #Fields: date time c-ip cs-username cs-method cs-uri-stem cs-uri-query sc-status sc-bytes cs(User-Agent) cs(Cookie) cs(Referer) 1999-12-25 00:00:21 194.237.174.119 - GET /issue1/jobs/Default.asp - 200 20407 AltaVista-Intranet/V2.3A+(www.altavista.co.uk+jan.gelin@av.com) - - 1999-12-25 00:03:39 194.237.174.119 - GET /statistics/ExpIntHits1.asp - 200 10519 AltaVista-Intranet/V2.3A+(www.altavista.co.uk+jan.gelin@av.com) - - 1999-12-25 00:26:54 209.67.247.158 - GET

/robots.txt - 200 303

FAST-WebCrawler/2.0.9+ 1999-12-25 00:32:47 194.237.174.119 - GET /issue2/default.asp - 200 5332 AltaVista-Intranet/V2.3A+(www.altavista.co.uk+jan.gelin@av.com) - - 1999-12-25 01:49:54 206.186.25.7 - GET

/resources/_img/main/bg.gif - 200 300

Mozilla/2.0+(compatible;+MSIE+3.02;+AK;+Windows+NT)

ASPSESSIONIDGQQGQGAD=IIHCBIFDIECKPAPGICDEOJII;+ 1999-12-25 01:49:54 206.186.25.7 - GET /issue1/Webtechs/Default.asp - 200 24659 Mozilla/2.0+(compatible;+MSIE+3.02;+AK;+Windows+NT) - http://www.statslab.cam.ac.uk/%7Esret1/analog/Webtechs.html 1999-12-25 01:49:54 206.186.25.7 - GET

/resources/_img/main/global_home_h.gif - 200 487

Mozilla/2.0+(compatible;+MSIE+3.02;+AK;+Windows+NT)

ASPSESSIONIDGQQGQGAD=IIHCBIFDIECKPAPGICDEOJII;+ 1999-12-25 01:49:54 206.186.25.7 - GET

/resources/_img/main/global_search.gif - 200 534

Mozilla/2.0+(compatible;+MSIE+3.02;+AK;+Windows+NT)

ASPSESSIONIDGQQGQGAD=IIHCBIFDIECKPAPGICDEOJII;+ 1999-12-25 01:49:56 206.186.25.7 - GET

/resources/_img/main/local_home01.gif - 200 663

Mozilla/2.0+(compatible;+MSIE+3.02;+AK;+Windows+NT)

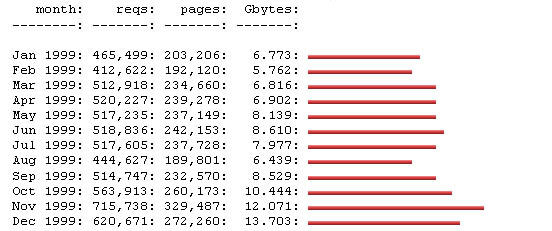

ASPSESSIONIDGQQGQGAD=IIHCBIFDIECKPAPGICDEOJII;+ Sample Web Server Log File Above you can see the first few records of the server log file for the Exploit Interactive Web magazine [1] for Christmas Day, 1999. The first four lines are comments. The first line of data shows that at 21 seconds past midnight on Christmas Day a computer with the IP address 194.237.174.119 issued a GET request (the normal method for requesting a resource) for the resource http://www.exploit-lib.org/issue1/jobs/Default.asp. The resource was 20,407 bytes and the resource was transferred successfully (a 200 error code). The resource was requested by the AltaVista-Intranet user agent (a robot which indexes Web sites). The first four records are from a Web robot from AltaVista. However at 01:49 there is a request for http://www.exploit-lib.org/issue1/Webtechs/Default.asp resource. This request is issued by a Mozilla/2.0 browser (the code for Netscape) and the user was following a link from http://www.statslab.cam.ac.uk/%7Esret1/analog/Webtechs.html. It will be noticed that this request is accompanied by requests for a number of images (.gif files). It is not too difficult to see how this raw data can be used to provide graphical displays showing growth in the numbers of hits, profiles of the browsers used to access the Web site, etc. An example of a simple display of changes in the number of hits during 1999 for the University of Cambridge Statistical Laboratory Web site is shown below.

Web Server Statistics for the Statistical Laboratory, University of Cambridge

Interpreting Web Statistics

The Meaning of "Hits"

If the number of hits shows steady growth over an extended period, is this a clear indication of growth in the popularity of the service? The answer, quite simply, is no. If the number of hits on your Web site grows by, say, 50% over a year, the number of visitors could actually be decreasing. On the other hand, the number of visitors could be growing at a far greater rate. The number of hits received by a Web site is influenced by several factors:

As a consequence of the points mentioned above, usage summaries will overestimate the number of visitors who make use of the information or services provided by a Web site. However, it should be pointed out that there are other factors which will result in an underestimation of the number of visitors:

The Meaning of "User sessions"

A number of Web statistics analysis packages provide information on the number of user sessions (visitors). Is this information more meaningful than "hits"? We must first define the term. A user session can be defined as a series of requests from a unique IP address within a specified period of time (often 30 minutes). So a growth in the number of user sessions will not be affected by changes in the architecture of the Web site (e.g. more images being added).

So are user sessions a more relevant indicator? The answer is yes, but user sessions can still be misleading. User sessions will still be distorted by robots, one-off visitors and caching. They will also be affected by multi-user machines: if a PC is used by several people, they will be regarded as the same user. More worryingly, an institutional cache or firewall will be treated as a single user. So if you are pleased to notice a growth in the average time spent by users at your Web site, this could be the result of many one-off visitors who are behind the same firewall or cache and who are accessing your Web site independently of each other.

Are Web Statistics Worthless?

Jeff Goldberg from the Cranfield University Computer Centre has written a document entitled "On Interpreting Web Statistics" which argues that Web usage statistics are (worse than) meaningless [4]. Although this document is now quite old, we have seen that its central point still holds: Web statistics can be misleading. Does this mean that we should forget about Web statistics as a performance indicator? We at the NOF-digitise Technical Advisory Service feel that the answer to this is no. Although, as Susan Haigh and Janette Megarity point out in their report on "Measuring Web Site Usage: Log File Analysis", "log file analysis is perhaps best viewed as an art disguised as a science" [5], Web statistics do provide valuable information.
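The user session definition given above (a series of requests from one IP address with no gap longer than about 30 minutes) can be sketched in a few lines of Python. This is only an illustration, not the method used by any particular analysis package: the function name `count_sessions` and the 30-minute default are assumptions, and the sample records are shortened versions of those in the sample log (trailing fields omitted).

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Assumed cut-off between sessions; the text notes 30 minutes is common.
SESSION_TIMEOUT = timedelta(minutes=30)


def count_sessions(log_lines, timeout=SESSION_TIMEOUT):
    """Count user sessions in extended-format log lines.

    Assumes every record starts with the fields 'date time c-ip',
    as in the sample log; lines starting with '#' are comments.
    """
    times_by_ip = defaultdict(list)
    for line in log_lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        date, time, ip = line.split()[:3]
        stamp = datetime.strptime(date + " " + time, "%Y-%m-%d %H:%M:%S")
        times_by_ip[ip].append(stamp)

    sessions = 0
    for times in times_by_ip.values():
        times.sort()
        sessions += 1  # the first request from an address opens a session
        for previous, current in zip(times, times[1:]):
            if current - previous > timeout:
                sessions += 1  # a long gap starts a new session
    return sessions


# Shortened records taken from the sample log (trailing fields omitted).
SAMPLE_LOG = """\
#Fields: date time c-ip cs-username cs-method cs-uri-stem
1999-12-25 00:00:21 194.237.174.119 - GET /issue1/jobs/Default.asp
1999-12-25 00:03:39 194.237.174.119 - GET /statistics/ExpIntHits1.asp
1999-12-25 00:26:54 209.67.247.158 - GET /robots.txt
1999-12-25 00:32:47 194.237.174.119 - GET /issue2/default.asp
1999-12-25 01:49:54 206.186.25.7 - GET /resources/_img/main/bg.gif
1999-12-25 01:49:54 206.186.25.7 - GET /issue1/Webtechs/Default.asp
1999-12-25 01:49:56 206.186.25.7 - GET /resources/_img/main/local_home01.gif
"""

print(count_sessions(SAMPLE_LOG.splitlines()))  # 3 sessions from 7 hits
```

Note that the sketch inherits every weakness discussed above: the two robots each count as a "visitor", and everyone behind a shared cache or firewall would be merged into one session.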
It may, however, be necessary to carry out data mining in order to detect patterns which are hidden from simple analyses.

Resource Implications

So what are the resource implications of providing meaningful summaries of Web usage statistics?

Initially the Web server must be configured appropriately. For example, what information should be recorded? Should IP addresses be resolved (so that domain names such as bath.ac.uk will be stored in the log files) or, in order to maximise the performance of the Web server, should the resolution take place when the statistics are being analysed?

It may be necessary to develop automated processes for managing server log files. Server log files can be very large, which has implications for disk storage and for the processing power of the computer which will carry out the analysis: a powerful computer may be needed.

It is desirable that automated processes are implemented for analysing server log files. This will normally require use of a server system (often a Unix or NT server). Recent versions of log analysis software enable automated analyses to be initiated from within the application. However this may not be possible in entry-level packages.

Although several analysis packages are freely available, the requirements for data mining or automation may necessitate the purchase of an expensive package, or significant software development or system configuration effort if a solution based on free tools is required.

Producing the Statistics

A wide range of Web statistical analysis packages is available, including free packages such as Analog [6], Analog's companion package Report Magic [7], Webalizer [8] and aWebVisit [9]. Licensed products include WebTrends [10] and Accrue's HitList [11]. A more complete listing is available at Internet Product Watch [12] and Yahoo! [13].
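Where a project scripts part of its own analysis, the option raised under Resource Implications of resolving IP addresses at analysis time rather than on the Web server might look roughly like the sketch below. It uses Python's standard `socket.gethostbyaddr` call and caches each answer so that an address appearing thousands of times in a log is only looked up once. The name `make_resolver` is hypothetical, and falling back to the raw address when no host name is found is an assumption, not a recommendation from this paper.

```python
import socket


def make_resolver(lookup=socket.gethostbyaddr):
    """Return a function that maps an IP address to a host name.

    Results are cached, so each distinct address triggers at most one
    DNS lookup however often it occurs in the log file.
    """
    cache = {}

    def resolve(ip):
        if ip not in cache:
            try:
                # gethostbyaddr returns (hostname, aliases, addresses)
                cache[ip] = lookup(ip)[0]
            except OSError:
                # No reverse DNS entry (or lookup failed): keep the address
                cache[ip] = ip
        return cache[ip]

    return resolve
```

A log-processing script would create one resolver with `resolve = make_resolver()` and call `resolve(ip)` for the c-ip field of each record. The `lookup` parameter exists only so that the function can be exercised without network access.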
An alternative approach to using Web statistical analysis packages is to make use of externally-hosted statistical analysis services. Such services include NedStat [14], SiteMeter [15], SuperStats [16] and Stats4All [17]. As described in an Ariadne article [18], there appears to be a growing market for a range of externally-hosted services. They have the advantage of being easy to set up. In many cases they are free and are funded by advertising, although there may be a licensed version which is free of advertising.

These services typically work by providing a small icon which is included on pages on the Web site. When a visitor accesses a page containing the icon, a request for the image is sent to the company hosting the service. The request is logged. The log file may store not only the date and time of the request, but also a variety of additional information, such as the "referer" (sic) field (how did the visitor get to the page?) and the search engine query string (if the visitor used a search engine to get to the page, what search term did they enter?). In addition to the information which would be recorded in the local Web server's log file, the externally-hosted services often include JavaScript code which can interrogate the visitor's client machine and obtain information on the operating system, browser, screen resolution, etc.

Arguably one of the advantages of externally-hosted Web statistical analysis services is the ease of access to the reports. Typically, clicking an icon (such as the icon to be found near the bottom of the page) will provide access to the information.

Externally-hosted Web Statistical Analysis Services

Below is a review of two externally-hosted Web statistical analysis services. The review is not intended as an endorsement.

SiteMeter

SiteMeter is used to provide site (as opposed to page) statistics. Features of SiteMeter include:

In addition to the graphical display, a weekly email summary can be received. SiteMeter also provides a management interface which can be used to customise the display and which allows the public display of the information to be suppressed.

NedStat

With NedStat a unique id is used to enable statistics for individual pages to be provided. Details such as the total number of page views and the busiest day so far are given. Examples of the use of NedStat can be seen in the Cultivate Interactive Web magazine [19].

Discussion

There is growing interest in the use of externally-hosted Web services. But are remote services reliable? Some issues that you will need to consider include:

These services naturally have their limitations. They will typically only provide detailed information for a short period (for example, the last month) and it is not normally possible, with the free versions of the services, to carry out "data mining". They will also not provide information about, say, errors, which will be available in server log files. The services also depend on the availability of the remote service and the network. This may be a concern to some, although as network performance and reliability increase the dangers should be reduced.

An important point to be borne in mind is that meaningful Web statistics can be difficult to obtain. Externally-hosted Web statistics services are dependent on the use of graphical icons. Text browsers, browsers with images switched off and robot software will not be recorded by these services. However, since the images are non-cacheable objects, these services should overcome the problem of missing hits which affects server log files.

These issues are discussed further in an article on Externally-Hosted Web Statistics Services in Exploit Interactive [20].

Other Performance Indicators

Links to Your Web Site

As described in articles appearing in Ariadne [21] and in Exploit Interactive [22], it is possible to obtain details on links to your Web site using services such as LinkPopularity.com [23]. As the articles mention, the quality of the information provided may be questionable. However, as with Web usage statistics, as long as the reservations are borne in mind, information and trends in the number of links to a Web site may provide a valuable insight into how useful the community finds the Web site.

Search Engine Coverage

Depending on the role of the Web service, it may be desirable for its content to be available through search engines such as AltaVista. As described in the dissemination section of the Programme Manual, there are a number of approaches to using the Web to promote your Web site.
The approaches discussed in the section on recording the number of links to your Web site can also be used to record the number of resources held in a search engine. Further information is available in the article on Promoting Your Project Web Site [24].

Assessing the Quality of your Service

As well as indicators of access to a Web site, it is also desirable to provide indicators of access problems. This may include information on broken links and server availability statistics.

Broken Links

We've all come across 404 error messages which indicate a broken link, so we know how irritating they are. It is desirable that Web services should minimise the number of broken links. This should include internal links and links to external resources.

Link checkers can be used to detect broken links. Many authoring packages will provide link-checking capabilities. In addition there are a number of dedicated link-checking applications available, some of which are listed at Yahoo! [25].

It should be noted that simple link-checking software will typically only report on simple hypertext links (the <A HREF=".."> element) and inline images (the <IMG SRC=".."> element). In the current generation of Web sites there are likely to be several other types of links, including links to style sheet files, links to images within style sheets, links in JavaScript, links in HTML FORMs, etc. If link-checking software which can report on such links is not available, it will be necessary to analyse the server log files in order to detect user requests for unavailable resources.

Server Availability

Information on server availability is important. The server may be managed by the service's technical staff or by a central IT department. Procedures for detecting when the server is unavailable and systematically recording the down time may be available.
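The log-based check for broken links mentioned under Broken Links can be sketched as follows. This is a minimal illustration which assumes an extended-format log with a `#Fields:` directive naming `cs-uri-stem`, `sc-status` and `cs(Referer)`, as in the sample log earlier in this paper. The function name `broken_requests` and the sample records (which use reserved example addresses and an example.org host) are invented for the illustration and are not taken from a real log.

```python
from collections import Counter


def broken_requests(log_lines):
    """Count requests that returned status 404.

    Returns a Counter keyed by (requested URI, referring page), so the
    page holding the broken link can be found as well as the target.
    Field positions are read from the '#Fields:' directive.
    """
    fields = []
    missing = Counter()
    for line in log_lines:
        line = line.strip()
        if line.startswith("#Fields:"):
            fields = line[len("#Fields:"):].split()
            continue
        if not line or line.startswith("#"):
            continue
        record = dict(zip(fields, line.split()))
        if record.get("sc-status") == "404":
            uri = record.get("cs-uri-stem")
            referer = record.get("cs(Referer)", "-")
            missing[(uri, referer)] += 1
    return missing


# Invented records for illustration only.
EXAMPLE_LOG = """\
#Fields: date time c-ip cs-method cs-uri-stem sc-status cs(Referer)
2000-01-01 10:00:00 192.0.2.1 GET /index.html 200 -
2000-01-01 10:00:05 192.0.2.1 GET /old/page.html 404 http://www.example.org/links.html
2000-01-01 10:02:00 192.0.2.7 GET /old/page.html 404 http://www.example.org/links.html
2000-01-01 10:03:00 192.0.2.7 GET /css/site.css 404 -
"""

for (uri, referer), count in broken_requests(EXAMPLE_LOG.splitlines()).most_common():
    print(count, uri, "linked from", referer)
```

Because it works from requests that users actually made, this approach picks up broken style sheet, script and form links that a simple link checker would miss, but it only finds broken links that somebody has already followed.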
Where no in-house procedures for detecting and recording server down time are in place, third-party services are available which can monitor services and provide automated notification in a variety of ways, such as by email or by pager. An example of a third party which provides this type of service is WatchDog [26]. It should be noted that the professional versions of Web statistical software such as WebTrends [10] will also provide monitoring functions.

Conclusion

Procuring figures on usage of your Web site is very important for your project and for NOF. This information paper has shown that there are a number of ways of analysing these statistics. It has also shown that there are numerous other approaches to understanding your site's performance and quality.

References


Acknowledgements

This paper was commissioned from Brian Kelly by UKOLN on behalf of the New Opportunities Fund in association with the People's Network and is one of a series of Information Papers that will be produced by the NOF Technical Advisory Service. Queries about the Information Papers should be addressed to:

Marieke Napier

UKOLN is funded by Resource: The Council for Museums, Archives & Libraries, the Joint Information Systems Committee (JISC) of the Higher and Further Education Funding Councils, as well as by project funding from the JISC and the European Union. UKOLN also receives support from the University of Bath where it is based.
