This page is for printing out all of the case studies. Note that some of the internal links may not work.

The Exploit Interactive e-journal [1] was funded by the EU's Telematics For Libraries programme to disseminate information about projects funded by the programme. The e-journal was produced by UKOLN, University of Bath.

Exploit Interactive made use of Dublin Core metadata in order to provide enhanced local search facilities. This case study describes the approaches taken to the management and use of the metadata, difficulties experienced and lessons which have been learnt.

Metadata needed to be provided in order to allow richer searching than would be possible using standard free-text indexing. In particular it was desirable to allow users to search on a number of fields including Author, Title and Description.

In addition it was felt desirable to allow users to restrict searches by issue, by article type (e.g. feature article, regular article, news, etc.) and by funding body (e.g. EU, national, etc.). These facilities would be useful not only for end users but also for the editorial team in order to collate statistics needed for reports to the funders.

The metadata was stored in an article_defaults.ssi file which was held in the directory containing an article. The metadata was held as VBScript assignments. For example, the metadata for the article The XHTML Interview [2] was stored as:

doc_title = "The XHTML Interview"

author="Kelly, B."

title="WebWatching National Node Sites"

description = "In this issue's Web Technologies column we ask Brian Kelly to tell us more about XHTML."

article_type = "regular"

This file was included into the article and converted into HTML <META> tags using a server-side include file.

Storing the metadata in a neutral format and then converting it into HTML <META> tags using a server-side script meant that the metadata could be converted into other formats (such as XHTML) by making a single alteration to the script.
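
The conversion step described above can be sketched in a few lines. This is only an illustration written in Python rather than the server-side VBScript the e-journal used. The DC.Title name appears later in this case study, but the other Dublin Core names in the mapping, and the function names, are assumptions made for the example.

```python
import html
import re

# Mapping from the neutral field names to <meta> names.
# Only DC.Title is taken from the case study; the rest are assumed.
FIELD_TO_DC = {
    "doc_title": "DC.Title",
    "author": "DC.Creator",
    "description": "DC.Description",
    "article_type": "DC.Type",
}

# Matches lines of the form: name = "value"
ASSIGNMENT = re.compile(r'^\s*(\w+)\s*=\s*"(.*)"\s*$')


def parse_assignments(text):
    """Read simple name = "value" lines into a dictionary."""
    fields = {}
    for line in text.splitlines():
        match = ASSIGNMENT.match(line)
        if match:
            fields[match.group(1)] = match.group(2)
    return fields


def to_meta_tags(fields, xhtml=False):
    """Render the known fields as <meta> tags; xhtml=True self-closes them."""
    close = " />" if xhtml else ">"
    tags = []
    for name, dc_name in FIELD_TO_DC.items():
        if name in fields:
            content = html.escape(fields[name], quote=True)
            tags.append(f'<meta name="{dc_name}" content="{content}"{close}')
    return tags
```

Because the output format is decided in one place (to_meta_tags), switching from HTML to XHTML syntax is a single change, which is the benefit the neutral storage format was intended to give.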

It was possible to index the contents of the <META> tags using Microsoft's SiteServer software in order to provide enhanced search facilities, as illustrated below.

Figure 1: Standard Search Interface

As illustrated in Figure 1 it is possible to search by issue, article type, project category, etc.

Alternative approaches to providing the search interface are possible. An interface which uses a Windows Explorer style is shown in Figure 2.

Figure 2: Alternative Search Interface

Initially when we attempted to index the metadata we discovered that it was not possible to index <META> tags whose names contain a full stop, such as <meta name="DC.Title" content="The XHTML Interview">.

However we found a procedure which allowed the <META> tags to be indexed correctly. We have documented this solution [3] and have also published an article describing this approach [4].

During the two-year lifetime of the Exploit Interactive e-journal three editors were responsible for its publication. The different editors are likely to have taken slightly different approaches to the creation of the metadata. Although the format for the author's name was standardised (surname, initial), the approach to the creation of keywords, description and other metadata was not formally documented and so, inevitably, different approaches will have been adopted. In addition there was no systematic checking for the existence of all necessary metadata fields and so some may have been left blank.
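
The kind of systematic check which was missing could be quite small. The following Python sketch is illustrative only: the list of required fields is taken from the example metadata shown earlier, and the pattern for the author's name (a single author in "Surname, I." form) is an assumption about how the house style might be expressed.

```python
import re

# Fields every article is assumed to need (taken from the example above).
REQUIRED_FIELDS = ("doc_title", "author", "description", "article_type")

# Assumed house style for a single author: surname, comma, initials ("Kelly, B.").
AUTHOR_FORMAT = re.compile(r"^[^,]+, (?:[A-Z]\.)+$")


def check_metadata(fields):
    """Return a list of problems found in one article's metadata."""
    problems = []
    for name in REQUIRED_FIELDS:
        if not fields.get(name, "").strip():
            problems.append(f"missing or empty field: {name}")
    author = fields.get("author", "").strip()
    if author and not AUTHOR_FORMAT.match(author):
        problems.append(f"author not in 'Surname, I.' form: {author}")
    return problems
```

Run over every article before an issue is published, a check of this sort would report blank fields and inconsistent author names while they are still easy to correct.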

The approaches which were taken provided a rich search service for our readers and enabled the editorial team to easily obtain management statistics. However if we were to start over again there are a number of changes we would consider making.

Although the metadata is stored in a neutral format which allows the format in which it is represented to be changed by updating a single server-side script, the metadata is closely linked with each individual article. The metadata cannot easily be processed independently of the article. It is desirable, for example, to be able to process the metadata for every article in a single operation - in order to, for example, make the metadata available in OAI format for processing by an OAI harvester.

In order to do this it is desirable to store the metadata in a database. This would also have the advantage of allowing the metadata to be managed and errors (e.g. variations in authors' names) to be corrected.

Use of a database as part of the workflow process would enable greater control to be applied to the metadata: for example, it would enable metadata such as keywords, article type, etc. to be chosen from a fixed vocabulary, thus removing the danger of the editor misspelling such entries.
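
As a rough illustration of this idea, the Python sketch below keeps the metadata in a SQLite database and only accepts article types from a fixed vocabulary. The table name, column names and function names are hypothetical, and the vocabulary is simply the set of article types mentioned earlier in this case study.

```python
import sqlite3

# Fixed vocabulary, based on the article types named in the case study.
ARTICLE_TYPES = ("feature", "regular", "news")


def create_store(connection):
    """Create a single (hypothetical) table holding one row per article."""
    connection.execute(
        """CREATE TABLE article (
               url TEXT PRIMARY KEY,
               title TEXT NOT NULL,
               author TEXT NOT NULL,
               article_type TEXT NOT NULL
           )"""
    )


def add_article(connection, url, title, author, article_type):
    """Insert an article, rejecting types outside the fixed vocabulary."""
    if article_type not in ARTICLE_TYPES:
        raise ValueError(f"unknown article type: {article_type!r}")
    connection.execute(
        "INSERT INTO article VALUES (?, ?, ?, ?)",
        (url, title, author, article_type),
    )


def count_by_type(connection):
    """Process the metadata for every article in a single operation."""
    rows = connection.execute(
        "SELECT article_type, COUNT(*) FROM article "
        "GROUP BY article_type ORDER BY article_type"
    )
    return dict(rows.fetchall())
```

With the metadata held in one place, a report for the funders (such as count_by_type) or an export in another format becomes a single query rather than a pass over every article directory.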

Brian Kelly

UKOLN

University of Bath

BATH

Email: b.kelly@ukoln.ac.uk

The FAILTE project [1] was funded by JISC to provide a service which engineering lecturers in higher education could use to identify and locate electronic learning resources for use in their teaching. The project started in August 2000. One of the first tasks was to set up a project Web site describing the aims, progress and findings of the project and the people involved.

Figure 1: The FAILTE home page

As an experienced Web author I decided to use this opportunity to experiment with two specifications which at that time were relatively new, namely cascading style sheets (CSS) and XHTML. At the same time I also wanted to create pages which looked reasonably attractive on the Web browsers in common use (including Netscape 4.7, which has poor support for CSS) and which would at least display intelligible text no matter what browser was used.

Here is not the place for a detailed discussion of the merits of separating logical content markup from formatting, but I will say that I think that, since this is how HTML was envisaged by its creators, it works best when used in this way. Some of the reasons at the time of starting the Web site were:

A quick investigation of the Web server log files from a related server, which dealt with the same user community as our project targeted, led us to the decision that we should worry about how the Web site looked on Netscape 4.7, but not on browsers with poorer support for XHTML and CSS (e.g. Netscape 4.5 and Internet Explorer 3).

The Web site was a small one, and there would be one contributor: me. This meant that I did not have to worry about the lack of authoring tools for XHTML at the time of setting up the Web site. I used HomeSite version 4.5, a text editor for raw HTML code, mainly because I was familiar with it. Divisions (<div> tags) were used in place of tables to create areas on the page (a banner at the top, a side bar for providing a summary of the page content), graphics were used sparingly, and colour was used to create a consistent and recognisable look across the site. It is also worth noting that I approached the design of the Web site with the attitude that I could not assume that it would be possible to control layout down to the nearest point.

While writing the pages I tested mainly against Netscape 4.7, since this had the poorest support for XHTML and CSS. I also made heavy use of the W3C XHTML and CSS validation services [2], and of Bobby [3] to check for accessibility issues. Once the code validated and achieved the desired effect in Netscape 4.7 I checked the pages against a variety of browser platforms.

While it was never my aim to comply with a particular level of accessibility, the feedback from Bobby allowed me to enhance accessibility while building the pages.

Most of the problems stemmed from the need to support Netscape 4.7, which only partially implements the CSS specification. This cost time while I tried approaches which didn't work and then looked for work-around solutions to achieve the desired effect. For example, Netscape 4.7 would render pages with text from adjacent columns overlapping unless the divisions which defined the columns had borders. Thus the <div> tags have styles which specify borders with border-style: none; which creates a border but doesn't display it.

The key problem here is the partial support which this version of Netscape has for CSS: older versions have no support, and so the style sheet has no effect on the layout, and it is relatively easy to ensure that the HTML without the style sheet makes sense.

Another problem was limiting the amount of white space around headings. On one page in particular there were lots of headings and only short paragraphs of text. Using the HTML <h1>, <h2>, <h3>, etc. tags left a lot of gaps and led to a page which was difficult to interpret. What I wanted to do was to have a vertical space above the headings but not below. I found no satisfactory way of achieving this using the standard heading tags which worked in Netscape 4.7 and didn't cause problems in other browsers. In the end, I created class styles which could be applied to a <span> to give the effect I wanted e.g.:

<p><span class="h2">Subheading</span><br />

Short paragraph</p>

This was not entirely satisfactory since any indication that the text was a heading is lost if the browser does not support CSS.

The Web site is now two years old and in that time I have started using two new browsers. I now use Mozilla as my main browser and was pleasantly surprised that the site looks better on that than on the browsers which I used while designing it. The second browser is an off-line Web page viewer which can be used to view pages on a PDA, and which makes a reasonable job of rendering the FAILTE Web site - a direct result of the accessibility of the pages, notably the decision not to use a table to control the layout of the page. This is the first time that the exhortation to write Web sites which are device-independent has been anything other than a theoretical possibility for me (remember WebTV?).

I think that it is now much easier to use XHTML and CSS since the support offered by authoring tools is now better. I would also reconsider whether Netscape 4.7 was still a major browser: my feeling is that while it still needs supporting in the sense that pages should be readable using it, I do not think that it is necessary to go to the effort of making pages look attractive. In particular I would not create styles which imitated <Hn> in order to modify the appearance of headings. I look forward to the time when it is possible to write a page using the standard HTML repertoire of tags without any styling so that it makes sense as formatted text, with clear headings, bullet lists etc., and then to use a style sheet to achieve the graphical effect which was desired.

Phil Barker

ICBL

MACS

Heriot-Watt University

Edinburgh

Email: philb@icbl.hw.ac.uk

URL: http://www.icbl.hw.ac.uk/~philb/

Citation Details:

"Standards and Accessibility Compliance for the FAILTE Project Web Site",

by Phil Barker, Heriot-Watt University.

Published by QA Focus, the JISC-funded

advisory service, on 4th November 2002.

Available at http://www.ukoln.ac.uk/qa-focus/documents/case-studies/case-study-02/

The FAILTE project was funded by the JISC's 5/99 programme.

In a number of surveys of JISC 5/99 project Web sites carried out in October / November 2002 the FAILTE Web site was found to (a) comply with XHTML standards, (b) comply with CSS standards and (c) comply with WAI AA accessibility guidelines.

Brian Kelly, QA Focus, 4 November 2002

Standards and Accessibility Compliance for the FAILTE Project Web Site,

Barker, P., QA Focus case study 02, UKOLN,

<http://www.ukoln.ac.uk/qa-focus/documents/case-studies/case-study-02/>

First published November 2002.

The RDN's Subject Portals Project (SPP) is funded under the JISC's DNER Development Programme. There were two proposals, SAD I (Subject Access to the DNER) and SAD II. The original SAD I proposal was part of a closed JISC DNER call, 'Enhancing JISC Services to take part in the DNER'. The SAD II proposal was successful under the JISC 5/99 call, 'Enhancing the DNER for Teaching and Learning'. The original project proposals are available [1].

The aim of the project is to improve the functionality of five of the RDN hub sites to develop them into subject portals. Subject portals are filters of Web content that present end users with a tailored view of the Web within a particular subject area. In order to design software tools that simultaneously satisfy the needs of a variety of different sites and make it easier for institutional portals to embed our services in the future, we are designing a series of Web "portlets". One portlet will be built for each of the key portal functions required, focussing initially on authorisation and authentication (account management); cross-searching; and user profiling; but including eventually a range of "additional services" such as news feeds, jobs information, and details of courses and conferences. The project is committed to using open source software wherever possible.

The hub sites involved in the SPP are EEVL (based at Heriot-Watt University, Edinburgh), SOSIG (University of Bristol), HUMBUL (University of Oxford), BIOME (University of Nottingham) and PSIGate (University of Manchester). The project is managed from UKOLN, based at the University of Bath, and the technical development is led from ILRT at the University of Bristol.

The fact that the SPP partners are geographically dispersed has posed a number of challenges. Since the objective of the SPP is the enhancement of the existing hub sites, hub representatives have naturally wished to be closely involved, both on the technical and on the content management sides of the project. At the last count, 38 people are involved in the project, devoting to it varying percentages of their time. But this means that physical meetings are difficult to organise and costly: since work began in December 1999 on the SAD II project, only two full project meetings have been held, with another planned for the beginning of 2003. Smaller physical meetings have been held by the technical developers at ILRT and the five hubs, but these again are extremely time-consuming.

We also faced the problem that many of the project partners had never worked together before. Not only was this a challenge on a social level, it was also likely to prove difficult to find where the skills and experience (and software preferences) of the developers overlapped. At the beginning of 2002, the then project manager, Julie Stuckes, commissioned a skills audit to discover the range and extent of these skills and where the disparities lay. It was also likely to be hard to keep track at the project centre of the different development activities taking place in order to produce a single product, and to reduce the risk of duplicating effort or, worse, producing incompatible work. We also thought it desirable to develop a method of describing the technical work involved in the project in a way easily understood by the content managers and by non-technical people outside the project.

We tackled the problem of communication across the project by the use of a project JISCmail mailing list [2]. The list is archived on the private version of the SPP Web site [3] where other internal documents are also posted.

The developers have their own list (spp-dev@dev.portal.ac.uk) and their own private Web site [4] which is stored in a versioning system (CVS - Concurrent Version System [5]) which gives any authenticated user the ability to update the site remotely.

In addition the developers hold weekly live chat meetings using IRC (Internet Relay Chat [6]) software (as shown in Figure 1), the transcripts of which are logged and archived on the developers' Web site.

Using IRC means the developers are able to keep each other informed of their activities in a relaxed and informal manner; this has aided closer working relationships.

Figure 1: Example IRC Session

As well as holding the developers' Web site, CVS also contains the project's source code and build environment. This takes the form of a central repository into and out of which developers check code remotely, ensuring that their local development environments are kept in step. A Web interface also provides the option of browsing the code, as well as reviewing change histories. Automatic e-mail notification alerts the developers to updates checked into the CVS repository, and all changes are also logged. This has proved an essential tool when co-ordinating distributed code development.

The other part of the software development infrastructure is a build environment that takes care of standard tasks, allowing the team members to concentrate on their coding. Using a combination of open-source tools (e.g. ant [7] and junit [8]) a system has been created that allows developers to build their code automatically, run tests against it, and then configure and deploy it into their test server. The build system will also check for new versions of third-party packages used by the project, updating them automatically if necessary. This system is also managed by CVS, so a developer can check the current project down from the central repository, build, configure and deploy it, and have it running in a matter of minutes.

Because of the widely dispersed team, the difference in software preferences and the mixed technical ability across the project, we looked around for a design process that would best record and standardise our requirements. UML (Unified Modelling Language [9]) is now a widely accepted standard for object-oriented modelling, and we chose it because we felt it produced a design that is clear and precise, so making it easy to understand for technical and non-technical minds alike. UML gave us a means to visualise and integrate use cases, integration diagrams and class models. Moreover, using UML modelling tools, it was possible to generate code from the model or update the model whenever the code was further developed.

Figure 2: Example UML Diagram

Finding UML software that had all the features needed was a problem: there are plenty of products available but none quite met all our requirements, especially when it came to synchronising the work being done by different authors. Eventually we opted to use the ICONIX process [10]. This is a simplified approach to UML modelling, which uses a core subset of diagrams. This enabled us to move from use cases to code quickly and efficiently using a minimum number of steps, thus giving the technical side of the project a manageable coding cycle.

Additional funding was obtained from the JISC in order to bring one of the authors of the ICONIX process (Doug Rosenberg) over from California to run a three-day UML training course. Although this course was specially designed for SPP, places were offered to other 5/99 projects in order to promote wider use of this methodology across the JISC community. Unfortunately, despite early interest, no other project was represented at the training, although Andy Powell, the technical co-ordinator for the RDN, attended the course. Additional funding was also received from the JISC to purchase licences for Rational Rose [11], which we had identified as the most effective software available to produce the design diagrams.

Finally, to provide greater structure to the project, a timetable of activities produced using MS Project is posted on the private project Web site and is kept continually up to date. A message is posted to the project mailing list to alert partners of any major changes to the timetable.

It would have been sensible for us to have adopted a process for software development at an earlier stage in the project: it was perhaps a need that we could have anticipated during the SAD I project phase. Also, it is worth noting from our experiences that getting the communications and technical support infrastructure in place is a job in itself, and should be built into the initial planning stage of any large and dispersed project.

Electronic communication is still no substitute for face-to-face meetings so the SPP development team continue to try to meet as regularly as possible. Time is inevitably a major problem wherever project partners have other work commitments: all the project partners based at the hub sites have to juggle SPP work which is for the project as a whole, with that which relates particularly to their own hub's adoption of the project's outcomes. Increasingly, as the project develops, less work will be required from the project "centre" and more at the hubs, leading to an eventual handover of the subject portal developments to the hubs for future management.

It is our plan to make use of UML diagrams in the final project documentation to describe the design and development process. They will offer a detailed explanation of our decision making throughout the project and will give future projects an insight into our methodology. Andy Powell was also so impressed with UML that he is planning to use it across development work for the RDN in the future.

The future development of the SPP beyond the end of the project is likely to be led by the technical development partners, for instance in the continued development of the portlets to enable them to be installed into alternative open source software platforms to make the technology as compatible with existing systems as possible. It is therefore greatly to the benefit of the project that they have become such an effective and close working team.

Ruth Martin, SPP Project Manager

UKOLN

University of Bath

Bath

BA2 7AY

Email: r.martin@ukoln.ac.uk

Jasper Tredgold, SPP Technical Co-ordinator

ILRT

University of Bristol

10 Berkeley Square

Bristol

BS8 1HH

Email: jasper.tredgold@bris.ac.uk

The SPP project (initially known as SAD I and then SAD II) was funded by the JISC's 5/99 programme.

Brian Kelly, QA Focus, 4 November 2002

Managing a Distributed Development Project: The Subject Portals Project,

Martin, R. and Tredgold, J., QA Focus case study 03, UKOLN,

<http://www.ukoln.ac.uk/qa-focus/documents/case-studies/case-study-03/>

First published 4th November 2002.

e-MapScholar, a JISC 5/99 funded project, aims to develop tools and learning and teaching materials to enhance and support the use of geo-spatial data currently available within tertiary education in learning and teaching, including digital map data available from the EDINA Digimap service. The project is developing:

The Disability Discrimination Act (1995) (DDA) aimed to end discrimination faced by many disabled people. While the DDA focused mainly on employment and access to goods and services, the Special Educational Needs and Disability Act (2001) (SENDA) amended the DDA to include education. The learning and teaching components of SENDA came into force in September 2002. SENDA has repercussions for all projects producing online learning and teaching materials for use in UK education because creating accessible materials is now a requirement of the JISC rather than a desirable project deliverable.

This case study describes how the e-MapScholar team has addressed accessibility in creating the user interfaces for the learning resource centre, case studies, content management system and virtual placement.

Figure 1: Screenshot of the case study index

Figure 2: Screenshot of the learning resource centre resource selection page

Figure 3: Screenshot of the content management system

Figure 4: Screenshot of the Virtual Placement (temporary - still under development)

An accessible Web site is a Web site that has been designed so that virtually everyone can navigate and understand the site. A Web site should be informative, easy to navigate, easy to access, quick to download and written in a valid hypertext mark up language. Designing accessible Web sites benefits all users, not just disabled users.

Under SENDA the e-MapScholar team must ensure that the project deliverables are accessible to users with disabilities including mobility, visual or audio impairments or cognitive/learning issues. These users may need to use specialist browsers (such as speech browsers) or configure their browser to enhance the usability of Web sites (e.g. change font sizes). It is also a requirement of the JISC funding that the 5/99 projects should reach at least priority 1 and 2 of the Web Accessibility Initiative (WAI) standards and where appropriate, priority 3.

The project has been split into four major phases:

While the CMS and learning units are inter-connected, the other components can exist separately from one another.

The project has employed a simple and consistent design in order to promote coherence and also to ease navigation of the site. Each part employs similar headings, navigation and design.

The basic Web design was developed within the context of the learning units and was then adapted for the case studies and virtual placement.

Summaries, learning objectives and pre-requisites are provided where necessary.

Links to help page are provided. The help page will eventually provide information on navigation, how to use the interactive tools and FAQ in the case of learning units and details of any plug-ins/software used in the case studies.

Font size will be set to be resizable in all browsers.

Verdana font has been used as this is considered the most legible font face.

CSS (Cascading style sheets) have been used to control the formatting of text; this cuts out the use of non-functional elements, which could interfere with text reader software.

Navigation has been used in a consistent manner.

All navigational links are in standard blue text.

All navigation links are text links apart from the learning unit progress bar, which is made up of clickable images. Each of these images has an ALT attribute providing a text alternative.

The progress bar provides a non-linear pathway through the learning units, as well as providing the user with an indication of their progress through the unit.

The link text used can be easily understood when out of context, e.g. "Back to Resource" rather than "click here".

'Prev' and 'Next' text links provide a simple linear pathway through both the learning units and the case studies.

All links are keyboard accessible for non-mouse users and can be reached by using the tab key.

Where possible the user is offered a choice of pathway through the materials e.g. the learning units can be viewed as a long scrolling page or page-by-page chunks.

Web safe colours have been used in the student interface.

The interface uses a white background ensuring maximum contrast between the black text, and blue navigational links.

Very few graphics have been used in the interface design to minimise download time.

Content graphics and the project logo have ALT attributes providing a textual description.

Long descriptions will be incorporated where necessary.

Graphics for layout will have empty (i.e. null, "") ALT attributes so they will be ignored by text reader software.

Tables have been used for layout purposes in a way that complies with W3C standards, i.e. structural mark-up has not been used for visual formatting.

HTML 4.0 standards have been complied with.

JavaScript has been used for the pop-up help menu, complying with JavaScript 1.2 standards.

The user is explicitly informed that a new window is to be opened.

The new window is set to be smaller so that it is easily recognised as a new page.

Layout is compatible with early version 4 browsers, including Netscape, Internet Explorer and Opera.

Specific software or plug-ins are required to view some of the case study materials e.g. GIS or AutoCAD software has been used in some of the case studies. Users will be advised of these and where possible will be guided to free viewers. Where possible the material will be provided in an alternative format such as screen shots, which can be saved as an image file.

Users are warned when non-HTML documents (e.g. PDF or MS Word) are included in the case studies and where possible documents are also provided in HTML format.
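
Some of the points above lend themselves to simple automated checks. The Python sketch below is illustrative only and is not the process used by the project: it uses the standard library HTML parser to report images that have no ALT attribute and links whose text cannot be understood out of context. The list of vague link phrases and the function names are assumptions made for the example.

```python
from html.parser import HTMLParser

# Link text that is assumed to be meaningless out of context.
VAGUE_LINK_TEXT = {"click here", "here", "more"}


class AccessibilityChecker(HTMLParser):
    """Collects simple accessibility problems while parsing a page."""

    def __init__(self):
        super().__init__()
        self.problems = []
        self._link_text = None  # collects text while inside an <a> element

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # An empty alt="" is acceptable (layout graphics); a missing one is not.
        if tag == "img" and "alt" not in attributes:
            source = attributes.get("src", "?")
            self.problems.append(f"img without alt attribute: {source}")
        elif tag == "a":
            self._link_text = []

    def handle_data(self, data):
        if self._link_text is not None:
            self._link_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._link_text is not None:
            text = " ".join("".join(self._link_text).split()).lower()
            if text in VAGUE_LINK_TEXT:
                self.problems.append(f"link text unclear out of context: {text}")
            self._link_text = None


def check_page(markup):
    """Return the list of problems found in one page of HTML."""
    checker = AccessibilityChecker()
    checker.feed(markup)
    checker.close()
    return checker.problems
```

A check like this does not replace testing with real users or with tools such as those provided by the WAI, but it can catch regressions cheaply when templates are updated.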

Problems experienced have generally been minor.

Throughout the project accessibility has been thought of as an integral part of the project and this approach has generally worked well. Use of templates and CSS has helped in minimising workload when a problem has been noted and the materials updated.

It is important that time and thought go into the planning stage of the project as it is easier and less time consuming to adopt accessible Web design at an early stage of the project than it is to retrospectively adapt features to make them accessible.

User feedback from evaluations, user workshops and demonstrations has been extremely useful in identifying potential problems.

Deborah Kent

EDINA National Data Centre

St Helens Office

ICT Centre

St Helens College

Water St

ST HELENS

WA10 1PZ

Email: dkent@ed.ac.uk

Lynne Robertson

Geography

School of Earth, Environmental and Geographical Sciences

The University of Edinburgh

Drummond Street

Edinburgh EH8 9XP

Email: lr@geo.ed.ac.uk

Creating Accessible Learning And Teaching Resources: The e-MapScholar Experience,

Kent, D. and Robertson, L., QA Focus case study 04, UKOLN,

<http://www.ukoln.ac.uk/qa-focus/documents/case-studies/case-study-04/>

First published November 2002.

The e-MapScholar project [1] aims to develop tools and learning and teaching materials to enhance and support the use of geo-spatial data currently available within tertiary education in learning and teaching, including digital map data available from the EDINA Digimap service [2]. These tools and learning materials will be delivered over the Web and will be accessed from a repository of materials branded the "Learning Resource Centre".

The project is funded by the JISC [3] to form part of the DNER, now called the Information Environment [4] and works closely with other projects funded under the same programme.

From the outset of the e-MapScholar Project it was apparent that various standards needed to be agreed upon and conformed to. The two core issues were:

In reaching a broad community of users, a compromise needed to be found between delivery of service to 'low spec' PCs and high-end functionality that would support interactive tools. A range of technical discussions at the outset of the project discussed these implications in the context of choice of standards and technical evaluation criteria.

The various technical elements and their respective standards were specified at the start of the project. These included the use of Java 1.1 for the interactive applets (see Figure 1), and JavaScript 1.2 and HTML 4.01 for the user interface. All learning material content would be stored in XML files which would be transformed using XSLT and Java. Similarly, authors of learning materials would also be provided with a set of guidelines for producing the content.

Figure 1: Screen shots of interactive tools produced by e-MapScholar

All software components were written in Java 1.1. All code has been, and remains, fully compliant with this version. Though Java 1.1 does not have as much functionality as later versions (e.g. 1.2 and 1.3), it makes the service compatible with a broader choice of browsers and operating systems. Java code was also written to control the conversion of XML into HTML.
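
The general idea of holding content as XML and generating HTML from it can be illustrated as follows. This sketch is written in Python and walks the XML tree directly, rather than using the XSLT and Java 1.1 code of the project itself. The element names (unit, title, objective, para) are invented for the example and are not taken from the e-MapScholar schema.

```python
import html
import xml.etree.ElementTree as ET


def unit_to_html(xml_text):
    """Convert a (hypothetical) learning unit document into an HTML fragment."""
    unit = ET.fromstring(xml_text)
    title = unit.findtext("title", default="")
    parts = [f"<h1>{html.escape(title)}</h1>"]

    # Learning objectives become a bullet list.
    objectives = unit.findall("objective")
    if objectives:
        parts.append("<ul>")
        for objective in objectives:
            parts.append(f"<li>{html.escape(objective.text or '')}</li>")
        parts.append("</ul>")

    # Each paragraph of content becomes an HTML paragraph.
    for para in unit.findall("para"):
        parts.append(f"<p>{html.escape(para.text or '')}</p>")
    return "\n".join(parts)
```

Keeping the content separate from its presentation in this way means that a change to the page design only requires a change to the conversion step, not to every learning unit.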

HTML 4.01 is used for the layout of the learning resource centre pages. Using HTML standards ensures that the site is accessible to a wider range of browsers, and is transformed more quickly and easily. The use of client-side JavaScript has been kept to a minimum and is only used for pop-up window items such as the help menu and map legends. These are all written in JavaScript 1.2. Using this version of JavaScript again reflected a compromise between control of graphics and levels of interaction, and compatibility with browsers and operating systems.

Authors providing content to the learning units have been issued with a document containing guidance notes. This document aims to standardise the content of the learning units by providing procedures on how various elements of the units should be written. These elements include guidelines on the structuring of a unit, wording of learning objectives and quiz tools, and the acceptable formats for images and photographs. Complying with these guidelines will ensure that the pedagogical aspect of the units meets teaching requirements and is suitable for students across a range of abilities.

The imposition of all of the above standards increased the chances of interoperability between the various units and modules comprising the service.

The use of Java 1.1 should ensure that users do not have to download the plug-in, as the majority of browsers come packaged with this version of the Java Virtual Machine (or higher) installed. However, last year Microsoft launched their latest version of Internet Explorer (6.0) without a Java VM. This means that users of IE 6 will have to download a Java plug-in to run any Java applets, regardless of what version of Java the applets have been created with.

Web statistics [5] (illustrated in Figure 2) show that Internet Explorer is the most widely used Web browser, with IE 6 already accounting for a considerable portion of this usage. This means that 48% of Internet users will have to download the Java plug-in in order to run any Java applets.

Figure 2: Pie chart of browser statistics generated using data from [6]

With many users upgrading to the new service in the future, one might question the point of using Java 1.1 when the majority of Internet users will still have to download the plug-in. Ideally, standards should be employed with the majority of users in mind.

At the outset we chose Java 1.1 since it was incorporated in Internet Explorer and the majority of other browsers, and would not involve users having to download the plug-in. It is estimated that 48% of web users are using IE6, all of whom will need to download the Java plug-in. Our ambition of 'use without plug-in' has somewhat evaporated, and an argument could now be made that we could ask users to download other plug-ins, notably 'Flash'. Ironically IE6 users would not need to download Flash player as it comes ready packaged in IE6.

If Flash were used (in conjunction with Java 1.1) it would allow greater levels of interaction with the user, and easier development of visualisation tools. However, this would have required access to Flash developers which the project does not have at this stage. Currently no decision has been made on whether it is worth taking advantage of the additional functionality afforded by Flash player, but it does open a door, which was previously thought to be firmly closed.

Various software engineering problems have arisen throughout the project. However, broad agreement on the use of standards, coupled with clarification of system requirements and requirement specifications, has helped to keep the project manageable from a software engineering perspective.

Lynne Robertson

Geography

School of Earth, Environmental and Geographical Sciences

The University of Edinburgh

Drummond Street

Edinburgh EH8 9XP

Email: lr@geo.ed.ac.uk

For QA Focus use.

Standards for e-learning: The e-MapScholar Experience,

Robertson, L., QA Focus case study 05, UKOLN,

<http://www.ukoln.ac.uk/qa-focus/documents/case-studies/case-study-05/>

The document was published in November 2002.

The NMAP project [1] was funded under the JISC 05/99 call for proposals to create the UK's gateway to high quality Internet resources for nurses, midwives and the allied health professions.

NMAP is part of the BIOME Service, the health and life science component of the national Resource Discovery Network (RDN), and closely integrated with the existing OMNI gateway. Internet resources relevant to the NMAP target audiences are identified and evaluated using the BIOME Evaluation Guidelines. If resources meet the criteria they are described and indexed and included in the database.

NMAP is a partnership led by the University of Nottingham with the University of Sheffield and Royal College of Nursing (RCN). Participation has also been encouraged from several professional bodies representing practitioners in these areas. The NMAP team have also been closely involved with the professional portals of the National electronic Library for Health (NeLH).

The NMAP service went live in April 2001 with 500 records. The service was actively promoted in various journals, newsletters, etc. and presentations or demonstrations were given at various conferences and meetings. Extensive use was made of electronic communication, including mailing lists and newsgroups, for promotion.

Work in the second year of the project included the creation of two VTS tutorials: the Internet for Nursing, Midwifery and Health Visiting, and the Internet for Allied Health.

As one of the indicators of success, or otherwise, in reaching the target group we wanted to know how often the NMAP service was being used, and ideally who the users were and how they were using it.

The idea was to attempt to ensure we were meeting their needs, and also gain data which would help us to obtain further funding for the continuation of the service after the end of project funding.

There seems to be little standardisation of the ways in which this sort of data is collected or reported, and although we could monitor our own Web server, the use of caching and proxy servers makes it very difficult to analyse how many times the information contained within NMAP is being used or where the users are coming from.

These difficulties in the collection and reporting of usage data have been recognised elsewhere, particularly by publishers of electronic journals who may be charging for access. An international group has now been set up to consider these issues under the title of project COUNTER [2] which has issued a "Code of Practice" on Web usage statistics. In addition QA Focus has published a briefing document on this subject [3].

We took a variety of approaches to try to collect some meaningful data. The first and most obvious of these is log files from the server which were produced monthly and gave a mass of data including:

A small section of one of the log files showing the general summary for November 2002 can be seen below. Note that figures in parentheses refer to the 7-day period ending 30-Nov-2002 23:59.

Successful requests: 162,910 (39,771)

Average successful requests per day: 5,430 (5,681)

Successful requests for pages: 162,222 (39,619)

Average successful requests for pages per day: 5,407 (5,659)

Failed requests: 2,042 (402)

Redirected requests: 16,514 (3,679)

Distinct files requested: 3,395 (3,217)

Unwanted logfile entries: 51,131

Data transferred: 6.786 Gbytes (1.727 Gbytes)

Average data transferred per day: 231.653 Mbytes (252.701 Mbytes)
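As a cross-check on the summary above, the per-day averages can be reproduced from the totals. The sketch below assumes the averages are truncated to whole numbers, which is an inference from the figures rather than documented behaviour of the log analysis tool.

```python
def per_day(total: int, days: int) -> int:
    """Average per day, truncated to a whole number (an assumption that fits the report)."""
    return total // days


NOVEMBER_DAYS = 30  # the monthly figures cover November 2002
WEEK_DAYS = 7       # the figures in parentheses cover the last 7 days

monthly_requests = per_day(162_910, NOVEMBER_DAYS)
weekly_requests = per_day(39_771, WEEK_DAYS)
monthly_pages = per_day(162_222, NOVEMBER_DAYS)
weekly_pages = per_day(39_619, WEEK_DAYS)
```

All four results agree with the averages printed in the log summary.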

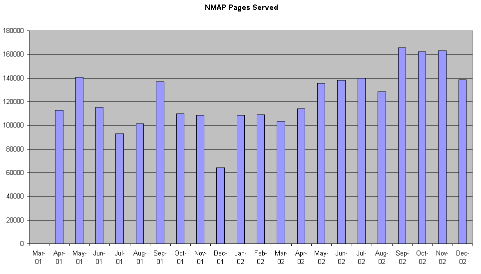

A graph of the pages served can be seen in Figure 1.

Figure 1: Pages served per month

The log files also provided some interesting data on the geographical locations and services used by those accessing the NMAP service.

Listing domains, sorted by the amount of traffic, example from December 2002, showing those over 1%.

| Requests | % bytes | Domain |
|---|---|---|
| 48237 | 31.59% | .com (Commercial) |
| 40533 | 28.49% | [unresolved numerical addresses] |
| 32325 | 24.75% | .uk (United Kingdom) |
| 14360 | 8.52% | ac.uk |
| 8670 | 7.29% | nhs.uk |
| 8811 | 7.76% | .net (Network) |
| 1511 | 1.15% | .edu (USA Educational) |
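The grouping used in a domain report of this kind can be sketched as follows. This is an illustration only, not the log analyser's actual logic: the host names are made up, and ac.uk and nhs.uk are counted as their own buckets here for simplicity.

```python
import ipaddress
from collections import Counter


def domain_bucket(host: str) -> str:
    """Return the reporting bucket for a client host name."""
    try:
        # Hosts that never resolved to a name appear as bare IP addresses.
        ipaddress.ip_address(host)
        return "[unresolved numerical addresses]"
    except ValueError:
        pass
    labels = host.lower().rstrip(".").split(".")
    if len(labels) >= 2 and labels[-1] == "uk" and labels[-2] in ("ac", "nhs"):
        return f"{labels[-2]}.uk"
    return "." + labels[-1]


# Hypothetical client hosts, for illustration only.
hosts = [
    "pc1.shef.ac.uk",
    "proxy.trust.nhs.uk",
    "192.0.2.17",
    "dialup.example.com",
    "www.example.co.uk",
]
counts = Counter(domain_bucket(h) for h in hosts)
```

The sketch also makes the limitation visible: a `.com` or `.net` bucket says nothing about the country or sector of the user behind it.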

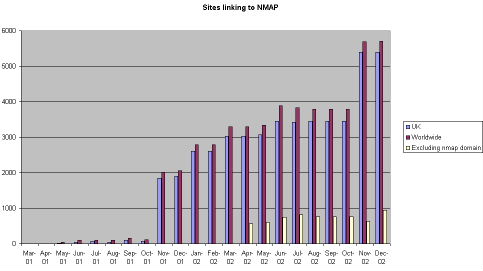

A second approach was to see how many other sites were linking to the NMAP front page URL. AltaVista was used as it probably had the largest collection back in 2000 although this has now been overtaken by Google. A search was conducted each month using the syntax: link:http://nmap.ac.uk and the results can be seen in Figure 2.

Figure 2 - Number of sites linking to NMAP (according to AltaVista)

The free version of the service provided by InternetSeer [4] was also used. This service checks a URL every hour and will send an email to one or more email addresses if the site is unavailable. It also provides a weekly summary by email which, along with the advertising, includes a report in the format:

========================================

Weekly Summary Report

========================================

http://nmap.ac.uk

Total Outages: 0.00

Total time on error: 00:00

Percent Uptime: 100.0

Average Connect time*: 0.13

Outages- the number of times we were unable to access this URL

Time on Error- the total time this URL was not available (hr:min)

% Uptime- the percentage this URL was available for the day

Connect Time- the average time in seconds to connect to this URL
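The figures in such a report can be derived from a series of periodic checks. The sketch below is not InternetSeer's implementation; it assumes one check per hour and treats a run of consecutive failed checks as a single outage, both of which are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Summary:
    outages: int
    minutes_on_error: int
    percent_uptime: float


def summarise(checks: list[bool], interval_minutes: int = 60) -> Summary:
    """Summarise a series of availability checks (True = site reachable)."""
    failed = sum(1 for ok in checks if not ok)
    # Count an outage each time the site goes from reachable to unreachable.
    outages = sum(
        1 for prev, cur in zip([True] + checks, checks) if prev and not cur
    )
    uptime = 100.0 * (len(checks) - failed) / len(checks) if checks else 0.0
    return Summary(outages, failed * interval_minutes, round(uptime, 1))


week = [True] * (24 * 7)          # one check per hour for a week, all successful
clean = summarise(week)

week_with_gap = week.copy()
week_with_gap[10:13] = [False, False, False]   # three consecutive failed checks
gap = summarise(week_with_gap)
```

With hourly checks, an outage shorter than an hour can go unnoticed, so the reported uptime is an upper bound rather than an exact measurement.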

During the second year of the project we also conducted an online questionnaire, with 671 users providing data about themselves, why they used NMAP and their thoughts on its usefulness or otherwise. However, this is beyond the scope of this case study and is being reported elsewhere.

Although these techniques provided some useful trend data about the usage of the NMAP service, there are a number of inaccuracies, due partly to the nature of the Internet and partly to some of the tools used.

The server log files are produced monthly (a couple of days in arrears) and initially included requests from the robots used by search engines; these were later removed from the figures. The resolution of the domains was also a problem, with 28% listed as "unresolved numerical addresses", which gives no indication of where the user is accessing from. In addition it is not possible to tell whether .net or .com users are in the UK or elsewhere. The number of accesses from .uk domains was encouraging, specifically those from .ac.uk and .nhs.uk domains. It is also likely (from data gathered in our user questionnaire) that many of the .net or .com users are students or staff in higher or further education, or NHS staff, who are accessing the NMAP service via a commercial ISP from home.

In addition during the first part of 2002 we wrote two tutorials for the RDN Virtual Training Suite (VTS) [5], which were hosted on the BIOME server and showed up in the number of accesses. These were moved in the later part of 2002 to the server at ILRT in Bristol and therefore no longer appear in the log files. It has not yet been possible to get access figures for the tutorials.

The "caching" of pages by ISPs and within .ac.uk and .nhs.uk servers does mean faster access for users, but probably means that the number of users is undercounted in the log files.

The use of AltaVista "reverse lookup" to find out who was linking to the NMAP domain was also problematic. This database is infrequently updated, which accounts for the jumps seen in Figure 2. When we saw a large increase in November 2001 we initially thought this was due to our publicity activity, and only later realised that the figure included internal links within the NMAP domain. From April 2002 we therefore collected a second figure which excluded these internal links.

None of these techniques can measure the number of times the records from within NMAP are being used at the BIOME or RDN levels of JISC services. In addition we have not been able to get regular data on the number of searches from within the NeLH professional portals which include an RDNi search box [6] to NMAP.

In the light of our experience with the NMAP project we recommend that there is a clear strategy to attempt to measure usage and gain some sort of profile of users of any similar service.

I would definitely use Google rather than AltaVista and would try to specify what is needed from log files at the outset. Other services have used user registration and therefore profiles and cookies to track usage patterns and all of these are worthy of consideration.

Rod Ward

Lecturer, School of Nursing and Midwifery,

University of Sheffield

Winter St.

Sheffield

S3 7ND

Email: Rod.Ward@sheffield.ac.uk

Also via the BIOME Office:

Greenfield Medical Library

Queen's Medical Centre,

Nottingham NG7 2UH

Email: rw@biome.ac.uk

Gathering Usage Statistics and Performance Indicators: The NMAP Experience,

Ward, R., QA Focus case study 06, UKOLN,

<http://www.ukoln.ac.uk/qa-focus/documents/case-studies/case-study-06/>

This document was published on 8th January 2003.

Artworld [1] is a consortium project funded by JISC under the 5/99 funding round. The consortium consists of The Sainsbury Centre for Visual Arts (SCVA) at The University of East Anglia (UEA) and the Oriental Museum at the University of Durham.

The main deliverable for the project is its Web site which will include a combined catalogue of parts of the two collections and a set of teaching resources.

Object images are being captured using digital photography at both sites and some scanning at SCVA. Object data is being researched at both sites independently and is input to concurrent Microsoft Access databases. Image data is captured programmatically from within the Access database. Object and image data are exported from the two independent databases and checked and imported into a Postgres database for use within the catalogue on the Web site.

There are four teaching resources either in development or under discussion. These are African Art and Aesthetics, Egyptian Art and Museology, An Introduction to Chinese Art and Japanese Art. These resources are being developed by the Department of World Art Studies and Museology at UEA, the Department of Archaeology, University of Durham, and East Asian Studies, University of Durham, respectively. The Japanese module is currently under negotiation. These resources are stored as simple XML files ready for publication to the Web.

The target audience in the first instance are undergraduate art history, anthropology and archaeology students. However, we have tried to ensure that the underlying material is structured in such a way that re-use at a variety of levels, 16 plus to post graduate is a real possibility. We hope to implement this during the final year of the project by ensuring conformance with IMS specifications.

In the early days of the project we were trying very hard to find an IT solution that would not only fulfill the various JISC requirements but would also be relatively inexpensive. After a considerable amount of time researching various possibilities we selected Apache's Cocoon system as our Web publishing engine. To help us implement this we contracted a local internet applications provider, Luminas [2].

The Cocoon publishing framework gives us an inexpensive solution in that the software is free so we can focus our resources on development.

One area that we had inadvertently missed during early planning was how we represent copyright for the images whilst providing some level of protection. We considered using watermarking; however, this would have entailed re-processing a considerable number of images at a time when we had little resource to spare.

This issue came up in conversation with Andrew Savory of Luminas, as early notification that all of the images already transferred to the server and in use through Cocoon would need to be replaced. As we talked about the issues Andrew presented a possible solution: why not insert copyright notices into the images "on the fly"? This would be done using a technology called SVG (Scalable Vector Graphics). SVG lets the server respond to a user request for an image by combining the image with the copyright statement referenced from the database, and presenting the user with this new combined image and copyright statement.

We of course asked Luminas to proceed with this solution. The only potential stumbling block was how we represent copyright from the two institutions in a unified system. The database was based on the VADS/VRA data schema so we were already indicating the originating institution in the database. It was then a relatively simple task to include a new field containing the relevant copyright statements.

It should be noted that a composite JPEG (or PNG, GIF or PDF) image is sent to the end user - there is no requirement for the end user's browser to support the SVG format. The model for this is illustrated in Figure 1.

Figure 1: Process For Creating Dynamic Images
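The composition step can be sketched as follows. This is a minimal illustration of building an SVG document that places a copyright notice over an image; it is not the project's Cocoon pipeline, the file path and notice text are hypothetical, and the rasterising of the SVG to JPEG on the server is a separate step that is not shown.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape, quoteattr


def copyright_svg(image_href: str, width: int, height: int, notice: str) -> str:
    """Build an SVG document that overlays a copyright notice on an image."""
    return (
        '<svg xmlns="http://www.w3.org/2000/svg" '
        'xmlns:xlink="http://www.w3.org/1999/xlink" '
        f'width="{width}" height="{height}">'
        # The original image fills the whole canvas.
        f'<image xlink:href={quoteattr(image_href)} width="{width}" height="{height}"/>'
        # The notice is drawn near the bottom-left corner, on top of the image.
        f'<text x="10" y="{height - 10}" font-size="14" fill="white">{escape(notice)}</text>'
        "</svg>"
    )


# Hypothetical image path and copyright statement, for illustration only.
svg = copyright_svg("objects/example-0001.jpg", 640, 480, "© Example Institution")

# Parsing the result confirms that the generated document is well-formed XML.
root = ET.fromstring(svg)
```

Because the notice is taken from a database field at request time, the stored images themselves never need to be re-processed when the wording changes.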

Although in this case we ended up with an excellent solution, there are a number of lessons that can be derived from the sequence of events. Firstly, the benefits of detailed workflow planning in the digitisation process cannot be overstated. If a reasonable solution (such as watermarking) had been planned into the processes from the start then a number of additional costs would not have been incurred. These costs include project staff time spent discussing solutions to the problem and consultancy costs to implement a new solution. However, there are positive aspects of these events that should be noted. Maintaining a contingency fund ensures that unexpected additional costs can be met. Close relations with contractors, with a free flow of information, can help potential solutions to be found. Following a standard data schema for database construction can help to ensure important data isn't missed. In this case it expedited the solution.

Cocoon [3] is an XML Publishing Framework that allows the possibility of including logic in XML files. It is provided through the Apache software foundation.

SVG (Scalable Vector Graphics) [4] [5] is a non-proprietary language for describing two-dimensional graphics in XML. It allows for three types of objects: vector graphic shapes, images and text. Features and functions include: grouping, styling, combining, transformations, nested transformations, clipping paths, templates, filter effects and alpha masks.

Paul Child

ARTWORLD Project Manager

Sainsbury Centre for Visual Arts

University of East Anglia

Norwich NR4 7TJ

Tel: 01603 456 161

Fax: 01603 259 401

Email: p.child AT uea.ac.uk

Using SVG in the Artworld Project,

Child, P., QA Focus case study 07, UKOLN,

<http://www.ukoln.ac.uk/qa-focus/documents/case-studies/case-study-07/>

The document was published in January 2003.

The Crafts Study Centre (CSC) [1], established in 1970, has an international standing as a unique collection and archive of twentieth century British Crafts. Included in its collection are textiles, ceramics, calligraphy and wood. Makers represented in the collection include the leading figures of the twentieth century crafts such as Bernard Leach, Lucie Rie and Hans Coper in ceramics; Ethel Mairet, Phyllis Barron and Dorothy Larcher, Edward Johnston, Irene Wellington, Ernest Gimson and Sidney Barnsley. The objects in the collection are supported by a large archive that includes makers' diaries, documents, photographs and craftspeoples' working notes.

The Crafts Study Centre Digitisation Project [2] has been funded by the JISC to digitise 4,000 images of the collection and archive and to produce six learning and teaching modules. Although the resource has been funded to deliver to the higher education community, the project will reach a wide audience and will be of value to researchers, enthusiasts, schools and the wider museum-visiting public. The Digitisation Project has coincided with an important moment in the CSC's future. In 2000 it moved from the Holborne Museum Bath, to the Surrey Institute of Art & Design, University College, Farnham, where a purpose-built museum with exhibition areas and full study facilities, is scheduled to open in spring 2004.

The decision to create 'born digital' data was therefore crucial to the success not only of the project, but also in terms of the reusability of the resource. The high-quality resolutions that have resulted from 'born digital' images will have a multiplicity of uses. Not only will users of the resource on the Internet be able to obtain a sense of the scope of the CSC collection and get in-depth knowledge from the six learning and teaching modules that are being authored, but the relatively large file sizes have produced TIFF files that can be used and consulted off-line for other purposes.

These TIFF files contain amazing details of some of the objects photographed from the collection and it will be possible for researchers and students to use this resource to obtain new insights into, for example, the techniques used by makers. These TIFF files will be available on site for consultation when the new CSC opens in 2004. In addition to this, the high-quality print output of these images means that they can be used in printed and published material to disseminate the project and to contribute to building the CSC's profile via exhibition catalogues, books and related material.

The project team were faced with a range of challenges from the outset. Many of these were based on the issues common to other digital projects, such as the development of a database to hold the associated records that would be interoperable with the server, in our case the Visual Arts Data Service (VADS), and the need to adopt appropriate metadata standards. Visual Resources Association (VRA) version 3.0 descriptions were used for the image fields. Less straightforward was the deployment of metadata for record descriptions. We aimed for best practice by merging Dublin Core metadata standards with those of the Museum Documentation Association (mda). The end product is a series of data fields that serve firstly to make the database compatible with the VADS mapping schema, and secondly to realise the full potential of the resource as a source of information. A materials and technique field, for example, has been included to allow for the input of data about how a maker produced a piece. Users of the resource, especially students and researchers in the history of art and design, will be able to appreciate how an object in the collection was made. In some records, for example, whole 'recipes' have been included to demonstrate how a pot or textile was produced.

Other issues covered the building of terminology controls, so essential for searching databases and for achieving consistency. We consulted the Getty Art and Architecture Thesaurus (AAT) and other thesauri such as the MDA's wordhord, which acts as a portal to thesauri developed by other museums or museum working groups. This was sometimes to no avail because often a word simply did not exist and we had to rely on terminology developed in-house by curators cataloguing the CSC collection, and have the confidence to go with decisions made on this basis. Moreover, the attempt to standardise this kind of specialist collection can sometimes compromise the richness of vocabulary used to describe it.

Other lessons learnt have included the need to establish written image file naming conventions. Ideally, all image file names should tie in with the object and the associated record. This system works well until sub-numbering systems are encountered. Problems arise because different curators, when cataloguing different areas of the collection, have used different systems, such as letters of the alphabet, decimal numbers and Roman numerals. This means that if the file name is to match the number marked on the object, it becomes impossible to achieve a standardised approach. The lesson here was that we did not establish a written convention early enough in the project, with the result that agreements on how certain types of image file names should be written before being copied onto CD were forgotten, and more than one system was used.
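One way a written convention could be made unambiguous is to record which sub-numbering scheme a curator used and convert every sub-number to the same padded form for the file name. The sketch below is purely illustrative and is not the convention adopted by the project; the accession number format, the separator and the `.tif` extension are all assumptions.

```python
ROMAN = {"i": 1, "v": 5, "x": 10, "l": 50, "c": 100}


def roman_to_int(numeral: str) -> int:
    """Convert a Roman numeral such as 'iv' to an integer."""
    values = [ROMAN[ch] for ch in numeral.lower()]
    total = 0
    for i, value in enumerate(values):
        # Subtract when a smaller numeral precedes a larger one (e.g. iv = 4).
        if i + 1 < len(values) and value < values[i + 1]:
            total -= value
        else:
            total += value
    return total


def sub_number(value: str, scheme: str) -> int:
    """Convert a sub-number to an integer, given the scheme the curator used."""
    if scheme == "decimal":
        return int(value)
    if scheme == "letter":
        return ord(value.lower()) - ord("a") + 1
    if scheme == "roman":
        return roman_to_int(value)
    raise ValueError(f"unknown scheme: {scheme}")


def image_filename(accession: str, sub: str, scheme: str) -> str:
    """Build a file name with a zero-padded, scheme-independent sub-number."""
    return f"{accession}_{sub_number(sub, scheme):03d}.tif"
```

The scheme has to be stated explicitly because a mark such as "c" or "v" is ambiguous on its own: it could be a letter or a Roman numeral. The number as marked on the object would still be kept in the catalogue record.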

The value of documenting all the processes of the project cannot be overemphasised. This is especially true of records kept relating to items selected for digitisation. A running list has been kept detailing the storage location, accession number, description of the item, when it was photographed and when returned to storage. This has provided an audit trail for every item digitised. A similar method has been adopted with the creation of the learning and teaching modules, and this has enhanced the process of working with authors commissioned to write the modules.

Last, but just as important, has been the creation of QA forms on the database, based on suggestions presented by the Technical Advisory Service for Images (TASI) at the JISC Evaluation workshop in April 2002. This has established a framework for checking the quality and accuracy of an image and its associated metadata, from the moment that an object is selected for digitisation through to the finished product. Divided into two sections, dealing respectively with image and record metadata, this has been developed into an editing tool by the project's documentation officer. The QA forms allow most of the data fields to be checked off by two people before the image and record are signed off. There are comment boxes for any other details, such as faults relating to the image. A post-project fault report/action taken box has been included to allow for the reporting of faults once the project has gone live, and to allow for any item to re-enter the system.

The bank of images created by the Digitisation Project will be of enormous importance to the CSC, not only in terms of widening access to the CSC collection, but in helping to forge its identity when it opens its doors as a new museum in 2004 at the Surrey Institute of Art & Design, University College.

Jean Vacher

Digitisation Project Officer

Crafts Study Centre

Surrey Institute of Art & Design, University College

Crafts Study Centre Digitisation Project - and Why 'Born Digital',

Vacher, J., QA Focus case study 08, UKOLN,

<http://www.ukoln.ac.uk/qa-focus/documents/case-studies/case-study-08/>

Information about the Crafts Study Centre (CSC) [1] and the Crafts Study Centre Digitisation Project [2] is given in another case study [3].

At the outset of The Crafts Study Centre (CSC) Digitisation Project extensive research was undertaken by the project photographer to determine the most appropriate method of image capture. Taking into account the requirements of the project as regards to production costs, image quality and image usage the merits of employing either traditional image capture or digital image capture were carefully considered.

The clear conclusion to this research was that digital image capture creating born digital image data via digital camera provided the best solution to meet the project objectives. The main reasons for reaching this conclusion are shown below:

Items from the CSC collection are identified by members of the project team and passed to the photographer for digitisation. Once the item has been placed in position and the appropriate lighting arranged, it is photographed using a large format monorail camera (Cambo) hosting a Betterlight digital scanning back capable of producing image file sizes of up to 137 megabytes without interpolation.

Initially a prescan is made for evaluation by the photographer. Any necessary adjustments to exposure, tone, colour, etc. are then made via the camera software and a full scan is carried out, with the resulting digital image data being automatically transferred to the photographer's image editing program, in this case Photoshop 6.

Final adjustments can then be made, if required, and the digital image is then saved and written onto CDR for onward delivery to the project database.

The main challenges in setting up this system were mostly related to issues regarding colour management, appropriate image file sizes, and standardisation wherever possible.

To this end a period of trialling was conducted by the photographer at the start of the image digitisation process using a cross section of subject matter from the CSC collection.

Identifying appropriate file sizes for use within the project, and areas of the digital imaging process to which a level of standardisation could be applied, was fairly straightforward. Colour management issues proved slightly more problematic, but were duly resolved by careful cross-platform (Macintosh/MS Windows) adjustments and standardisation within the CSC, and by the use of external colour management devices.

David Westwood

Project Photographer

Digitisation Project Officer

Crafts Study Centre

Surrey Institute of Art & Design, University College

Image Digitisation Strategy and Technique: Crafts Study Centre Digitisation Project,

Westwood, D., QA Focus case study 09, UKOLN,

<http://www.ukoln.ac.uk/qa-focus/documents/case-studies/case-study-09/>

The document was published in January 2003.

Funded by the Higher Education Funding Council for England under strand three of the initiative 'Improving Provision for Students with Disabilities', the aim of the DEMOS Project was to develop an online learning package aimed at academic staff and to examine the issues faced by disabled students in higher education. The project was a collaboration between the four universities in the Manchester area - the University of Salford [2], the University of Manchester [3], the Manchester Metropolitan University [4] and UMIST [5].

At the start of the project the purpose of the Web site was still unclear, which made it difficult to plan the information structure of the site. Of course, it would serve as a medium to disseminate the project findings, research reports, case studies... but for months the design and the information architecture of this site seemed to be in a neverending state of change.

In the early stage of the project virtual learning environments, such as WebCT, were tested and deemed unsuitable for delivering the course material, due to the fact that they did not satisfy the requirements for accessibility.

Figure 1: The Demos Web Site

At this point it was decided that the Web site should carry the course modules. This changed the focus of the site from delivering information about the progress of the project to delivering the online course material.

In the end we envisioned a publicly accessible resource that people can use in their own time and at their own pace. They can work through the modules in the linear fashion they were written in or they can skip through via the table of contents available on every page. There are also FAQ pages, which point to specific chapters.

Many academic institutions have already added links to the DEMOS materials on their own disability or staff development pages.

To ignore accessibility would have been a strange choice for a site that wants to teach people about disability. Accessibility was therefore the main criterion in the selection of a Web developer.

I have been a Web designer since 1998 and specialised in accessibility from the very beginning. For me it is a matter of ethics. Now it is also the law.

The challenge here was to recreate, at least partially, the feeling of a learning environment with its linear structure, and to incorporate interactivity in the form of quizzes and other learning activities without the use of inaccessible techniques for the creation of dynamic content.

Accessibility techniques were applied from the beginning. But the site also represents an evolution in my own development as a Web designer; it has always reflected my own state of knowledge. Where in the beginning accessibility meant eradicating the font tag, it now means standard-compliant code and tableless CSS layout.

This site was designed in compliance with the latest standards developed by the World Wide Web Consortium (W3C) [6] and using the latest accessibility techniques [7] as recommended by the Web Accessibility Initiative (WAI) [8].

In December 2001 the code base of the site was switched over to XHTML 1.0 Transitional. In November 2002 the site was further improved by changing it to a CSS layout, which is used to position elements on the page without the use of tables. The only layout table left is the one holding the header: logo, search box and top navigation icons.

Stylesheets are also used for all presentational markup and a separate print stylesheet has been supplied.
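
As an illustration of this set-up, a minimal XHTML 1.0 Transitional page with separate screen and print stylesheets might look like the sketch below. The file names `screen.css` and `print.css` and the `id` values are assumptions made for the example, not the names used on the DEMOS site.

```html
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN"
    "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">
<html xmlns="http://www.w3.org/1999/xhtml" xml:lang="en" lang="en">
<head>
  <title>Example page</title>
  <meta http-equiv="Content-Type" content="text/html; charset=utf-8" />
  <!-- Hypothetical file names: one stylesheet for screen, one for print -->
  <link rel="stylesheet" type="text/css" href="screen.css" media="screen" />
  <link rel="stylesheet" type="text/css" href="print.css" media="print" />
</head>
<body>
  <!-- Positioned by CSS rather than by a layout table -->
  <div id="navigation">...</div>
  <div id="content">...</div>
</body>
</html>
```

Because the markup carries no presentational attributes, a user agent that ignores both stylesheets still receives the navigation and content in source order.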

The code was validated using the W3C Validation Services [9].

With the advent of standard-compliant (version 6 and 7) browsers, the Web developer community started pushing for the adoption of the W3C guidelines as standard practice by all Web designers. Now that version 3 and 4 browsers with all their proprietary mark-up were about to be consigned to the scrap heap of tech history, it was finally possible to apply all the techniques the W3C was recommending. The makers of user agents had at last started listening to the W3C too and were making browsers that correctly rendered pages designed according to standards. (It turns out things weren't all that rosy but that's the topic for another essay.)

Standards are about accessibility, or, as the W3C phrases it, 'universal design'. They ensure that universal access is possible, i.e. that the information contained on a Web page can be accessed using

The most important reason for designing according to standards is that it gives the user control over how a Web page is presented. The user should be able to increase font sizes, apply preferred colours, change the layout, view headers in a logical structure, etc.

This control can be provided by the Web designer by:

On the DEMOS site, all presentational styles are specified in stylesheets. The site 'transforms gracefully' when stylesheets are ignored by the user agent, which means that the contents of a page linearises logically. The user has control over background and link colours via the browser preferences and can increase or decrease font sizes.
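
A minimal sketch of how a stylesheet can leave font size under the user's control is shown below. The selectors and values are illustrative assumptions, not rules taken from the DEMOS stylesheets; the point is only that relative units (percentages and ems) scale with the browser's text-size setting.

```html
<style type="text/css">
  /* Relative units follow the text size the user has chosen in the browser */
  body { font-size: 100%; }
  h1   { font-size: 150%; }
  p    { font-size: 1em; line-height: 1.4; }
</style>
```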

The DEMOS Guide to Accessible Web Design contains a chapter on User Control [10], which describes how these changes can be applied in various browsers.

(The links below lead to pages on the DEMOS site, more precisely to the DEMOS Guide to Accessible Web Design [11].)

Some of the techniques used:

More information and details: Accessibility techniques used on the DEMOS site [12] (listed by WAI checkpoints).

Web developers sometimes believe that accessibility means providing a separate text-only or low-graphics version for the blind. First of all, I have always been in the camp that believes there should be only one version of a site and that it should be accessible.

"Don't design an alternative text-only version of the site: disabled people are not second class citizens..." (Antonio Volpon, evolt.org [13])

Secondly, accessibility benefits not only blind people [14]. To be truly inclusive we have to consider people with a variety of disabilities: people with a range of visual, auditory, physical or cognitive disabilities, people with learning disabilities, and not to forget people with colour blindness, senior citizens, speakers of foreign languages, et cetera.

Surely not all of them are part of the target audience, but you never know, and applying all available accessibility techniques consistently does not take that much more effort.

We tried to provide a satisfactory experience for everyone, providing user control, keyboard access, icons and colour to loosen things up, and whitespace and headers to break up text into digestible chunks. And we encourage people to provide feedback, especially if they experience any problems.

To ensure accessibility the site was tested across a variety of user agents and on different platforms. A number of screenshots from these tests [15] can be found at the DEMOS site.

The site has also been tested using the Bobby [16] and the Wave [17] accessibility checkers. It is AAA compliant, which means that it meets the checkpoints at all three WAI priority levels.

One of the last things we finally solved to our satisfaction was the problem of creating interactive quizzes and learning activities for the course modules without the use of inaccessible techniques. Many of the techniques for the creation of dynamic and multimedia content (JavaScript, Java, Flash...) are not accessible.

Eventually we discovered that PHP, a scripting language, was perfect for the job. PHP is processed server-side and delivers simple HTML pages to the browser without the need for plug-ins or JavaScript being enabled.
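
The kind of page this approach delivers can be sketched as a plain XHTML form. The script name `quiz.php`, the field names and the question itself are hypothetical and are not taken from the DEMOS course modules; the sketch only shows that the browser needs nothing beyond ordinary form support.

```html
<!-- Submitted to a hypothetical server-side script; no JavaScript or plug-in needed -->
<form action="quiz.php" method="post">
  <fieldset>
    <legend>Question 1: Which element marks up the top-level heading of a page?</legend>
    <p>
      <input type="radio" name="q1" id="q1a" value="a" />
      <label for="q1a">&lt;h1&gt;</label>
    </p>
    <p>
      <input type="radio" name="q1" id="q1b" value="b" />
      <label for="q1b">&lt;font&gt;</label>
    </p>
  </fieldset>
  <p><input type="submit" value="Check my answer" /></p>
</form>
```

The server-side script would read the submitted value and return a new HTML page containing the feedback, so the interaction also works with a keyboard, a screen reader or a text-only browser.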

As mentioned before, the Web site started without a clear focus and without a clear structure. Therefore there wasn't much planning and structured development. In the first months content was added as it was created (in the beginning mainly information about the project) and the site structure grew organically. This caused some problems later when main sections had to be renamed and content restructured. From the Web development point of view this site has been a lesson in building expandability into early versions of Web site architecture.

Since there was so much uncertainty about the information architecture in the beginning, the navigation system is not the best it could be. The site grew organically and navigation items were added as needed. The right-hand navigation was added much later when the site had grown and required more detailed navigation than the main section navigation at the top of the page underneath the logo and strapline.

But the right-hand navigation is mainly sub-navigation, section navigation, which might be confusing at times. At the same time, however, it always presents a handy table of contents to the section the visitor is in. This was especially useful in the course modules.

The breadcrumb navigation at the top of the main content was also added at a later date to make it easier for the visitor to know where they are in the subsections of the site.

Netscape 4, already mentioned in Phil Barker's report on the FAILTE Project Web Site [18], was also my biggest problem.

Netscape 4 users still represent a consistent 12% of visitors in the UK academic environment (or at least of the two academic sites I am responsible for). Since this is the target audience for the DEMOS site, Netscape 4 quirks (i.e. its lack of support for standards) had to be taken into account.

Netscape 4 understands just enough CSS to make a real mess of it. Older browsers (e.g. version 3 browsers) simply ignore stylesheets and display pages in a simpler fashion with presentational extras stripped, while standard-compliant browsers (version 6 and 7) display pages coded according to standards correctly. Netscape 4 is stuck right between those two scenarios, which is the reason why the DEMOS site used tables for layout for a long time.

Tables are not really a huge accessibility problem if used sparingly and wisely. Jeffrey Zeldman wrote in August 2002 in 'Table Layout, Revisited' [19]:

"Table layouts are harder to maintain and somewhat less forward compatible than CSS layouts. But the combination of simple tables, sophisticated CSS for modern browsers, and basic CSS for old ones has enabled us to produce marketable work that validates - work that is accessible in every sense of the word."

Tables might be accessible these days because screenreader software has become more intelligent, but standards compliance was my aim and layout tables are not part of that.

Luckily techniques have emerged that allow us to deal with the Netscape 4 quirks.

One option is to prevent Netscape 4 from detecting the stylesheet, which means it would deliver the contents in the same way as a text-only browser, linearised: header, navigation, content and footer following each other, with no columns, colours or font specifications. But 12% of the audience is too large a group to be shown a site that has lost its 'looks'. The site still had to look good in Netscape 4.

The other option is to use a trick to get Netscape 4 to ignore some of the CSS instructions [20]: deliver a basic stylesheet to Netscape 4 and an additional stylesheet with extra instructions to modern browsers. This required a lot of tweaking and consumed an enormous amount of time, but only because I was new to CSS layout. I have converted a number of other sites to CSS layout in the meantime, getting better at it every time.
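
One widely used trick of this kind relies on the fact that Netscape 4 does not support the CSS `@import` rule. The sketch below assumes that technique and uses hypothetical file names (`basic.css`, `modern.css`); it is not necessarily the exact method described in [20] or used on the DEMOS site.

```html
<!-- Basic styles: loaded by Netscape 4 and by modern browsers alike -->
<link rel="stylesheet" type="text/css" href="basic.css" />

<!-- Netscape 4 does not support @import, so it never loads this file -->
<style type="text/css">
  @import url("modern.css");
</style>
```

Rules in `modern.css` can then add to or override the basic rules for browsers that handle CSS layout properly.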

The DEMOS site now looks good in modern browsers, looks OK but not terrific in Netscape 4, and simply linearises logically in browsers older than that and in text-only browsers.

There are still a few issues that need looking at, e.g. the accessibility of input forms needs improving (something I'm currently working on) and the structural mark-up needs improving so that headers are used in logical order starting with <h1>.
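
A logical header order means that heading levels reflect the outline of the page and no level is skipped. The headings in this sketch are placeholders, and the indentation is only there to make the nesting visible:

```html
<h1>Page title</h1>
  <h2>First section</h2>
    <h3>Subsection of the first section</h3>
  <h2>Second section</h2>
```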

There are also a few clashes of forms with the CSS layout. All forms used on the DEMOS site are still in the old table layout. I haven't had the time to figure out what the problem is.

Eventually I also plan to move the code to XHTML Strict and get rid of the remains of deprecated markup [21], which XHTML Transitional, the doctype [22] used at the moment, still forgives.
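
To illustrate the difference, the sketch below shows a piece of deprecated presentational markup that XHTML 1.0 Transitional accepts, followed by an equivalent that is valid in XHTML 1.0 Strict. The class name `notice` and the colour value are assumptions made for the example.

```html
<!-- Accepted by XHTML 1.0 Transitional: deprecated presentational markup -->
<p align="center"><font color="#990000">Important notice</font></p>

<!-- Valid in XHTML 1.0 Strict: the presentation moves to the stylesheet -->
<p class="notice">Important notice</p>

<!-- The matching rule, which would normally live in the site's stylesheet -->
<style type="text/css">
  .notice { text-align: center; color: #990000; }
</style>
```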

Of course it is important to keep the materials produced over the last two and a half years accessible to the public after the funding has run out. This will happen at the end of March 2003. This site will then become part of the Access Summit Web site (at time of writing still under construction). Access Summit is the Joint Universities Disability Resource Centre that was set up in 1997 to develop provision for and support students with disabilities in higher education in Manchester and Salford.

We currently don't know whether we will be able to keep the domain name, so keep in mind that the URL of the DEMOS site might change. I will do my best to make it possible to find the site easily.

DEMOS Web site

<http://www.demos.ac.uk/>

Jarmin.com Guide to Accessible Web Design:

<http://jarmin.com/accessibility/>

A collation of tips, guidelines and resources by the author of this case study. Focuses on techniques but includes chapters on barriers to access, evaluation, legislation, usability, writing for the Web and more. Includes a huge resources section <http://jarmin.com/accessibility/resources/> where you can find links to W3C guidelines, accessibility and usability articles, disability statistics, browser resources, validation tools, etc. This section also contains a list of resources that helped me understand the power of stylesheets <http://jarmin.com/accessibility/resources/css_layout.html>.

DEMOS Accessibility Guide:

<http://jarmin.com/demos/access/>

Consists of the Techniques section from the above Guide to Accessible Web Design <http://jarmin.com/accessibility/>, plus extra information on accessibility techniques used on the DEMOS site <http://jarmin.com/demos/access/demos.html> (listed by WAI checkpoints) and a number of demonstrations <http://jarmin.com/demos/access/demos06.html> of how the site looks under a variety of circumstances.

Please contact me if you have feedback, suggestions or questions about the DEMOS site, my design choices, accessible web design, web standards or the new location of the site.

Iris Manhold

14 February 2003

Email: iris@manhold.net

URL: http://jarmin.com/

Citation Details:

"Standards and Accessibility Compliance for the DEMOS Project Web Site", by Iris Manhold. Published by QA Focus, the JISC-funded advisory service, on 3 March 2002. Available at http://www.ukoln.ac.uk/qa-focus/documents/case-studies/case-study-10/

This case study describes a project funded by HEFCE (the Higher Education Funding Council for England). Although the project has not been funded by the JISC, the approaches described in the case study may be of interest to JISC projects.

The DEMOS Web site was moved from its original location (<http://www.demos.ac.uk/>) in March 2003. It is now available at <http://jarmin.com/demos/>.

It was noticed that the definition of XHTML and CSS given in the <acronym> element was incorrect. This was fixed on 7 October 2003.

Standards and Accessibility Compliance for the DEMOS Project Web Site, Manhold, I., QA Focus case study 10, UKOLN, <http://www.ukoln.ac.uk/qa-focus/documents/case-studies/case-study-10/>

The document was published in February 2003.

The Non-Visual Access to the Digital Library (NoVA) project was concerned with countering exclusion from access to information, which can all too easily occur when individuals do not have so-called 'normal' vision. Usability tests undertaken for the NoVA project provided an insight into the types of problems faced by users. Interestingly, although the focus of the project was on the information seeking behaviour of blind and visually impaired people (generally using assistive technologies), the control group of sighted users also highlighted usability problems. This showed that although awareness of Web accessibility is increasing, all types of user could be faced with navigational problems, which reinforces the importance of involving a variety of different users in any design and development project.