-------------------------------------------------------------

Welcome to the fourth issue of Cultivate Interactive!

Since our last publication there have been a fair number of changes in the DIGICULT world. In March fifteen new projects from the last call for proposals were added to the 'IST projects in the cultural heritage area' Web page [1]. Of these new additions, the ARION and AMICITIA projects are both covered in this issue, and more projects are to come. Cultivate Interactive is also pleased to welcome the recently established Cultivate CEE, which will be carrying on the good work of Cultivate throughout Eastern Europe. The kick-off meeting for Cultivate CEE is taking place in Torun, Poland during the launch weekend of Cultivate Interactive issue 4, so we wish them luck. Hopefully the establishment of Cultivate CEE will mean more articles from Eastern Europe in the future.

This issue, as usual, has lots to offer. Our first feature article is an important piece on the new dot-museum top level domain that was approved by the Internet Corporation for Assigned Names and Numbers (ICANN) in November last year. It is written by Cary Karp, Director of Internet Strategy and Technology at the Swedish Museum of Natural History and President of the Museum Domain Management Association, and considers what the implementation of dot-museum will mean to those working in memory institutions in Europe.

Emma Wagner of the European Commission's Translation Service contributes an interesting article on the origins of the Euro-English that plagues the EC and offers some practical advice to those struggling to fight the Eurospeak disease.

Roger Smith, the founder and director of Global Museum, a highly successful international Webzine, offers us some insight into how he has established a museum-based compendium site. Global Museum boasts current readership in 88 countries and maintains a weekly mailbase of more than six thousand museum professionals; something for Cultivate Interactive to aspire to!

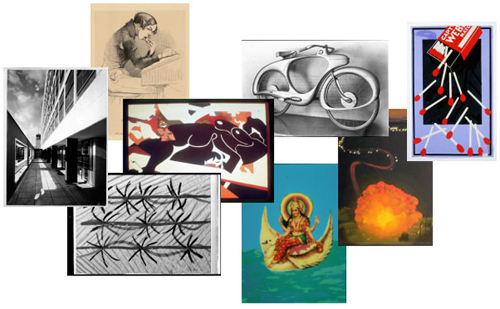

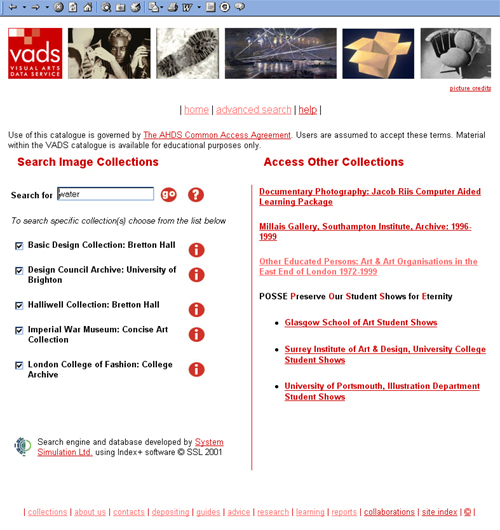

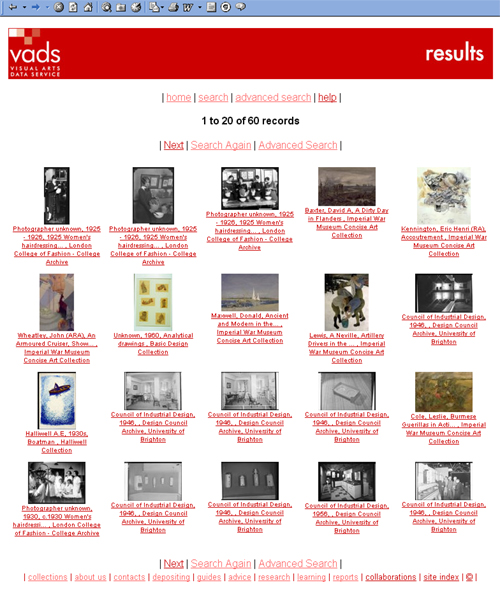

Other feature articles include an outline by Rosa Botterill of the work carried out by the European Museums' Information Institute, a consortium of key organisations in the cultural heritage field; an introduction by Phill Purdy to the Visual Arts Data Service, an organisation that has a role in the preservation of high quality digital materials for Higher and Further Education; and a report by Steve Glangé of LIFT (Linking Innovation Finance and Technology) on how you can turn your Research and Development results into successful ventures.

In the regular column section, this issue's National Node column has been written by Pascale Van Dinter of the Belgian national node. The At the Event column covers the Internet Librarian International conference held in London in March and the Open Archives Initiative (OAI) open meeting held in Berlin.

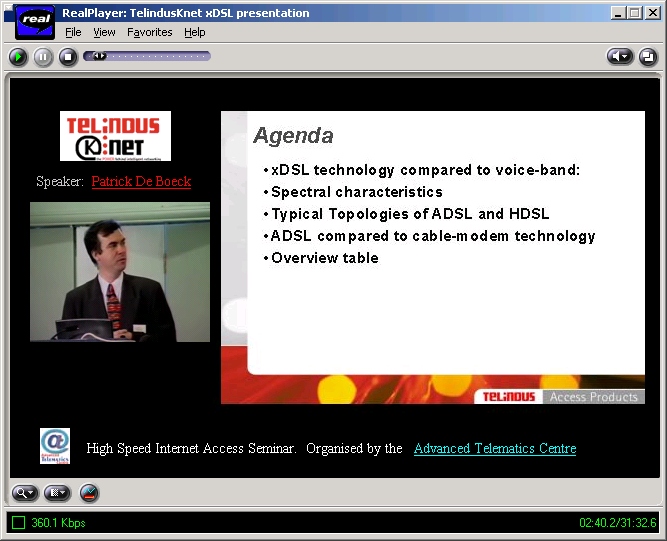

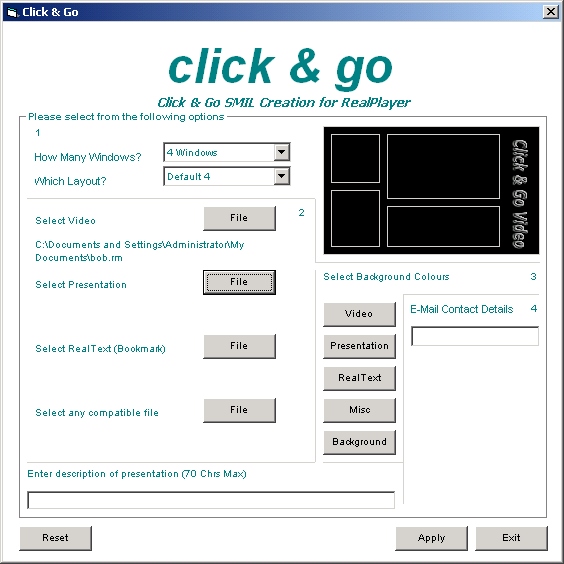

We are also pleased to introduce a new regular column called 'Praxis' (thanks to Philip Hunter, editor of Ariadne, who contributed the name). Praxis aims to give advice on how to put various applications and theories into practice. The first two offerings give some insight into streaming video. Streaming video is the art of sending moving images in a compressed form over the Internet. The benefit is that the user does not have to wait to download a large file before seeing the video or hearing the sound; the media is sent in a continuous stream. Neil Francis and David Cunningham's article offers an introduction to the technologies available and some of the problems encountered, while Jim Strom provides a number of examples and case studies to learn from.

Finally, in the metadata column, continuing our coverage of digitisation projects, Elhanan Adler and Orly Simon talk about the Jewish National and University Library's Ketubbot project, which aims to put a unique collection of some 1200 ketubbot (Jewish marriage contracts) online.

And of course don't forget our 'Spot the European City' Competition, which gets more popular with every issue, even as photos for it get more difficult to find!

Thanks to everyone who carried on reading and supporting Cultivate Interactive during my absence. I was lucky enough to spend a month in Australia bushwalking round the Northern Territory and snorkelling on the Great Barrier Reef!

Marieke Napier (Editor)

-------------------------------------------------------------

By Catherine Houstis and Spyros Lalis - May 2001

Catherine Houstis and Spyros Lalis describe the work of Project Arion. ARION, an advanced lightweight architecture for accessing scientific collections, aims to provide a new generation of Digital Library services for the searching and retrieval of digital scientific collections that reside within research and consultancy organisations.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Scientific data and programs have long been treated as ‘private’ resources to be used only by the people or organisation who created them. This ‘private ownership’, however, usually arises from inaction rather than from policies restricting data reuse. Data and models are collected or developed as part of a scientific study, but once the study ends it is not a priori clear what should happen to them. Literally thousands of scientific collections and data sets are lost at the end of the study that produced them. This tremendously valuable information is lost because of non-existent cataloguing (metadata), or becomes unreachable because of the heterogeneity of the software and hardware on which it is stored, poor documentation and so on, all at great expense to the taxpayer. Research is very expensive, as it requires specialised expertise to carry out; a poor return on this investment can be prevented if scientific collections are shared and reused. This is the premise of a digital library: making such resources electronically available to a large number of (possibly remote) users.

Internet-based techniques have been developed to make scientific resources available to the wider scientific community and improve this situation. However, even state of the art systems typically come with four main flaws, which make them unattractive both to resource providers and to users. First, the procedure for exporting scientific data resources remains complicated, involving programming effort and expertise that is alien to the data providers. Second, users are offered a simple search interface with little guidance on how to track down or create specific information. Third, once a resource is found there is little support for flexible reuse, i.e. one can either use the resource as it is or not at all. Thus, dynamic combination of several resources belonging to different providers to create new resources is virtually impossible. Last but not least, current solutions do not work with the existing practices and financing methods of the organisations that produce data, and as such they are regarded as a ‘burden’ rather than as an ‘assistance’.

ARION, a recently funded international research and development project, is aiming to provide a new generation of Digital Library services for the searching and retrieval of digital scientific collections that reside within research and consultancy organisations. This functionality will be achieved via an appropriate distributed system that can be easily installed and administered by the various participants.

ARION advances the findings of previous studies in areas such as management of networked scientific repositories, metacomputing, intelligent information integration and digital libraries. ARION is a federated open system and is being developed in association with national data providers, scientific researchers and SMEs to ensure that the project meets their needs. The ARION consortium is composed of research organisations: the Institute of Computer Science-Foundation for Research and Technology (GR) as the leader, the National Technical University of Athens (GR), the Consiglio Nazionale delle Ricerche CNR-IMA (IT), the Commission of the European Communities, Joint Research Centre (IT), the University of Crete (GR); and the SMEs HR Wallingford Ltd (UK), the Oceanographic Company of Norway ASA and the Enterprise LSE Limited (UK). The ARION project started in January 2001 and will run for three years.

The rapid development of distributed computing infrastructures and the growth of the Internet and the WWW have revolutionised the management, processing, and dissemination of scientific information. Repositories that have traditionally evolved in isolation are now connected to global networks. In addition, with common data exchange formats, standard database access interfaces, and the information mediation and brokering technologies developed in the context of the Digital Libraries Initiatives and Intelligent Information Integration (I3), emerging data repositories can be accessed without knowledge of their internal syntax and storage structure. Furthermore, search engines are enabling users to locate distributed resources by indexing appropriate metadata descriptions. Open communication architectures provide support for language-independent remote invocation of legacy code, thereby paving the way towards a globally distributed library of scientific programs. Finally, workflow management systems exist for coordinating and monitoring the execution of scientific computations. Standardisation and interoperability are pursued by the W3C.

This technology has been so far successfully used to address system, syntactic, and structural interoperability of distributed heterogeneous scientific repositories [1]. However, interoperability at the semantic level is needed to overcome the problem of identifying the scientific resources that can be combined in a meaningful way to produce new data [2]. This is of key importance for providing widely diversified user groups with advanced, value-added information services.

Another body of work addresses the integration of heterogeneous information over a number of networked distributed repositories [3]. In this context the aim has been to build global environmental systems. Integration has also benefited from workflow technology, which was originally used in business processes.

A Digital Library of scientific collections is a new and unprecedented concept. It encompasses the characteristics of a traditional library and in addition, it creates new content on line. In traditional libraries humans create new knowledge after having used the library content. In the case of scientific content (and in the ARION digital library), new content is created continuously upon user demand. Any scientific area is represented not only by means of multimedia document information but also in terms of data sets, programs and tools which can produce new information, interactively, either by analyzing data or by predicting physical phenomena, in terms of simulation of physical processes. Data analysis can be statistical analysis or extraction of information from satellite pictures for instance, or data acquisition from databases belonging to the library content via data mining tools, etc.

Another difference from traditional libraries is that the content of such a library is not held within the walls of a building, nor can it be stored using a single centralised computer system. Scientific objects such as programs are in general not portable, and in addition they may need specialised software or hardware to execute. In ARION they reside on the servers of the provider’s organisation and are remotely invoked via the ARION system. Thus, the content of the digital library is distributed over the providers’ servers. In addition, the library documents not only the scientific object descriptions (metadata), but also scientific expertise in terms of data production rules (workflows), to make their reuse possible for the users. Visualisation tools, statistical tools, and any other tools scientists use with their data sets and programs are supplied by the provider’s organisation and are used to convey information to the users. A WWW interface makes the library services accessible from anywhere via a web browser and an Internet connection. Thus, it provides an international collaborative environment, which adds tremendous value for a worldwide community of users.

ARION has the potential to become an international forum for scientific content and to lead the effort of creating digital libraries of scientific objects worldwide. To the best of our knowledge, the generalisation of ideas presented in ARION has not been put forward previously. Previous work has addressed the management of scientific information for specific scientific areas, and as such has in all cases dealt with a much simpler or very specific context. In the case of ARION, the scalability of the problem, the generality of the content, and the automated and thus attractive ways of adding new content are dealt with within the architecture.

The ARION Digital Library provides repository providers with lightweight and straightforward tools to automate the publication and export of their repository collections. It provides the user with an automated, fast and accurate system to locate, retrieve and visualise data on demand. In scientific collections, the existence of scientific programs provides the possibility of computing data on demand by making complex combinations of data and programs that exist in various heterogeneous, geographically distributed and autonomous collections. The ARION advanced architecture supports these functions. Support is based on the coupling of ontologies with metadata and workflows, in order to address the needs of multiple scientific collections.

This functionality yields several technical innovations, which are indicated below:

ARION promotes advanced features of Digital Library technology and, in addition, features that take into account the content and characteristics of scientific collections. Specifically, it is based on an advanced middleware architecture that seamlessly integrates Digital Library, Intelligent Information Integration, and Workflow technologies. It comprises three main modules: the Metadata Search Engine, the Knowledge Base System, and the Workflow Runtime System, which co-operate to provide the user with the desired functionality. The architecture is shown in Figure 1. The functionality of each component is briefly described below.

The Metadata Search Engine is responsible for locating external resources, either data sets or programs. It may also retrieve complementary information stored in the repositories, e.g. user documentation on the available resources. The Search Engine accepts metadata queries on the properties of resources and returns a list of metadata descriptions and references. References point to repository wrappers, which provide an access and invocation interface to the underlying legacy systems (repositories) where the data and programs reside.

The Knowledge Base System accepts queries regarding the availability of ontology concepts. It generates and returns the corresponding data productions based on the available resources and the constraints imposed by the ontology rules. These productions provide all the information that is needed to construct workflow specifications. The KBS regularly communicates with the Metadata Search Engine to update its database.

The Workflow Runtime System monitors and coordinates the execution of workflows. It executes each intermediate step of a workflow specification, accessing data and invoking programs through the repository wrappers. Checkpoint and recovery techniques are employed to enhance fault tolerance.
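To make the division of labour between these components more concrete, the following is a minimal sketch in Python. It is an illustration only and not the ARION implementation: the class names, the `wrapper` callable standing in for a repository wrapper, the metadata keys and the example resources are all assumptions made for this sketch. It shows a metadata query returning references to wrappers, and a runtime that executes workflow steps through those wrappers while recording a checkpoint after each step.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Resource:
    """A metadata description plus a reference to a repository wrapper (hypothetical)."""
    name: str
    properties: Dict[str, str]
    wrapper: Callable[[object], object]  # stands in for the access/invocation interface


class MetadataSearchEngine:
    """Answers metadata queries on the properties of registered resources."""

    def __init__(self, resources: List[Resource]):
        self._resources = resources

    def query(self, **props: str) -> List[Resource]:
        # A resource matches when every requested property has the requested value.
        return [r for r in self._resources
                if all(r.properties.get(k) == v for k, v in props.items())]


@dataclass
class WorkflowRuntime:
    """Runs workflow steps in order, checkpointing each intermediate result."""
    checkpoints: Dict[int, object] = field(default_factory=dict)

    def run(self, steps: List[Resource], initial: object = None) -> object:
        value = initial
        for i, step in enumerate(steps):
            if i in self.checkpoints:
                # Step already completed in an earlier attempt: reuse its result.
                value = self.checkpoints[i]
                continue
            value = step.wrapper(value)   # access data or invoke a program
            self.checkpoints[i] = value   # record progress for recovery
        return value


# Illustrative resources: a data set and a program that consumes it.
engine = MetadataSearchEngine([
    Resource("wave_heights", {"kind": "dataset", "topic": "waves"},
             lambda _: [1.0, 2.0, 3.0]),
    Resource("mean", {"kind": "program", "topic": "statistics"},
             lambda xs: sum(xs) / len(xs)),
])
data = engine.query(kind="dataset", topic="waves")[0]
prog = engine.query(kind="program", topic="statistics")[0]
result = WorkflowRuntime().run([data, prog])
```

In this sketch the workflow is a fixed list of steps; in the architecture described above, the step sequence would instead be derived from the data productions returned by the Knowledge Base System.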

In addition, a user interface designed to work in a web browser on the user's computer (with Internet access) is reached via a web address and provides access to the ARION system. A number of tools are being developed for the provider in order to publish and install scientific collections into a scientific digital library in a provider-friendly manner. These tools are part of the ARION architecture.

This architecture ensures the scalability and extensibility required in large, scientific collections systems. It allows operationally autonomous and geographically dispersed organisations to selectively “export” their resources. Publishing/installing a new resource with the system requires merely supplying appropriate metadata/ontology, workflow descriptions and wrappers.

| Figure 1: A middleware architecture for distributed scientific repositories. The system consists of interoperable Knowledge Base, Metadata Search, and Workflow Runtime components. |

To enhance performance and fault tolerance, the Metadata Search Engine can be distributed across several machines. Also, several knowledge units adhering to different domains of scientific knowledge can be plugged into the Knowledge Base System to support a wide variety of scientific applications and user groups.

Efficient execution and administration of the system are achieved via special data and program export wizards for wrapper generation, automated use of filters to transform data between different formats, and use of mobile code that is downloaded and used at the user’s request.

The ARION architecture has been presented. It forms a library of data sets, programs and tools, all of which are components of scientific collections. This library is a federation of heterogeneous systems, which interoperate to provide data services to its users. These services are access to data sets that are stored in the system archives, or dynamic production of data sets when they can be produced on the fly, upon user demand. Retrieval occurs via special tools to either visualise or statistically analyse the data sets.

Due to its modularity, participants may install only parts of the system on their premises, depending on their needs and limitations, both organisational and commercial. A provider may install the entire system architecture in order to organise an in-house collection, or various scaled-down versions of it, such as only the search engine or the metadata storage. The system architecture supports different versions with a variety of capabilities at the provider’s end, in addition to a system-wide server featuring all architectural components for everyone’s use. This important architectural feature of the ARION system addresses the scalability problem of global (Internet accessible) digital libraries of scientific collections.

This work is supported by the EU 5th Framework Programme (IST-2000-25289).

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Catherine Houstis

Institute of Computer Science-Foundation for Research and Technology

Heraklion Greece

Catherine Houstis received her Ph.D. from the Electrical Engineering Department of Purdue University, USA, in 1977. In 1978 she was a Postdoctoral associate at the EE Dept. of Purdue University. In 1979 she joined the National Cash Register (NCR) corporation as a research scientist in the Advanced System Research and Development department. From 1980 to 1983 Catherine worked as an assistant professor at the Electrical and Computer Engineering Department of the University of South Carolina. In 1984 she became an associate professor. From 1984 to 1987 she was a visiting associate professor at the EE Dept. of Purdue University.

In 1987 Catherine joined the Computer Science Department of the University of Crete. She was also a research associate at the Institute of Computer Science of FORTH. She is now a full professor and the Leader of the Distributed Systems Laboratory at the Institute of Computer Science FORTH. She has led and participated in research projects funded by NSF in the USA, and ESPRIT, AIM, RACE, Telematics and Digital Libraries for scientific data collections in the EC. Her main research interests are in Internet based scientific information systems, Metacomputing, commercial aspects of scientific information systems and performance evaluation of global distributed systems.

Spyros Lalis

Institute of Computer Science-Foundation for Research and Technology

Heraklion Greece

Spyros Lalis received a Diploma in Computer Engineering and a doctorate in Technical Sciences from the Swiss Federal Institute of Technology Zurich, in 1989 and 1994 respectively. Since 1997 he has been a Research Associate of the Institute for Computer Science at the Foundation for Research and Technology Hellas and an Adjunct Professor of Computer Science at the University of Crete.

Currently Spyros is Visiting Assistant Professor at the Computer and Communications Engineering department at the University of Thessaly. He is actively involved in the design of distributed systems, two of them developed through funded European projects. He is also leading a European research project in the area of ubiquitous computing. His interests include Programming Languages and Systems, Software Engineering, Distributed and Parallel Systems, Metacomputing, Ubiquitous and Pervasive Computing, and Economies of Electronic Services.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

For citation purposes:

Houstis, C and Lalis, S. "ARION: An Advanced Lightweight Architecture for accessing Scientific Collections", Cultivate Interactive, issue 4, 7 May 2001

URL: <http://www.cultivate-int.org/issue4/arion/>

-------------------------------------------------------------

By Stephan Schneider - May 2001

Stephan Schneider reports on the 'Asset Management Integration of Cultural Heritage In The Interexchange of Archives' (AMICITIA) project (IST1999-20215). AMICITIA is a demonstrator project within the key action III (Multimedia content and tools), action line III.2.4: “Digital preservation of cultural heritage”. AMICITIA started on 1st of October, 2000 and will run for 2 years.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Audiovisual archives are one of the most valuable cultural heritage resources of modern times. By providing living images, music and speech they preserve a picture of life in the past. Like other archives, such as library or art collections, they are in need of protection. Modern archiving not only serves future generations but also allows us to turn the unique wealth of European cultural heritage into value for today's people.

The AMICITIA project [1] aims to build the base for a continued and viable digital preservation of, and access to, television and video content. It will achieve this through the construction of various vital components enabling a digital archiving system to serve all required roles in ingest, management, access and distribution of audiovisual material. Special focus has been placed on enabling remote, multilingual access to archival content stored in a distributed environment. The system is being designed to serve both the needs of professional users (regarding preservation, quality, access flexibility and usability) and the needs of public access (regarding simplicity of use, security and availability). As a demonstration project AMICITIA aims at getting its results into practical, marketable use as fast as possible.

Partners have been chosen to both develop innovative solutions and to test these solutions in real world environments. This has resulted in the consortium consisting of two groups:

i. A technology providing and researching group: Tecmath AG (DE, [2]) and Joanneum Research (AT, [3]).

ii. A strong user group including broadcasting companies and audiovisual archives: the British Broadcasting Corporation (BBC) (UK, [4]), the Austrian Broadcasting Corporation (ORF) (AT, [5]) and the South-West Broadcasting Corporation (SWR) (DE, [6]). One new partner from the archives sector is currently being integrated into the consortium.

The work is distributed in a straightforward way: tecmath AG acts as coordinator and provides the basic technology for a content management system. Joanneum Research, an Austrian research institution, develops technologies for distributed access (see the Distributed Access section).

The user partners together analyse the current workflow, identifying weaknesses and developing a new idealised workflow using digital technologies. This work is led by the SWR. The user partners then define requirements for the new components to be developed in close collaboration with the technology partners. Some of the user partners have responsibility for several components: e.g. the ORF is responsible for the rights management system and for the storytelling interface (see the Access and Exchange Mechanisms section) and contributes to the multilingual thesaurus (see the Distributed Access section). Extensive testing of the components integrated by tecmath AG is also a main task of the user partners. The BBC will coordinate the evaluation process.

Most of the AMICITIA project partners were involved in the research project EUROMEDIA (ESPRIT 20636, [7]). Running from 1995 to 1998, this project's stated and achieved objective was to design and implement an asset management system for use in a broadcast environment to enable cooperative and efficient television production. The results of EUROMEDIA have been commercialised and are being exploited by tecmath under the brand name media archive®. This product is currently installed at several European broadcasters, and a market expansion into North America and Asia is foreseen for the future.

Two related IST projects have been started by one or more organisations involved in AMICITIA: PRIMAVERA [8] and Preservation Technology for European Broadcast Archives (PRESTO) [9]. These projects supplement each other and will work together closely to ensure that no redundant work is done. The objectives of these two concertating projects are:

The areas of collaboration are shown in the graph below:

| Figure 1: Areas of collaboration |

Concertation is by no means limited to these projects only. There is for example an exchange of knowledge and experience with the Forum for Metadata Schema implementers (SCHEMA) [10] project, which concerns metadata. This collaboration is also based on personal contacts.

The AMICITIA project aims to develop and demonstrate new solutions in 4 working areas:

| Figure 2: 4 working areas |

The challenges and their respective solutions will be described in detail below.

Distributed Access is now a problem beyond the premises of a company or of an institution. Producing content is very expensive and there is high pressure to reduce cost. There are several ways to reduce production costs:

These approaches require distributed access to archives across company premises and across content owners. Many content producers, especially broadcasters, plan to reuse their content via online media. They intend to display and sell content not only to partners within the same business but also to other professionals and to the general public.

Another challenge within Europe is multilingualism. Content metadata is predominantly written in the native language of the content producer, which makes the content difficult to retrieve for non-native users.

The AMICITIA project responds to these challenges in two ways. The content management systems will be improved for distributed access across the Internet. For professional users there will be an interface which allows search and retrieval across the Internet, i.e. connection to external content management systems. This requires special protocols which ensure overall system security on the one hand and cooperate with existing security mechanisms such as firewalls on the other. To overcome the language barrier a thesaurus is under development, which assists queries against foreign-language archives.
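As an illustration of how a thesaurus can assist a query against a foreign-language archive, here is a minimal Python sketch. It is not the AMICITIA thesaurus: the data structure, the concept identifiers, the sample terms and the function names `expand_query` and `search` are all assumptions made for this example. The idea shown is simply that a search term is mapped to a language-independent concept, and the concept's terms in the archive's language are used for matching.

```python
from typing import Dict, List, Set

# Hypothetical thesaurus: concept identifier -> terms per language code.
THESAURUS: Dict[str, Dict[str, List[str]]] = {
    "concept:flood": {"en": ["flood"], "de": ["Hochwasser", "Überschwemmung"]},
    "concept:election": {"en": ["election"], "de": ["Wahl"]},
}


def expand_query(term: str, source_lang: str, target_lang: str) -> Set[str]:
    """Map a search term to the target-language terms that share its concept."""
    expanded: Set[str] = set()
    wanted = term.lower()
    for terms in THESAURUS.values():
        source_terms = [t.lower() for t in terms.get(source_lang, [])]
        if wanted in source_terms:
            expanded.update(terms.get(target_lang, []))
    return expanded


def search(archive: List[str], term: str, source_lang: str, target_lang: str) -> List[str]:
    """Return the archive descriptions that contain any of the expanded terms."""
    terms = {t.lower() for t in expand_query(term, source_lang, target_lang)}
    return [doc for doc in archive if any(t in doc.lower() for t in terms)]


# Illustrative metadata written in the producer's native language.
archive_de = ["Hochwasser am Rhein 1993", "Wahl 1998"]
hits = search(archive_de, "flood", "en", "de")
```

A term with no thesaurus entry expands to an empty set and therefore returns no hits; a real system would presumably fall back to the untranslated term or ask the user to refine the query.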

A Web interface developed separately will present selected contents to the general public.

The Distributed Access issue described above raises new challenges for content management systems: while copyrights can be cleared easily within one company, distributing content requires a much higher level of accuracy in rights issues. Professionals won’t purchase content if the rights situation is not clear or if it is very difficult to obtain the rights. Rights management is no easy matter and it is usually done by specialised rights departments, e.g. at broadcasting companies.

Searching distributed archives requires special tools to store, select and sort the search results. Conventional search masks cannot fulfill these needs.

AMICITIA is developing an integrated property rights management system. The existing traffic lights solution (“no problem”, “restricted”, “rights unclear”) will be improved to cover the regional and factual extent of licences and their timeframe. The partners' existing rights management systems are being analysed and will be interfaced wherever possible.
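The extension of the traffic lights to region, kind of use and timeframe can be pictured with a small Python sketch. This is an assumption-laden illustration rather than the AMICITIA design: the `Licence` fields, the `check_rights` function and the rule that a missing licence record means “rights unclear” are all invented for the example. Only the three status labels come from the text above.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import FrozenSet, Optional


class RightsStatus(Enum):
    """The three traffic-light values named in the text."""
    NO_PROBLEM = "no problem"
    RESTRICTED = "restricted"
    UNCLEAR = "rights unclear"


@dataclass(frozen=True)
class Licence:
    """Hypothetical licence record: where, for what, and when content may be used."""
    regions: FrozenSet[str]
    uses: FrozenSet[str]
    valid_from: date
    valid_until: date


def check_rights(licence: Optional[Licence], region: str, use: str, on: date) -> RightsStatus:
    """Evaluate one intended use of an asset against its licence record."""
    if licence is None:
        # Assumption: no record at all is treated as an unclear rights situation.
        return RightsStatus.UNCLEAR
    covered = (
        region in licence.regions
        and use in licence.uses
        and licence.valid_from <= on <= licence.valid_until
    )
    return RightsStatus.NO_PROBLEM if covered else RightsStatus.RESTRICTED


# Illustrative licence: broadcast use in two regions during 2001.
licence = Licence(
    regions=frozenset({"AT", "DE"}),
    uses=frozenset({"broadcast"}),
    valid_from=date(2001, 1, 1),
    valid_until=date(2001, 12, 31),
)
status_ok = check_rights(licence, "AT", "broadcast", date(2001, 5, 7))
status_region = check_rights(licence, "UK", "broadcast", date(2001, 5, 7))
status_missing = check_rights(None, "AT", "broadcast", date(2001, 5, 7))
```

Evaluating the light per intended use, rather than storing a single colour per asset, is what allows the same asset to be cleared in one region and restricted in another.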

To match the needs of searching distributed archives a so-called “story-telling” interface will be developed. The user can sort, select and pre-arrange search results with it. The new tool will support complex research work providing store and recall functionalities for long-term work and a facility to share results to empower collaborative work.

Once digitised, content was often thought to be immortal. This is a popular fallacy: the bits of digitised content age rapidly. Digital content is exposed to degradation mainly because the physical media on which it is stored, e.g. disks or tapes, degrade. In contrast to analogue recordings, where the degradation may be visible or audible, digital recordings degrade stealthily. Suddenly bits flip from “1” to “0” or vice versa, or they drop out and become unreadable. If such a bit error hits a vulnerable area of a digital recording, such as a file allocation table, a whole group of assets may be lost. Restoration of damaged digital media is very tedious, expensive and often impossible, because assets such as video frames are coded and compressed using complex algorithms. Most digital recording devices therefore employ error-correcting codes and algorithms to overcome and correct single bit errors. Although they do work, they work invisibly to the user of the media, who therefore has no knowledge of the health of his or her media.

AMICITIA is developing a new strategy to protect digital content. This strategy has three components:

The system alerts the operator before the number of defective bits exceeds the critical threshold beyond which errors can no longer be corrected. To achieve this the system continuously monitors the bit error rate in the recording devices, e.g. the tape drives in the tape libraries. Using a statistical approach, the bit errors are analysed and compared with past results in order to estimate the threat to the digital content.

Once a real threat is detected the operator is warned and the threatened content is migrated to a new medium. If tapes are used, the content of a damaged tape is copied to a new one. Restoring damaged media is not within the scope of the AMICITIA project but within that of the related PRESTO project.

Continuous maintenance tries to avoid bit errors by maintaining drives and media. This is done by periodically cleaning the drives, monitoring their head adjustments and caring for the media, e.g. by rewinding tape cartridges.
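The monitoring component described above can be illustrated with a minimal sketch. It is not the AMICITIA implementation: the function name `assess_medium`, the status labels, the 50% safety margin and the three-sigma rule are all assumptions chosen for illustration. The sketch compares the latest corrected-bit-error rate of a medium with its own history and with a critical rate beyond which correction would fail.

```python
from statistics import mean, stdev


def assess_medium(error_rates, critical_rate, margin=0.5, sigma=3.0):
    """Classify a medium from its history of corrected-bit-error rates.

    error_rates   -- past measurements, oldest first (hypothetical input)
    critical_rate -- rate beyond which errors can no longer be corrected
    margin        -- fraction of critical_rate that triggers migration (assumed)
    sigma         -- how many standard deviations count as unusual (assumed)
    """
    if not error_rates:
        return "ok"
    latest = error_rates[-1]
    # Act well before the critical threshold is actually reached.
    if latest >= margin * critical_rate:
        return "migrate"
    history = error_rates[:-1]
    # Compare the latest reading with past results for the same medium.
    if len(history) >= 2:
        if latest > mean(history) + sigma * stdev(history):
            return "watch"
    return "ok"


print(assess_medium([1e-9, 1.1e-9, 0.9e-9, 1e-9, 5e-9], critical_rate=1e-6))
```

A real system would presumably use a richer statistical model and per-drive calibration; the point here is only the ordering of the checks: migrate before the critical rate is reached, and flag unusual growth earlier still.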

Most of the big broadcasting companies have been in operation for several decades. Their archives contain some 100,000 hours of analogue video material. This material is ageing and is waiting to be digitised and annotated to protect it from further degradation. Even in the digital age, recordings are made on (digital) tapes which have to be re-read and transferred into the digital domain of a content management system.

It is clear that such an amount of work cannot be done manually; especially if it is done in parallel. If the digitising is done automatically the quality of the digitised content needs to be supervised continuously. No human eye can watch these endless streams of digitised video.

The project is developing a robot digitising station based on a tape library system capable of handling mixed media. The operator loads a batch of tapes into the robot system, then defines and starts the batch digitising process. The system does the rest, continuously monitoring the quality of the digitised video. A separate module analyses the video quality of the digitised material using digital signal processing techniques. If the quality of the digitised material is not sufficient, a fail-over process starts and the event is logged. The operator can supervise the batch process, retrieve the logs and start further actions.
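The batch process described above can be sketched as a simple control loop. Everything named here is hypothetical: `digitise` and `quality_score` stand in for the robot station and the signal processing module, and the fail-over is assumed to be a plain retry, since the article does not say what the fail-over process actually does.

```python
def run_batch(tapes, digitise, quality_score, min_quality=0.8, max_attempts=2):
    """Digitise each tape, check its quality and log every failed attempt.

    digitise and quality_score are caller-supplied stand-ins (assumptions)
    for the robot station and the video quality analysis module.
    """
    accepted = {}   # tape id -> digitised result that passed the check
    event_log = []  # (tape id, attempt number, score) for each failure
    for tape in tapes:
        for attempt in range(1, max_attempts + 1):
            video = digitise(tape)
            score = quality_score(video)
            if score >= min_quality:
                accepted[tape] = video
                break
            # Assumed fail-over: log the event and try the tape again.
            event_log.append((tape, attempt, score))
    return accepted, event_log


# Toy stand-ins so the sketch can be run without any hardware.
accepted, log = run_batch(
    ["tape-a", "tape-bad"],
    digitise=lambda tape: tape.upper(),
    quality_score=lambda video: 0.1 if "BAD" in video else 0.9,
)
print(accepted)
print(log)
```

The event log is what the operator would consult afterwards to decide on further actions for tapes that never passed the check.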

The AMICITIA project is now at the stage of completing the workflow analysis and requirements engineering phase. Coarse system designs have been drafted and the first graphical user interfaces have been discussed. The system architecture will now be refined to prepare the implementation of the components.

The first working prototype modules are expected by the end of this year. These modules will then be integrated into the content management system and extensively tested under real world conditions at our broadcasting partners.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Stephan Schneider

Project Manager

Tecmath AG

Content Management Systems Division

Sauerwiesen 2

67659 Kaiserslautern

GERMANY

stephan.schneider@cms.tecmath.com

<http://www.tecmath.de/>

Phone: +49 6301 606 200

Fax: +49 6301 606 209

Stephan Schneider is employed as Project Manager at the Research Department of Tecmath AG. He is responsible for the IST-Projects AMICITIA (IST1999-20215) and PRIMAVERA (IST1999-20408).

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

For citation purposes:

Schneider, S. "AMICITIA – New Solutions for Today’s Challenges in Digital Audiovisual Archives", Cultivate Interactive, issue 4, 7 May 2001

URL:

<http://www.cultivate-int.org/issue4/amicitia/>

By Michal Haindl and Josef Kittler - May 2001

Michal Haindl and Josef Kittler provide an overview of the joint research INCO-COPERNICUS project no. 960174 VIRTUOUS (Autonomous Acquisition of Virtual Reality Models from Real World Scenes). The article describes the project objectives, introduces the partners and summarises its main achievements.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

VIRTUOUS [1] (Autonomous Acquisition of Virtual Reality Models from Real World Scenes) was an international research project financed from 1997 to 1999 by the Commission of the European Communities within the framework of the INCO-COPERNICUS scheme.

Virtual reality systems can be used for a variety of applications in entertainment, medicine and manufacturing. Thus producing detailed models is of generic interest. Unfortunately the customary manual creation of virtual reality models of real world scenes is tedious and error-prone, particularly for scenes of high complexity. Any automation that can substantially reduce the laboriousness and consequently the cost of the whole process would be very beneficial. In this context visual sensors offer the ideal route to automation, especially when range and vision sensors are already common and their mutual registration can be accomplished using either standard photogrammetric techniques or an appropriate sensor setup.

The objectives of this 3-year project were to build detailed texture mapped surface models of complex real world objects, to develop efficient ways of processing colour textures and to use these models in a robot arm trainer and simulator. The core of the project was to capture virtual reality models of real world robot cell scenes automatically, without interaction with a human observer and then to validate these models in a Virtual Reality Robot Arm Trainer application. To get a lifelike simulation of the manufacturing process, it was necessary to capture 3D graphic information about all objects located in the robot workcell and make it available to the trainer in a suitable form. The aim was to automate this process as much as possible and to avoid any errors. The key approach is to combine range and visual sensor data to build object and scene models. The models are processed by a scene properties extractor and used by the trainer.

VIRTUOUS was a joint research project between the University of Surrey, Guildford, United Kingdom; the Instituto Superior Técnico, Lisboa, Portugal; the Institute of Information Theory and Automation, Prague, Czech Republic; and the Institute of Control Theory and Robotics, Bratislava, Slovakia.

The University of Surrey (UoS) [2] was the coordinator of the whole project. Apart from project management, the work at UoS was primarily aimed at building detailed surface models of complex real world objects from range images.

The Instituto Superior Técnico (IST) [3] used computer vision techniques to acquire scene models from video sequences taken from a mobile platform. Although the objective was the same as that pursued by the University of Surrey, this was a more challenging task. The advantage of scene reconstruction from video sequences is the low cost of the sensor. However, the software processing is considerably more complex.

The objectives of the Institute of Information Theory and Automation (UTIA) [4] in the VIRTUOUS project were to segment colour and range images of a single scene, to develop algorithms for the analysis of real textures found in this scene and to resynthesise these textures efficiently using appropriate mathematical models. Finally, the synthetic textures were fused with shape data and mapped onto the faces of the corresponding virtual objects.

The Institute of Control Theory and Robotics (ICTR) [5] addressed the main project application - development of a Virtual Reality Robot Arm Trainer, which also provided a mechanism to validate the scene models.

A tool for registering partial surface fragments prior to fusion into a single model was developed [UoS]. Several algorithms [6] were developed for improving the quality of registered and fused 3D data, based on surface refitting, surface decimation and data recalibration. Further improvements were achieved using newly developed methods for n-view registration [7] and joint centre extraction [8]. The n-view approach significantly decreased the error accumulated by the traditional pairwise registration alternative. Because individual real world objects can have moving parts, work was also done on the extraction of joint centres, and a novel technique based solely on marker measurements was developed [8].

A technique for building 3D models from a sequence of uncalibrated images was developed [IST]. A correspondence analysis method [9] has been developed based on robust matching criteria. The method has a breakdown point of 50% outliers. It allows both the integration of successive images into a mosaic and 3D reconstruction, which is accomplished using either a novel maximum likelihood estimation algorithm [10], [11] for jointly recovering the structure, camera motion and camera intrinsic parameters, or an approximate method which is much faster. As the approximate reconstruction method is sensitive to missing data, an algorithm has been devised for segmenting input data into subsets in which a set of features is visible in all images. The reconstruction results obtained for the different image subsets are then fused to obtain a single model. Another method, described in [12], computes a dense disparity or velocity field between two images captured from different viewpoints.
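What a 50% breakdown point means can be shown with a small example. This is not the correspondence method of [9]; it simply contrasts the median, a standard estimator with a 50% breakdown point, with the mean, which a single gross outlier can corrupt. The displacement values and the name `robust_shift` are invented for illustration.

```python
from statistics import mean, median


def robust_shift(displacements):
    """Estimate a common image shift with the median (50% breakdown point)."""
    return median(displacements)


# Hypothetical feature displacements in pixels: five good matches
# around 2.0 and four gross mismatches (fewer than half of the data).
inliers = [2.0, 2.1, 1.9, 2.0, 2.2]
outliers = [100.0] * 4
measurements = inliers + outliers

print("median estimate:", robust_shift(measurements))
print("mean estimate:  ", mean(measurements))
```

As long as fewer than half of the measurements are outliers, the median stays within the range of the good matches, whereas the mean is dragged far away from it.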

Three novel range image segmentation algorithms [13], [14], [15] and two algorithms [16], [17] for colour texture segmentation were published [UTIA]. One of the range image segmentation algorithms [13], [14] is based on a combination of recursive adaptive regression model prediction, for detecting range image step discontinuities, and region growing on surface lines. The algorithm [14] assumes scene objects with planar surfaces, but its segmentation quality is higher on noisy range data while keeping the numerical efficiency of the simpler method [13] published in 1997. This algorithm outperforms most of the existing range image segmentation algorithms of its category.

Figure 1: Range image and its segmentation.

Colour texture segmentation methods are based on underlying Markov random field models. One of them uses a novel recursive maximum pseudo-likelihood Gaussian Markov random field parameter estimation method [17]. Due to this new estimator the method is significantly faster than a similar method recently published in IEEE PAMI.

Figure 2: Natural texture mosaic (marble, sand, grass, stone) and its segmentation.

Several multiscale colour texture models based on Markov random fields (MRFs) [18] were derived in the project. The main advantage of these models is the possibility of synthesising texture data using fast non-iterative computations. At the same time the models are flexible enough to model a large set of natural colour textures. The models assume spectral factorisation of the original colour texture data space into an orthogonal Karhunen-Loeve space, where each spectral component can be independently modelled by its dedicated 2D (mono-spectral) multi-scale MRF. Multiple resolution decomposition is based on the Laplacian pyramid technique. The resulting band-pass mono-spectral factors can be efficiently modelled with lower order MRF models.
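The multiple resolution step can be illustrated with a deliberately simplified Laplacian pyramid. The sketch below works on a one-dimensional signal and replaces the usual Gaussian filter with plain pair averaging; both are simplifications assumed here for brevity and are not taken from [18]. It shows how a signal splits into band-pass layers plus a coarse residual from which it can be rebuilt exactly.

```python
def downsample(signal):
    """Average adjacent pairs: a crude low-pass filter plus decimation."""
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal) - 1, 2)]


def upsample(signal, length):
    """Expand back to `length` samples by repeating each coarse value."""
    return [signal[min(i // 2, len(signal) - 1)] for i in range(length)]


def build_pyramid(signal, levels):
    """Return `levels` band-pass layers followed by the low-pass residual.

    Assumes the signal is long enough to be halved `levels` times.
    """
    bands = []
    current = list(signal)
    for _ in range(levels):
        coarse = downsample(current)
        predicted = upsample(coarse, len(current))
        # Band-pass layer: the detail lost by going to the coarser level.
        bands.append([c - p for c, p in zip(current, predicted)])
        current = coarse
    bands.append(current)
    return bands


def reconstruct(bands):
    """Invert build_pyramid by adding the detail layers back, coarse to fine."""
    current = bands[-1]
    for band in reversed(bands[:-1]):
        predicted = upsample(current, len(band))
        current = [p + b for p, b in zip(predicted, band)]
    return current


row = [1, 3, 2, 6, 5, 5, 8, 0]   # one row of a mono-spectral texture factor
pyramid = build_pyramid(row, levels=2)
print(pyramid)
print(reconstruct(pyramid))
```

Each band-pass layer fluctuates around zero and carries only one scale of detail, which is consistent with the remark above that such factors can be handled by lower order models.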

Figure 3: Natural textures (upper row) and their synthetic counterparts.

Finally the trainer [19], which consists of a PC-family computer running real-time robot control software, was connected to a workstation used as a scene viewer. Virtual reality models acquired using the algorithms described above are displayed by a dynamic viewer providing high quality real-time visualisation of the robotics scene. The more advanced the robot workcell or other environment displayed on the workstation monitor, the more realistic the impression experienced by the robot user.

The VIRTUOUS project was concerned with the development of technology for building detailed texture mapped surface models. During the project we developed an advanced methodology for 3D surface registration, a method for 3D object model acquisition from video sequences, several techniques for colour texture modelling and synthesis, a feedback control strategy for registering 3D surface and texture models and, finally, a robot trainer.

Figure 4: Original colour scene, range image, and its virtual model in the original and upside-down rotated view directions.

The project research resulted in more than 20 publications apart from project research reports. These achievements have been accomplished with EU project funds but also with a significant contribution of funding from complementary sources made available at each partner's home institution.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Dr. Michal Haindl

Senior Researcher

Institute of Information Theory and Automation

Academy of Sciences of the Czech Republic

18208 Prague

Czech Republic

haindl@utia.cas.cz

<http://www.utia.cas.cz/>

Phone: +420 2 66052350

Dr. Michal Haindl is employed as a Senior Researcher at UTIA (Institute of Information Theory and Automation, Prague). From 1990 to 1992, he was a visiting researcher at the University of Newcastle, Newcastle; Rutherford Appleton Laboratory, Didcot; the Centre for Mathematics and Computer Science, Amsterdam; and the Institut National de Recherche en Informatique et en Automatique, Rocquencourt, working on several image analysis and pattern recognition projects. From 1992 to 1995, he worked at the Centre for Mathematics and Computer Science, Amsterdam, on a multimedia ESPRIT project. His present research interests concern random field applications in pattern recognition and image processing. He holds Ph.D. and Doctor of Science degrees and is the author of about 140 papers published in books, journals and conference proceedings.

Professor Josef Kittler

Director of the Centre for Vision, Speech, and Signal Processing

University of Surrey

Guildford

GU2 7XH

United Kingdom

j.kittler@surrey.ac.uk

<http://www.ee.surrey.ac.uk/Research/VSSP/index.html>

Phone: +44 1483 879294

Professor Josef Kittler (Ph.D., ScD) is the director of the Centre for Vision, Speech and Signal Processing of the University of Surrey. He has been a Research Assistant in the Engineering Department of Cambridge University (1973-75), SERC Research Fellow at the University of Southampton (1975-77), Royal Society European Research Fellow, Ecole Nationale Superieure des Telecommunications, Paris (1977-78), IBM Research Fellow, Balliol College, Oxford (1978-80), Principal Research Associate, SERC Rutherford Appleton Laboratory (1980-84) and Principal Scientific Officer, SERC Rutherford Appleton Laboratory (1985). His current research interests include Pattern Recognition, Neural Networks, Image Processing and Computer Vision. He has co-authored a book entitled 'Pattern Recognition: A Statistical Approach', published by Prentice-Hall. He has published more than 200 papers. He is a member of the Editorial Boards of IEEE Transactions on Pattern Analysis and Machine Intelligence, Pattern Recognition Journal, Image and Vision Computing, Pattern Recognition Letters, Pattern Recognition and Artificial Intelligence. He has served as the President of the International Association for Pattern Recognition (IAPR).

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

For citation purposes:

Haindl, M. and Kittler, J. "Autonomous Acquisition of Virtual Reality Models from Real World Scenes", Cultivate Interactive, issue 4, 7 May 2001

URL:

<http://www.cultivate-int.org/issue4/virtuous/>

-------------------------------------------------------------

By Cary Karp - May 2001

In November 2000 the Museum Domain Management Association (MuseDoma) announced the approval of its proposal to establish dot-museum as a restricted top-level domain name on the Internet. The approval was made by the board of directors of the Internet Corporation for Assigned Names and Numbers (ICANN), the nonprofit organisation that provides oversight for domain names.

In this article Cary Karp, Director of Internet Strategy and Technology at the Swedish Museum of Natural History, and the President of the Museum Domain Management Association, explains why we need this new top level domain, details its evolution and gives the implications for Europe.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

The massive brick-and-mortar edifices that are normally associated with the word "museum" are an easily recognised attribute of the urban landscape. Any such institution housed in quarters that do not correspond to the stereotype can easily reassure the public about its identity with a label near the doorway containing the familiar "museum" string of letters (or, of course, any number of equivalents to it using other languages and character sets). Museums have long since established annexes in the utterly intangible world of the Internet and, although such things as Web sites have an even greater need for clear labels, there is no digital way to convey the authority of the name of a museum that is graven in stone on its grand façade. Translated into technojargon, if on the Internet nobody knows you're a dog, on the Internet nobody knows you are a museum, either.

There is probably little reason to worry about the consequences of an inability to distinguish between, say, Web sites operated by pretend dogs and sites operated by real ones (bona fido canines). There may be greater need to take a less casual approach to material provided by organisations claiming to be museums. The ability to include the letter string "museum" in an Internet domain name can be purchased by anyone having about USD 10 per year to spend on it. There is no requirement, whatsoever, for the activity subsequently conducted in such a domain bearing the slightest relationship to anything that the professional museum community might regard as a legitimate museum purpose. Indeed, any one of the well over ten thousand MUSEUMSOMETHING dot-COMs, dot-ORGs and dot-NETs can as easily be used deliberately to conceal antisocial activity as it can to designate what, indeed, bears the best attributes of museumness.

The Domain Name System (DNS) was never intended to provide more than a convenient means for equating the names that people commonly use to identify the various computers that are connected to the Internet, with the numerical addresses that these computers use to identify each other. There was initially a clear semantic basis for differentiating among what have latterly come to be termed the generic top-level domains (gTLDs) dot-com, dot-org, and dot-edu (with dot-net coming later). This was expressed in rules that have never been more than loosely applied when evaluating requests for registration (with the erratic exception of dot-edu). The traditional response to the concerns expressed in the preceding paragraph would be to dismiss them with reference to their being based on an ascription of significance to domain names that they were never meant to have. A lot has happened, however, since the early days of the DNS.

The removal of all restrictions on commercial participation in the Internet resulted in a staggering inflation in the value of domain names, most particularly in dot-com. This gave rise to the identically named and ever so peculiar dotcom economic phenomenon. Domain names were no longer being used as loosely derived IDs for computers; they were being used to "brand" both products and corporate Web sites. Attractive domain names acquired monetary value of lunatic proportion and domain name disputes generated incessant legal action (lucrative, in turn, to specialised legal professionals). The basis for asserting that domain names were devoid of significant meaning eroded utterly despite persistent hard-line assertions to the contrary. At the same time, all pretence at enforcing the original meaning of the three-letter gTLDs was abandoned toward the unmasked end of generating as much revenue as possible from their operation.

These difficulties were seen looming on the horizon fully five years ago by the late Jon Postel, the creator of the DNS, who proposed their mitigation by the establishment of a large number of new gTLDs each intended to serve a clear purpose that could be recognised from the domain's name. This marked the start of an extraordinarily contentious and protracted discussion about the basis for what was termed Internet governance, with clear focus on modes for anchoring this on an international platform rather than leaving it under the control of its initial sole guardian, the United States Government.

This process is far from over but it has passed at least two milestones. The first was the creation in 1998 of the Internet Corporation for Assigned Names and Numbers (ICANN) [1] and the second was a decision made by ICANN in November 2000 to introduce seven new gTLDs into the DNS. Although the negotiations necessary to formalise these domains are currently in progress there appears to be little doubt that an expansion of the generic top level of the DNS is imminent.

One of the seven new gTLDs is dot-museum, intended to provide the community of Internet users with a means for recognising bona fide museums on the basis of their being registered in a gTLD specifically restricted to such use. The dot-museum charter will be on public record and anyone wishing to know the basis for entitlement to registration in the domain can easily find it.

Although this does nothing to provide the DNS with the ability to assist people who are trying to locate Net-based resources, it does allow for the recognition of the desired resources during the course of the search for them. Peering beyond the formal and narrow constraints of the DNS, having a shared name space for the global museum community can provide significant impetus and support for the development of a directory service that could permit unprecedentedly comprehensive searches for museum information in Net-based repositories. Although domain names traditionally designate computers and named services, the dot-museum nomenclature can easily be extended to provide name space for individual objects in museum collections. This would allow for a name such as monalisa.collections.louvre.museum.
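The structure of such a name can be made concrete with a short sketch. The function `parse_museum_name` is hypothetical and reflects no actual dot-museum naming policy; it only splits a name of the kind shown above into its labels, ordered from the most general to the most specific.

```python
def parse_museum_name(name):
    """Split a dot-museum name into labels, most general first.

    Illustrative only: real dot-museum naming rules are not assumed here.
    """
    labels = name.lower().rstrip(".").split(".")
    if len(labels) < 2 or labels[-1] != "museum" or "" in labels:
        raise ValueError(f"not a dot-museum name: {name!r}")
    # DNS names read from specific (left) to general (right), so reverse them.
    return list(reversed(labels))


print(parse_museum_name("monalisa.collections.louvre.museum"))
# ['museum', 'louvre', 'collections', 'monalisa']
```

Read in this order, the labels resemble a path through a catalogue hierarchy, running from the sector through the institution and its collections to the individual object.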

One of the primary reasons for ICANN having selected dot-museum in the "first wave" of new TLDs is its suitability as the pioneer initiative in the envisioned creation of a larger number of TLDs, each dedicated to one sector of the cultural community. Taking a leap into a future where other sectors operate such domains, identically structured name spaces could be used in each of them that houses catalogable objects, such as dot-library containing magnacarta.manuscripts.british.library.

The DNS was never intended to be applied to the management of such information structures and it would be egregiously misused by any attempt at incorporating it in the implementation of what is being suggested here. That notwithstanding, the name constructs initially devised for the DNS can be extended in far-reaching regards. Museums have a fundamental mandate to describe and catalogue their holdings. The extreme utility of the various repositories of resulting information being interoperable has long been recognised, and means for implementing this have been the focus of much cost and effort. The availability of a single coherent name space into which every single museum object can be placed has potential for bringing the realisation of this goal immeasurably closer. The potential utility of extending this across the boundaries of adjacent cultural sectors should be apparent.

ICANN has entrusted the establishment and enforcement of dot-museum policy, as well as responsibility for the operation of its registry, to the Museum Domain Management Association (MuseDoma) [2]. Although it currently consists of no more than its founding members, the International Council of Museums (ICOM) [3] and the J. Paul Getty Trust [4], MuseDoma has been incorporated as an open membership organisation providing all interested parties with the ability to participate in the on-going discussion of the refining and development of domain policy. The core elements of this policy are a statement of the basis for entitlement to registration in the domain, and the principles used for the naming of subdomains. The primary normative instrument underlying the first of these concerns is the ICOM Definition of Museum as stated in that organisation's statutes [5]. The naming principles are being devised at the time of present writing. Anyone who is interested may follow this activity as it unfolds via MuseDoma's Web site and its e-mail distribution lists [6]. Relevant developments on ICANN's side of the fence may be followed via their equivalent channels.

Although currently absorbed entirely by the legal, administrative, and technical aspects of getting the new TLD up and running, MuseDoma looks forward to being able as soon as possible to turn its attention to the development of value-added services for the dot-museum registrants. Primary among these is participating in the development of directory services that will allow us to harness the potential residing in the broad name space that is at our disposal.

The Internet architects have clearly indicated that they feel the DNS to be inadequate for many of the requirements that users have and, lacking anything better, are imposing on it. The directory services to be devised for use with dot-museum will need to be coordinated with the central initiative, in turn calling broader attention to the needs and potential of this domain (unique among the New Seven in its belonging to a sector with a centuries-long tradition of devising and managing systematic nomenclatural hierarchies).

Once it is moderately comfortably in business, MuseDoma looks forward to sharing its experience in the manifold aspects of the creation of a TLD with its siblings in the cultural community. One of the more daunting aspects of creating a TLD is the prosaic but vital need for a robust technical infrastructure. This includes the various database servers needed for the DNS, for the internal administration of the domain, and for the public availability of key bits of information about subdomain holders -- the so-called WHOIS data. These servers need absolutely reliable high-speed connections to the Internet and, to avoid "single points of failure", need to be maintained redundantly at separate and distant sites. Establishing this technical infrastructure involves enormous headache and expense. Fortunately, multiple domain registries can be operated on a shared platform with each newcomer necessitating an incremental cost that is a fraction of the initial investment.

It would be inappropriate at the moment of present writing to discuss the various means by which MuseDoma may elicit the support of operators of pre-existing such infrastructure. (The matter is subject to negotiations that are currently in progress.) What can be noted, especially given the nature of this publication, is that the options are all centered in Europe. In fact, the initial four years during which the new TLD process had been tracked toward the end of establishing a museum top-level domain - starting with the Postel Proposal and ending with the creation of MuseDoma - were all centered in Europe. The leg work and lobbying was financed primarily by the Swedish Museum of Natural History (NRM) [7] in Stockholm, in which city ICOM's central Internet host is also located.

This activity is now being formalised by the establishment of the dot-museum network information center at NRM. Every top-level domain has its so-called NIC [8], serving as the central point for the coordination of various aspects of the domain's daily operation. With due pride in the dot-museum NIC being created in the capital city of the current President State of the EU, it is being given an acronym that highlights its European basis - musEnic.

This European connection is probably not as coincidental as it first appears. Europe may well be alone in the world as an area that simultaneously houses rich repositories of cultural property, shares them across many language and cultural borders, and is an extremely sophisticated participant in the technological arena on both the consumer and industrial levels. Europe is thus ideally suited as a development and initial deployment arena for the cross-domain initiatives mentioned above. We hope that we will be able to lash musEnic firmly to European ground and that we may then see the rings on the water radiate outwardly from Europe to the rest of the world as we undertake the exhilarating task of building a cultural sector on the Internet. The cradle of the Internet's technological development was the United States of America, which demonstrated its ability to do massive good work in the process. As the Net embarks on another grand phase of its development it would be entirely fitting for Europe to be at the helm.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Cary Karp

Director of Internet Strategy and Technology

Swedish Museum of Natural History

ck@nrm.se

<http://www.nrm.se/>

Phone: +46 8 5195 4055

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

For citation purposes:

Karp, C. "The Sign on the Door: Establishing a Top-level Museum Domain on the Internet", Cultivate Interactive, issue 4, 7 May 2001

URL: <http://www.cultivate-int.org/issue4/museum/>

-------------------------------------------------------------

By Emma Wagner - May 2001

One of the key issues when working with the European Commission and in Europe in general is getting to grips with Eurospeak. Eurospeak can be confusing, complicated and sometimes elitist. It could also be avoided.

Emma Wagner discusses what she calls 'the disease of Eurospeak' and details guidelines for improvement, which the European Commission's Translation Service are trying to get across to authors inside the EC.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Eurospeak comes in all languages, believe it or not, and in all cases the symptoms and causes are the same. In this article I'll talk about the English variant, Euro-English.

Linguists love to be tolerant about the way languages grow. Just as we accept all sorts of regional accents, the argument goes, we must accept and even celebrate all variants in written language… Very politically correct, but it overlooks one simple fact: that when speaking, you can see immediately if your listener doesn’t understand, and re-phrase your statement or adjust your accent immediately; when writing, you don’t get that instant feedback. So it is perfectly possible to churn out reams of incomprehensible writing that no-one will understand – or read!

Anyone trying to communicate in writing, and who wants their message to end up in their readers' brains rather than their bins, is well advised to follow a few rules and stay anchored in the reality of a real language.

One linguist of the tolerant school, David Crystal, writes in English as a Global Language: "There is even a suggestion that some of the territories [...] in which English is learned as a foreign language may be bending English to suit their purposes. 'Euro-English' is a label sometimes given these days to the kind of English being used by French, Greek and other diplomats in the corridors of power in the new European Union, for most of whom English is a foreign language" [1].

I work in those corridors of power – or in one of the offices at the European Commission, to be precise – and the prospect of Euro-English acquiring special status because it is spoken by a powerful elite fills me with dread. Surely that would spell the end of the European Union, because it would cut us off from the public, who have a right to read Commission documents in real English? In a bid to prevent the spread of Eurospeak, Euro-waffle and plain bad English in Commission documents some fellow-translators and I started the Fight the FOG [2] campaign in 1998. We wanted to encourage Commission writers and translators to write clearly, in real English (and real French, real German, real Finnish, etc.). We also instructed them to KISS - Keep It Short and Simple.

I spend much of my working time trying to eradicate Eurojargon and bad English from texts written in the European Commission. Here’s a sample: a paragraph from the minutes of an important committee meeting, 35 (!!) pages long. This came into my department last week, for translation into the 10 other official languages of the EU.

"Mr A welcomed the participants to the ZZZ meeting, in particular to the Malta delegation, that attended the meeting for the first time. He passed the floor to Mrs B who was going to intervene on behalf the French Presidency of the European Union. […]

Mr A informed about the present stage of the works on the Directive on scaffolding and works in height. He said that in October the Council had agreed a common position. In the other hand, the Parliament had presented comments to the project of Directive. A meeting between the Parliament's reporters and the Presidency of the Council had taken place for establishing a more official position in the agreement. There had been a second meeting between the Commission and the political groups of the Parliament for discussing the contents of some of the amendments. He said that the differences between the Parliament and the Council were small and that the Parliament wished scaffolds below the normal height to be included."

Why does this sort of Euro-English get written? Here are some of the causes of the disease:

Drafting by non-native speakers is unavoidable, for organisational reasons, and some of them do an excellent job. But it inevitably causes problems of interference in vocabulary and syntax. Non-native speakers can't be expected to know what sounds natural in English. Even native speakers lose this sensitivity when working outside their mother-tongue environment. When you've heard words like "eventual" and "payment delays" misused hundreds of times, you can lose touch with their real meaning.

English has taken over from French as the main language used for communication inside the EU institutions. Of course, concessions have to be made for spoken communication in an organisation where fifteen different nationalities work together. But as the above example shows, the standard of "English" is often simply too low for written communication. It is certainly more defective than the French written here by non-natives. Why? Because Brussels is a partly French-speaking city? Because the French have stricter grammar and an Académie to outlaw barbaric imports, whereas English is a very flexible language that belongs to everyone and seems to know no rules? Or maybe (unfashionable view coming up here - sorry, Professor Crystal) because English grammar has not been taught in British schools for the past 40 years, so most native English speakers can't even explain to their non-native colleagues why paragraphs like the one quoted above are not real English? Only those of us who learnt foreign languages were lucky enough to acquire any grammar.

Many authors in the EU institutions come from a tradition or a culture where concision is not a virtue. Recently the French arm of a highly respected firm of management consultants did a study for us on one aspect of the Translation Service's operation. Their report ran to 186 pages and paralysed our e-mail system. When I asked them to produce a summary, they did - 50 pages!

Specialised language, or jargon as it is less politely called, aids communication between specialists. But if it spills over into the wrong context, it is irritating and sounds ridiculous. Acronyms such as CFSP, SANCO, SLIC and PECO are all pregnant with meaning for those who understand them, but alienating for those who don't [3]. We encourage authors to spell them out when first used, or to avoid them completely. Another nasty habit of Eurocrats is to use the names of towns to mean something quite different. "Schengen" is no longer a sleepy village in Luxembourg, but an agreement on a passport-free zone; "Amsterdam" is a Treaty, and "Gymnich" is an informal meeting of foreign ministers.

In the desire to secure agreement at any cost, documents are sometimes inflated - and their logic distorted - by the inclusion of disparate material. The motives are excellent, but the result is a kind of patchwork, which is not. Foggy language helps to achieve an appearance of political consensus. But it invariably creates problems for the future, when foggy Treaties and laws have to be put into effect.

The European Commission has recently started work on several solutions:

Maybe in addition there should be a major cutback in the number and length of publications, perhaps based on reader surveys to see which ones are really useful and which could be dispensed with. In addition we could use the power of the Internet to improve the quality of written communication from the Commission. For example, texts on the Europa server could incorporate:

There is a simple cure for this disease called Eurospeak. Let people speak it, by all means, in the interests of cooperation and in-house communication with each other. But encourage them not to write it, if they want outsiders to get the message.

The Fight the FOG campaigners are trying to highlight these key principles of good writing:

Audience awareness. Remember that the defective language we use when tired and rushed is not good enough for the outside world. We must try to prevent jargon spilling over into general writing.

Honesty. Resist the tendency to be pompous, as if status and dignity could be increased by using long words and convoluted syntax.

Responsibility. Beware of "patchwork drafting". Someone must retain overall responsibility for the structure and logic of a document. This is also called accountability.

Planning ahead. Allow enough time for drafting and translation.

Expert editing. Allow experts to rewrite documents before they are translated into 10 and soon 22 languages. Experts can be outside consultants or editors - or translators can do the rewriting. Don't say "they don't know enough about our field to understand our documents". If intelligent, interested readers don't understand, that proves that the documents need to be rewritten.

KISS: Keep It Short and Simple.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Emma Wagner

Head of Department

Translation Service

European Commission

Emma Wagner studied Modern Languages at Cambridge and received her MA in Translation and Interpreting from Bath University. She has worked for the European Commission since 1972 as a translator and translation manager. She is currently head of a translation department with 250 staff translating into and out of the 11 official languages of the European Union. In 1998 she started the Fight the FOG campaign at the European Commission because "foggy language is alienating for the general public and difficult to translate well".

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

For citation purposes:

Wagner, E. "Eurospeak – Fighting the Disease", Cultivate Interactive, issue 4, 7 May 2001

URL: <http://www.cultivate-int.org/issue4/eurospeak/>

-------------------------------------------------------------

By Mike Robbins - May 2001

Mike Robbins gives a further introduction to Asia IT&C, a programme first mentioned in the news and events section of issue 2 of Cultivate Interactive. Asia IT&C is a five-year programme under the European Commission which co-finances projects in the Information Technology and Communications (IT&C) sectors. The projects must be joint activities between non-profit-making partners in at least two EU member states, and at least one of the participating countries/territories, which are: Afghanistan, Bangladesh, Bhutan, Brunei, Cambodia, East Timor, India, Indonesia, Laos, Malaysia, Maldives, Nepal, Pakistan, Philippines, Sri Lanka, Thailand and Vietnam. The lead partner can be either Asian or European. Co-financing of up to 80% and €400,000 is available, depending on the programme component.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Asia IT&C began in late 1999. Its purpose is to address not only the ‘digital divide’ between rich and poor nations, but also between knowledge-rich and knowledge-poor members of the same society. It does this by co-financing co-operation between Asia and Europe. This is the core philosophy underpinning the programme; its intention is to strengthen links between the IT&C sectors from both continents, rather than just handing out grants. Like all EU activities, the programme is intended to involve more than one EU member country, which is why there must be partners from at least two different countries. If just one EU country was involved, bilateral assistance from that country’s Government would be more appropriate.

The partners must be non-profit-making; typically, they are Government departments, colleges, universities or NGOs (non-government organisations). However, this does not mean that the programme is irrelevant to the private sector: consideration is also given to projects that intend to strengthen the IT infrastructure for business. The programme is also happy to consider proposals from Chambers of Commerce and industrial or commercial associations and federations.

There are currently two Project Management Offices (PMOs): one in Belgium and the other in Thailand. The Bangkok office is hosted by the Kingdom of Thailand through its National Electronic & Computer Technology Centre (NECTEC), a component of the Ministry of Science, Technology and the Environment (MOSTE).