Deliverable: D1.1 Evaluation report of existing broker models Issue: 0.2 Date of issue: 18 April 2000

Project Number: IST-1999-10562

Project Title: Renardus - Academic Subject Gateway Service Europe

Deliverable Type: Public

Deliverable Number: D1.1

Contractual Date of Delivery: 31 March 2000

Actual Date of Delivery: 18 April 2000

Title of Deliverable: Evaluation report of existing broker models in related projects

Workpackage contributing to the Deliverable: WP1

Nature of the Deliverable: Report

URL: http://www.renardus.org/deliverables/

Authors: Michael Day, Anders Ardö, Matthew J. Dovey, Martin Hamilton, Risto Heikkinen, Andy Powell and Arthur N. Olsen.

Contact Details: Michael Day, UKOLN: the UK Office for Library and Information Networking, University of Bath, Bath BA2 7AY, UK. Email: m.day@ukoln.ac.uk, Phone: +44 1225 323923, Fax: +44 826838, URL: http://www.ukoln.ac.uk/

Abstract

Broker services enable the integration of distributed and heterogeneous information resources. The Renardus project will implement a Europe-wide Internet information gateway service based on a generic broker architecture and data model that will allow the integrated searching and browsing of distributed resource collections. This report reviews eighteen broker architectures that have been developed for existing services and projects. An attempt has been made to map the function of each of these architectures (or broker models) onto the generic MODELS Information Architecture (MIA) and, more specifically, onto the MIA structure developed to describe services known as DNER Portals. The report concludes with some observations on the broker models reviewed and the protocols and software that they use.

Keywords

Broker models, Broker architectures, Renardus project, MODELS Information Architecture, MIA, Information gateways, Digital libraries

Distribution List: Renardus project partners

Issue: 0.2

Reference: renardus-11-v02.doc

Total Number of Pages: 88

Table of Contents

1 Introduction

1.1 Broker services

1.2 The MODELS Information Architecture (MIA)

2 The Broker Review

2.1 Agora

2.2 Aquarelle

2.3 ASF Freeware

2.4 CHIC-Pilot

2.5 Cooperative Online Resource Catalog (CORC/Mantis)

2.6 DEF - Denmark's Electronic Research Library

2.7 Die Digitale Bibliothek Nordrhein-Westfalen (NRW)

2.8 ETB: the European Schools Treasury Broker

2.9 EUropean Libraries and Electronic Resources in Mathematical Sciences (EULER)

2.10 Finnish Virtual Library (FVL)

2.11 GAIA: Generic Architecture for Information Availability

2.12 Harvest

2.13 ht://Dig

2.14 ISAAC Network

2.15 Jointly Administered Knowledge Environment (jake)

2.16 Networked Computer Science Technical Reference Library (NCSTRL/Dienst)

2.17 Resource Discovery Network: Resource Finder

2.18 ROADS

2.19 UNIverse

3 Considerations towards determining an architectural model

3.1 Introduction

3.2 Gateway

3.3 Search Engine

3.4 User interface

4 Conclusions

4.1 Introduction

4.2 Classification of the brokers reviewed

4.3 Protocols

4.4 Software

5 References

| Issue | Date of Issue | Comments |
|---|---|---|
| 0.1 | 13 April 2000 | First draft, for initial review by contributors. |
| 0.2 | 17 April 2000 | Second draft, for review by project partners. |

The object of the Renardus project is to establish an academic subject gateway service in Europe. The pilot system will be based on a generic broker-architecture and data-model that will allow the integrated searching and browsing of distributed resource collections.

For Renardus, it is important to ensure that any chosen solution is based on emerging developments rather than being constrained by decisions made by the subset of gateways that are participating in the initial stages of the project. This report, therefore, reviews eighteen broker models that have been developed for a variety of existing services, projects and initiatives. The models were chosen because they were perceived to be relevant to the digital library context of Renardus.

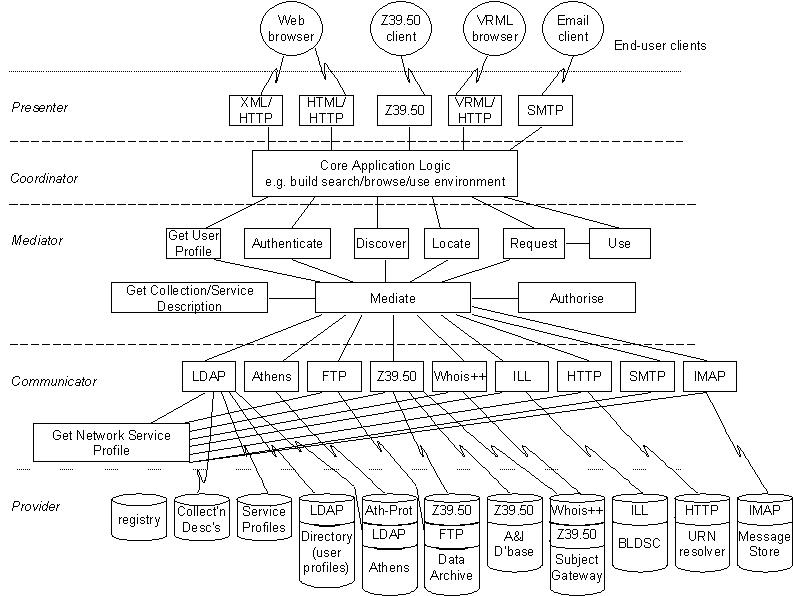

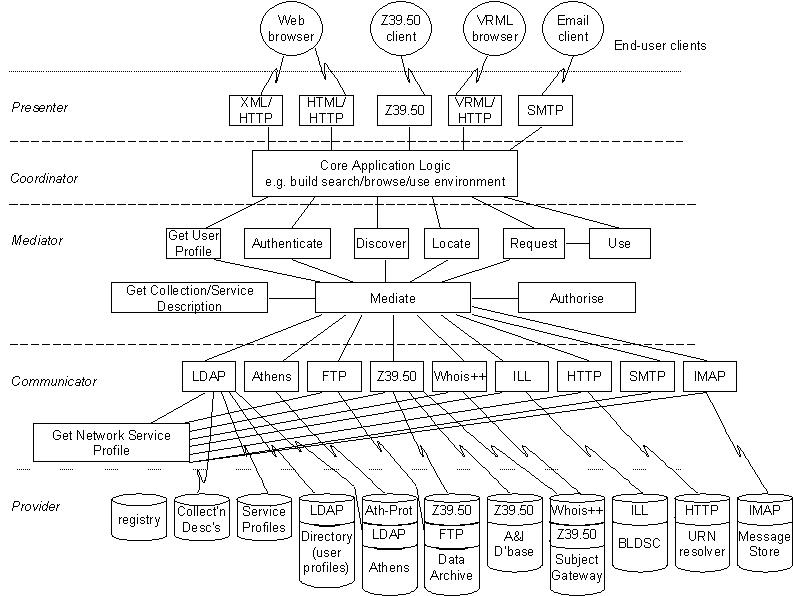

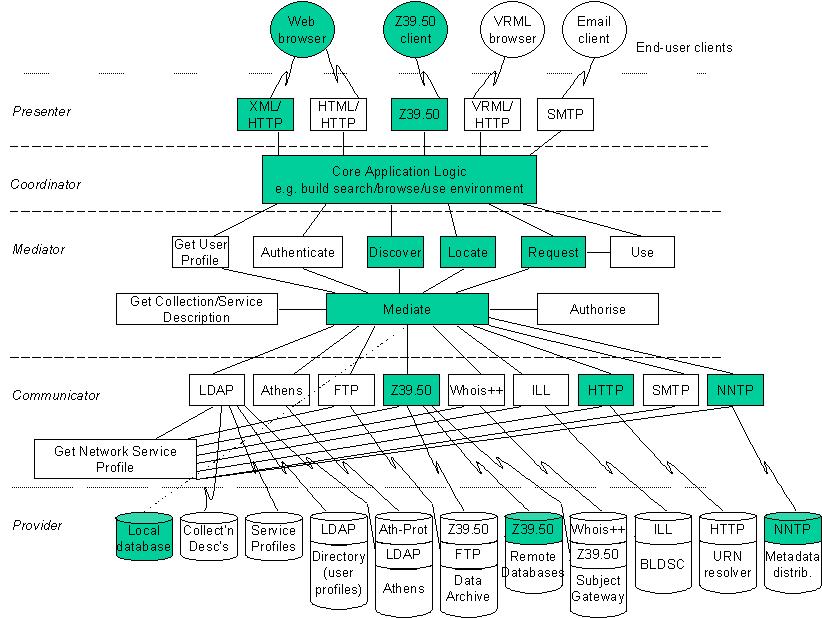

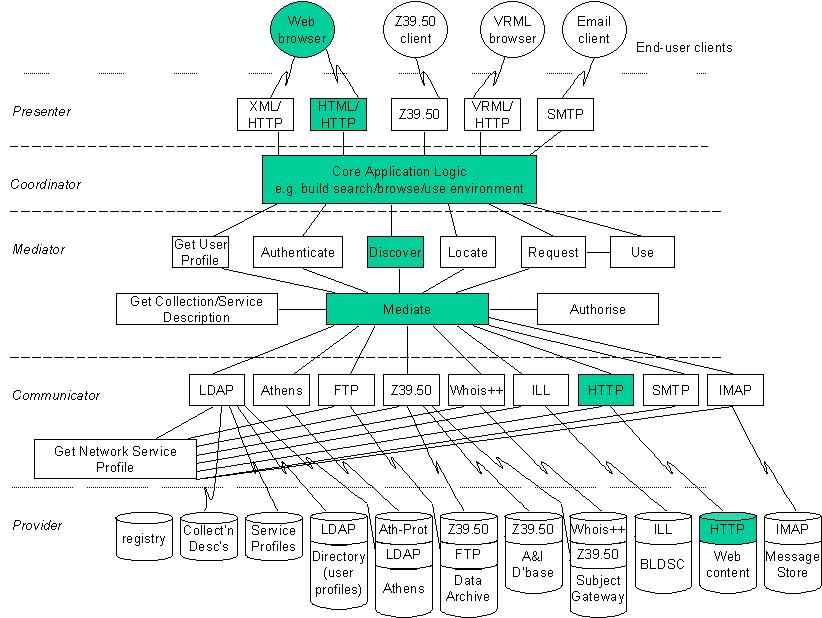

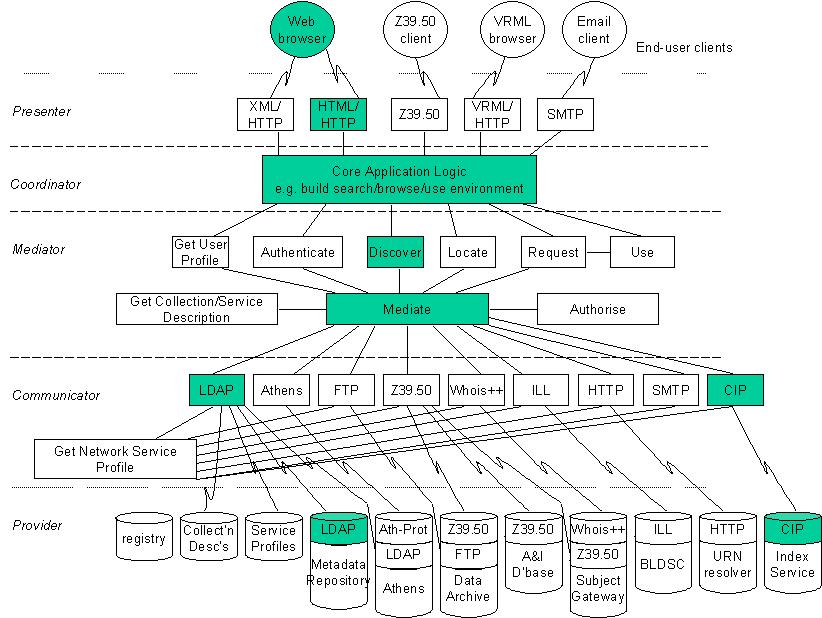

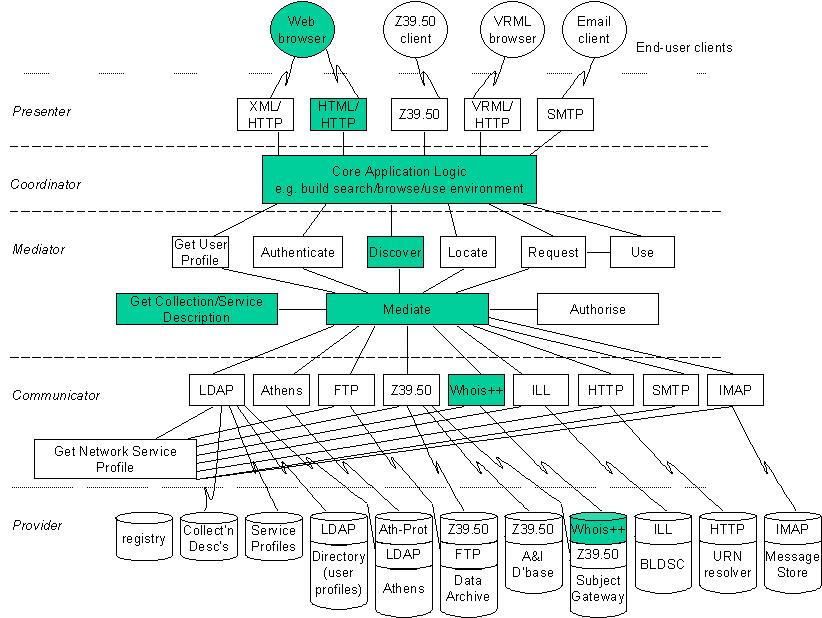

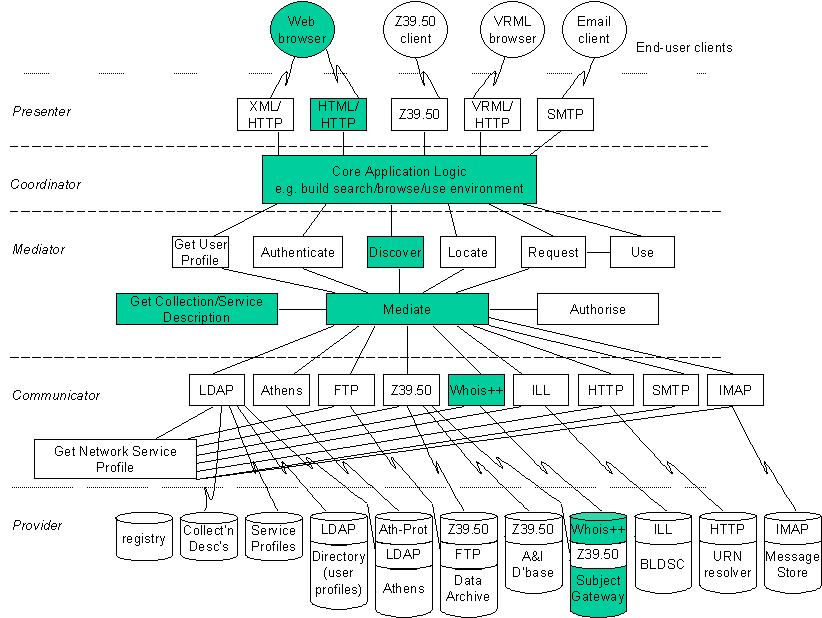

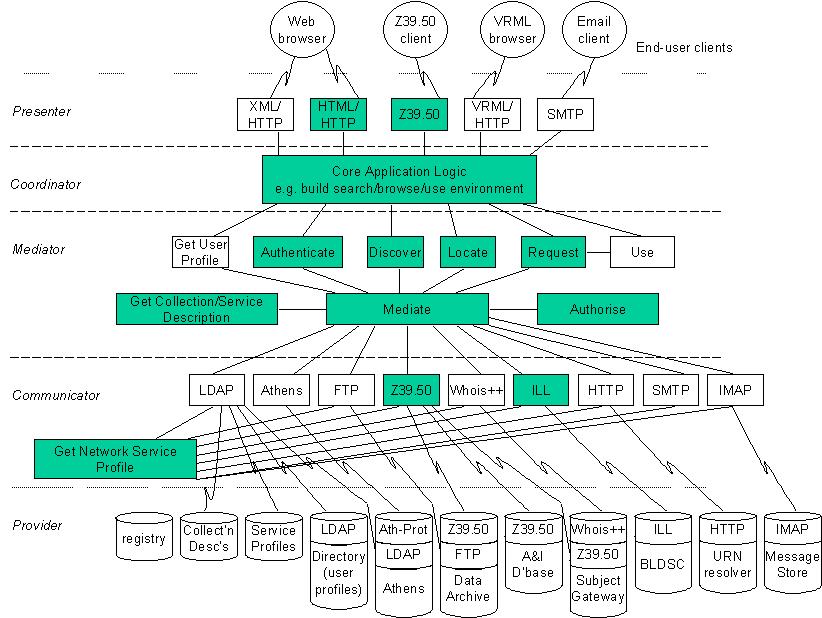

Each broker model is briefly introduced and an attempt is made to map its functions onto the generic model known as the MODELS Information Architecture (MIA). The MIA logical architecture is a layered architecture with five layers:

Presenter

Coordinator

Mediator

Communicator

Provider

The functions of each model are analysed in relation to this logical architecture, and notes are made about the use of standards, protocols and software.

Eighteen broker models are reviewed:

Agora

Aquarelle

Advanced Search Facility (ASF)

CHIC-Pilot

CORC - OCLC's Cooperative Online Resource Catalog

DEF - Denmark's Electronic Research Library

Die Digitale Bibliothek Nordrhein-Westfalen (NRW)

ETB - the European Schools Treasury Broker

EULER project

Finnish Virtual Library (FVL)

GAIA - Generic Architecture for Information Availability

Harvest Indexer

ht://Dig

ISAAC Network

Jointly Administered Knowledge Environment (jake)

Networked Computer Science Technical Reference Library (NCSTRL)

RDN ResourceFinder

ROADS toolkit

UNIverse

These can be arranged into the following main categories:

The broker models that underlie open source indexing software toolkits like ASF Freeware, Harvest, ht://Dig, jake and ROADS.

The broker models that underlie the cross searching of distributed Internet information gateways like the Finnish Virtual Library and the Resource Discovery Network (RDN) ResourceFinder. These currently tend to be based on open-source software like the ROADS toolkit and use relatively simple Internet protocols like WHOIS++ or LDAP.

Broker models developed to handle more complex requirements, typically where more than one protocol and data format is in use. Some of those reviewed were based, where possible, on open-source software, e.g. the EULER project and the CHIC-Pilot. Others are based to some extent on proprietary software, and some depend on commercial products supplied by library software vendors. For example, the Agora Hybrid Library Management System (HLMS) is based on Fretwell-Downing Informatics's OLIB VDX system. CORC is based on proprietary software developed at OCLC, but could be licensed for use in a project like Renardus. These more complex models tend to rely on standard protocols like Z39.50 and ISO ILL and sometimes need to interact with authentication services.

A broker model being developed for 'information trading' (GAIA).

The review ends with some considerations towards determining an architectural model in Renardus and some conclusions on the broker review itself.

This report is the first public deliverable to be issued by WP1 (Functional Model) of the Renardus project. The objective of WP1 is to develop the architecture that will underpin the Renardus system. WP1, together with WP6 (Data Model and Data Flow), will provide the functional and data-model specifications of the Renardus broker system. To this end, WP1 has begun to analyse the functional requirements of the Renardus broker system from both service provider and end-user perspectives. These requirements were collected from a survey of Renardus participants and have been published as internal deliverable D1.2.

D1.1 is intended to provide background information for the development of a Renardus broker system based on current best practice. Its findings will contribute to the specification of functional requirements for the Renardus system (internal deliverable D1.3) and, ultimately, to the development of the architectural model for the Renardus system (internal deliverable D1.4 and public deliverable D1.5). D1.1 should also provide background information for the development of the Renardus data model in WP6.

Introduction

This report will provide background information for the development of the Renardus architecture. It reviews 18 broker models that have been developed (or are being developed) for a variety of services, projects and other initiatives. Each broker model is introduced and then its functions are mapped to the generic broker architecture known as the MODELS Information Architecture (MIA) - and specifically to a diagram developed by Powell (1999) to provide an MIA view of a DNER Portal.

At the same time as this deliverable was being produced, Martin Hamilton of the Department of Computer Science at Loughborough University of Technology (LUT) was carrying out a similar review of broker models for a project funded by the NSF/JISC International Digital Libraries Initiative - the IMesh Toolkit project. Some of these reviews (ASF Freeware, CHIC-Pilot, Harvest, ht://Dig, ISAAC Network, jake, ROADS) have - with permission - been adapted and included in this report. Other reviews have been provided by Anders Ardö of the Technical Knowledge Centre and Library of Denmark (DTV), Matthew J. Dovey of the University of Oxford Libraries Automation Service (LAS), Risto Heikkinen of Jyväskylä University Library, Andy Powell of UKOLN and by Arthur N. Olsen of NetLab. Production of the deliverable was co-ordinated by Michael Day of UKOLN.

Glossary

ADAM

The Art, Design, Architecture & Media Information Gateway - one of the eLib-funded Internet information gateways.

Agora

A UK 'hybrid-library' project funded under Phase 3 of eLib to explore issues of distributed, mixed-media information management.

AHDS

Arts and Humanities Data Service - a UK service, funded by the JISC and the Arts and Humanities Research Board to collect, preserve and promote re-use of the electronic resources which result from research in the arts and humanities.

ANSI

American National Standards Institute.

Apache

An open-source HTTP server.

Aquarelle

An EU-funded project concerned with developing an information network for cultural heritage.

ARPA

Advanced Research Projects Agency.

ART

Proprietary format used by ARTISO - a gateway to the British Library Document Supply Centre's Automated Request Processing System (ARP) being developed by Fretwell-Downing Informatics. The gateway is compliant with the IPIG Profile for the ISO ILL Protocol.

ASF

Advanced Search Facility.

ASN.1 BER

Abstract Syntax Notation 1 Basic Encoding Rules.

ATHENS

An access management (authentication) service developed for and used by the UK higher education community that enables access to a variety of datasets and information services.

BIOME

The RDN Hub for the health and life sciences.

Biz/ed

A Web-based service (including an Internet information gateway) for business and economics resources - one of the eLib-funded Internet information gateways.

Centroids

Index summaries. Used in the context of ROADS-based services to provide forward knowledge in a cross-searching environment.

CGI

Common Gateway Interface.

CHIC

Cooperative Hierarchical Indexing Coordination - TF-CHIC was a TERENA-funded task force concerned with the co-ordination of harvesting and indexing networked resources.

CHIC-Pilot

A project developed by TF-CHIC that set up a pilot distributed indexing service based on WHOIS++, Harvest, ROADS and Z39.50.

CIMI Profile

A Z39.50 profile for cultural heritage information developed by the Consortium for the Computer Interchange of Museum Information (CIMI).

CIP

Common Indexing Protocol.

CNIDR

Center for Networked Information Discovery and Retrieval.

CNRI

Corporation for National Research Initiatives.

Combine

Software for harvesting and indexing Internet resources - developed at NetLab as part of the DESIRE project.

CORBA

Common Object Request Broker Architecture.

CORC

Cooperative Online Resource Catalog. An OCLC initiative to build a union catalogue of Web-based electronic resource descriptions.

DanZig

Danish Z39.50 Implementers Group.

DAVIC

Digital Audio-Visual Council - an organisation responsible for creating specifications for end-to-end interoperability of broadcast and interactive digital audio-visual information, and of multimedia communication.

DC

Dublin Core.

DCOM

Distributed Component Object Model.

DCMI

Dublin Core Metadata Initiative.

DDC

Dewey Decimal Classification system.

DEF

Danmarks Elektroniske Forskningsbibliotek. Denmark's Electronic Research Library - a virtual library for researchers, students, lecturers and other users of Danish research institutions.

DESIRE

Development of a European Service for Information on Research and Education - a project funded by the European Union.

Dienst

A protocol and architecture for digital libraries that underlies NCSTRL.

DNER

Distributed National Electronic Resource - the JISC's concept of a managed environment for accessing heterogeneous 'quality assured information resources' on the Internet.

Dublin Core

An initiative - sometimes known as the Dublin Core Metadata Initiative (DCMI) - to develop a core metadata element set to facilitate the discovery of digital (networked) resources. Developments in the element set are defined on the basis of international consensus.

EELS

Engineering Electronic Library Sweden.

eLib

The Electronic Libraries Programme - a series of UK higher education-based networking projects, funded by the JISC.

Elki

Internet information gateway edited by the Library of the Finnish Parliament.

EULER

European Libraries and Electronic Resources in Mathematical Sciences - a project funded by the European Union.

EUROPAGATE

A European Union-funded (Telematics for Libraries) project that developed a pilot gateway service through which different clients (including Web browsers) are able to access Z39.50 servers.

ETB

European Schools Treasury Broker.

EEVL

Edinburgh Engineering Virtual Library - one of the eLib-funded Internet information gateways. Now part of the EMC RDN Hub.

EMC

The RDN Hub for Engineering, Maths and Computing.

FDI

Fretwell-Downing Informatics.

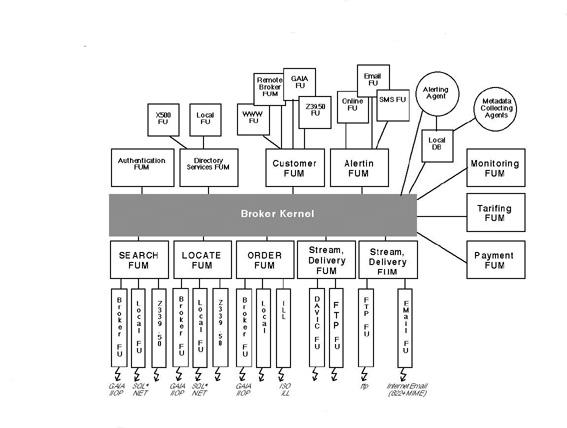

FU

Functional Unit - a concept defined by the GAIA architecture.

FUM

Functional Unit Manager - a concept defined by the GAIA architecture.

FVL

Finnish Virtual Library.

GAIA

Generic Architecture for Information Availability - an EU-funded project aiming to provide a framework for multilateral information trading.

GEDI

Group on Electronic Document Interchange.

GILS

Global Information Locator Service.

Harvest

An open source software initiative offering a distributed solution to the problems of indexing data made available on the Web.

HBZ

The Online Utility and Service Center for Academic Libraries in North-Rhine Westphalia.

HTTP

Hypertext Transfer Protocol.

ht://Dig

A Web-based indexing and searching package being developed as open-source software by a group of volunteers as a community-led project.

IAFA

Internet Anonymous FTP Archive.

IETF

Internet Engineering Task Force.

IHR-Info

A gateway giving access to historical resources run by the Institute of Historical Research (IHR) of the University of London (since re-launched as HISTORY) - one of the eLib-funded Internet information gateways.

ILL

The ISO Interlibrary Loan protocols. There are two parts, a service definition (ISO 10160:1997), which defines the ILL services made available to applications using the protocol, and a protocol specification (ISO 10161-1:1997 and ISO 10161-2:1997), which specifies the content of protocol messages and the procedural rules for exchanging them.

IMesh

International Collaboration on Internet Subject Gateways - an international initiative with the aim of supporting communication and collaboration amongst subject gateway providers and related parties.

IMesh Toolkit

A project funded under the NSF/JISC International Digital Libraries Initiative to develop a configurable, reusable and extensible toolkit for subject gateway providers and to consider issues of relevance in the distributed, international subject gateway environment.

InterCat

Internet Cataloging project - OCLC project to test the use of the USMARC format (including the 856 field) and AACR2 cataloguing rules for describing Internet resources.

Internet Scout Project

Project located in the Computer Sciences Department at the University of Wisconsin-Madison providing summaries of selected high-quality Internet resources.

IPIG

ILL Protocol Implementors Group.

ISAAC Network

An initiative of the Internet Scout Project - linking selective collections of high-quality metadata-based Internet resources.

ISAD(G)

General International Standard Archival Description.

Isearch

Software for text indexing and searching - developed by CNIDR.

Isite

An integrated Internet publishing software package (including Isearch and Z39.50 communication tools) to access databases - developed by CNIDR.

ISO

International Organization for Standardization.

JAKE

Jointly Administered Knowledge Environment.

JISC

Joint Information Systems Committee - a committee funded by the Scottish Higher Education Funding Council, the Higher Education Funding Council for England, the Higher Education Funding Council for Wales and the Department of Education Northern Ireland. Its mission is 'to stimulate and enable the cost effective exploitation of information systems and to provide a high quality national network infrastructure for the UK higher education and research councils communities.'

Kilroy

An OCLC research project building an Internet harvester, full text databases, and metadata databases of Internet resources.

LCSH

Library of Congress Subject Headings.

LDAP

Lightweight Directory Access Protocol.

MALVINE

Manuscripts And Letters Via Integrated Networks in Europe - a project funded by the European Union.

Mantis

A research toolkit developed at OCLC for building Web-based cataloguing systems.

MARC

MAchine Readable Cataloguing. A family of formats based on ISO 2709 for the representation and communication of bibliographic and related information in machine-readable form - e.g. MARC 21 or UKMARC.

MIA

MODELS Information Architecture.

MLO

Music Libraries Online - a UK 'clumps' project funded under Phase 3 of eLib creating a virtual union catalogue for music materials in British libraries, through a Z39.50 gateway.

MODELS

Moving to Distributed Environments for Library Services - a UKOLN initiative supported by JISC (through eLib) and the British Library.

MSC

Mathematics Subject Classification.

NCSTRL

Networked Computer Science Technical Reference Library.

NetFirst

OCLC service giving access to a database of Internet resource descriptions.

NISO

National Information Standards Organization.

NNDP

National Networking Demonstrator Project for Archives - a UK project commissioned by the JISC Non-Formula Funding (NFF) Archives Sub-committee to demonstrate and report on how Z39.50 and ISAD(G) might be successfully employed to provide multi-level cross searching of a range of nominated archival catalogues.

NNTP

Network News Transfer Protocol.

NOVAGate

The Nordic Gateway to Information in Forestry, Veterinary and Agricultural Sciences.

NSF

National Science Foundation.

OCLC

Online Computer Library Center.

OMNI

Organising Medical Networked Information - one of the eLib-funded Internet information gateways. Now part of the BIOME RDN Hub.

PHP

An open-source, cross-platform, HTML-embedded scripting language used to create dynamic Web pages.

RDF

Resource Description Framework - a framework for metadata being developed by the World Wide Web Consortium (W3C) for a variety of different application areas, e.g. resource discovery, content ratings and intellectual property rights management.

RDN

Resource Discovery Network.

RDNC

Resource Discovery Network Centre - organisation responsible for co-ordinating the UK Resource Discovery Network, based jointly at UKOLN and King's College, London.

RIDING

A UK 'clumps' project funded under Phase 3 of eLib that aims to support large-scale resource discovery across the Yorkshire and Humberside region by using the Z39.50 protocol to create a distributed union catalogue.

ROADS

Resource Organisation and Discovery in Subject-oriented services - originally a UK project funded by JISC under eLib, ROADS is an open-source software toolkit for Internet subject gateways.

RTP

Real-Time Transport Protocol - designed within the IETF.

Scorpion

OCLC project building tools for automatic subject recognition based on well-known subject classification schemes.

SET

Secure Electronic Transaction - a protocol that facilitates secure payment card transactions over the Internet, promoted by the major credit card companies Visa and MasterCard.

SGML

Standard Generalised Markup Language - an international standard (ISO 8879) for the description of marked-up electronic text.

SOSIG

Social Science Information Gateway - one of the eLib-funded Internet information gateways, now an RDN Hub.

TERENA

Trans-European Research and Education Networking Association.

TF-CHIC

Task Force-Cooperative Hierarchical Indexing Coordination - a TERENA-funded task force concerned with the co-ordination of harvesting and indexing networked resources.

UKMARC

The MARC standard developed and maintained by the British Library National Bibliographic Service (NBS).

UNIverse

EU-funded project - led by Fretwell-Downing Informatics - concerned with developing services for a distributed virtual union library service.

URN

Uniform Resource Name.

USMARC

The MARC standards maintained by the Library of Congress (Network Development and MARC Standards Office) - now harmonised with CAN/MARC (Canadian MARC) as the MARC 21 format.

WHOIS++

A search and retrieve protocol used, for example, by the ROADS software toolkit to enable cross-searching.

WordSmith

OCLC project intended to improve user access to collections of electronic text by developing ways of identifying and organising clues about their content.

XML

Extensible Markup Language - a lightweight version of SGML developed for use on the Internet.

YAZ

Yet Another Z39.50 Toolkit - a toolkit for implementing Z39.50 developed by Index Data.

Z39.50

An ANSI/NISO protocol for search and retrieval. Version 3 of the protocol has also been accepted as an ISO standard - ISO 23950.

Z39.50 EXPLAIN

A service added in version 3 of the Z39.50 protocol that allows a client to discover information about a server, such as available databases, supported attribute sets and record syntaxes.

ZAP

A search module for the Apache WWW server that utilises the Z39.50 protocol - developed in collaboration between Index Data and the US Geological Survey (USGS).

Zebra

A fielded free-text indexing and retrieval engine with a Z39.50 frontend developed by Index Data.

Michael Day, UKOLN

The object of the Renardus project is to establish an academic subject gateway service in Europe. The pilot system will be based on a generic broker-architecture and data-model that will allow the integrated searching and browsing of distributed resource collections.

It is perceived that, to allow for easy extensibility, the generic broker architecture for Renardus will need to be based on a review of a variety of existing broker models. It is important to ensure that any chosen solution is based on emerging developments rather than being constrained by decisions made by the subset of gateways that are participating in the initial stages of the project.

Most existing broker models have been developed to solve particular problems or to help provide certain services. For example, the ROADS software used by a number of Internet subject gateways has a model based on the use of the WHOIS++ protocol and the generation of index summaries (centroids) to enable cross-searching between multiple gateways. Other broker models have been developed to handle more complex requirements, including systems like Agora that broker access to a variety of different service types.
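The centroid idea can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that a centroid is the set of distinct lower-cased words found in each attribute of a gateway's records, and that an index server forwards a query only to gateways whose centroid contains every query term. The gateway names, records and functions (`build_centroid`, `route_query`) are hypothetical; this is not the ROADS code, and WHOIS++ centroids as specified carry more structure (for example, template types).

```python
from collections import defaultdict

# Hypothetical records held by three subject gateways: each record maps
# attribute names (e.g. "title", "keywords") to free-text values.
GATEWAYS = {
    "gateway-a": [{"title": "Medical imaging resources", "keywords": "radiology imaging"}],
    "gateway-b": [{"title": "Engineering mathematics", "keywords": "calculus algebra"}],
    "gateway-c": [{"title": "Imaging in engineering", "keywords": "optics imaging"}],
}


def build_centroid(records):
    """Summarise a gateway's records as {attribute: set of distinct words}."""
    centroid = defaultdict(set)
    for record in records:
        for attribute, value in record.items():
            centroid[attribute].update(value.lower().split())
    return dict(centroid)


def route_query(centroids, attribute, terms):
    """Return the gateways whose centroid contains every query term for the
    given attribute, i.e. the only gateways worth forwarding the query to."""
    wanted = {t.lower() for t in terms}
    return sorted(
        name
        for name, centroid in centroids.items()
        if wanted <= centroid.get(attribute, set())
    )


# The index server holds one centroid per gateway (its forward knowledge).
centroids = {name: build_centroid(recs) for name, recs in GATEWAYS.items()}

print(route_query(centroids, "keywords", ["imaging"]))
print(route_query(centroids, "title", ["engineering"]))
```

Because the index server holds only summaries, a centroid match says that a gateway *might* hold a matching record: for a multi-term query the terms could come from different records, so the gateway itself still has to run the real search.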

The broker models considered in this report represent a number of different architecture types:

Generic architectures - e.g. the MODELS Information Architecture. MIA is used as a means of comparing the other architectural models reviewed in this report.

Broker-type architectures developed for specific initiatives and projects - broadly speaking, these architectures broker access to a variety of different resource types, e.g. library catalogues, authentication servers, etc. These include projects like Agora, Aquarelle, EULER, GAIA and UNIverse.

Architectures developed to enable the cross-searching of distributed Internet information gateways - usually based on the same search and retrieve protocol, e.g. WHOIS++ or ANSI/NISO Z39.50 (ISO 23950). Examples include the architectures that underlie ROADS cross-searching, the Resource Discovery Network ResourceFinder and the Finnish Virtual Library.

This report attempts to review and evaluate a number of these existing broker architectures to ensure that the Renardus architecture is an example of best practice and that work is not unnecessarily duplicated.

One of the biggest challenges facing those who are developing digital libraries at present is integrating access to the wide range of distributed and heterogeneous information resources and services that are available. The successful integration of these resources and services is perceived as being of great benefit to libraries and their end users. Dempsey, Russell and Murray (1999, p. 35) point out that resources are typically presented, accessed and structured differently, and that users may have to interact with a number of quite different information systems in order to carry out a full search. They suggest the development of an additional service layer - here described as 'middleware' - that would shield the user from any underlying complexity and heterogeneity. This middleware - a broker service - would need to provide "a higher level interface, creating a federated resource from underlying heterogeneity and mediating access to it" (Dempsey, Russell and Murray 1999, p. 38).

Most broker development relates to particular projects or services, e.g. the development of a distributed and heterogeneous mathematics information service in project EULER, or the RDN ResourceFinder. Despite this, several projects and initiatives have tried to address more generic issues. For example, the development of generic broker infrastructure was one of the objectives of the Stanford Digital Library project - funded as part of the original US Digital Libraries Initiative. This project, based at Stanford University, developed a modular testbed infrastructure known as an information bus (or InfoBus) based on CORBA (the Common Object Request Broker Architecture) that enabled the integration of a variety of different digital library functions (Baldonado, et al., 1997; Paepcke, et al., 1996; Paepcke, et al., 1999).

In the UK, much work has been mediated through the MODELS initiative and the development of a generic MODELS Information Architecture.

The MODELS (MOving to Distributed Environments for Library Services) project is a UKOLN initiative that has gained additional support from JISC (through the Electronic Libraries Programme) and the British Library, with Fretwell-Downing Informatics (FDI) as technical consultants. MODELS provides a forum - primarily directed through a series of workshops - that allows relevant stakeholders to explore shared concerns about distributed and heterogeneous resources and services. The initiative has attempted to address design and implementation issues, initiate concerted actions, and work towards a shared view of preferred systems and architectural solutions. It has also played a major role in the development of policy and emerging services in the UK.

MODELS-facilitated deliberations have led to the development of a logical framework for information management in a distributed environment known as the MODELS Information Architecture (MIA). This is not a specification for any particular implementation but a generic model intended to support the discussion and comparison of alternative solutions. Dempsey, Russell and Murray (1999, pp. 38-39) describe the function of MIA in the following way:

The MIA is aligned with wider work that sees the development of 'middleware' or 'broker' services as a central part of how the information environment will develop. It is concerned with the types of function such 'broker' services need to provide as they help project a unified service over a distributed, heterogeneous set of network services. It has a dual focus: as a conceptual heuristic tool for the library community which helps clarify thinking and acts as a lever for development, and as a tool to assist developers as they think about future systems work. The main emphasis has been on the former aspect. The MIA investigates the functional components of viable digital information environments and arranges them in a logical architecture: it does not yet specify how components will be implemented, or concrete interfaces.

The MIA has been described in more detail in two documents produced as part of an MIA Requirements Analysis Study carried out by UKOLN. The first of these describes the MIA's logical architecture, the second its functional model.

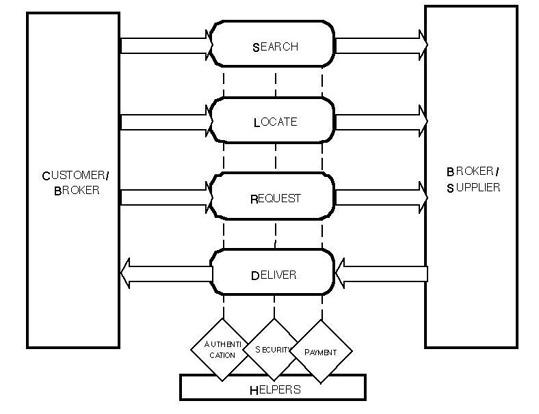

The MIA Functional Model (Gardner, Miller and Russell, 1999b) defines the user functionality of a hybrid information environment. This assumes that the basic behaviour of a hybrid information system is associated with the four MODELS functions:

Discover

Locate

Request

Deliver
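In practice the four functions are often chained within a single user task: finding an item, establishing where it is held, asking for it and receiving it. The Python sketch below models that chain for one hypothetical item. The catalogue, the holdings data and the function names are invented for illustration; they are not part of the MIA Functional Model, which describes functionality rather than interfaces.

```python
from enum import Enum


class ModelsFunction(Enum):
    """The four MODELS functions."""
    DISCOVER = "discover"
    LOCATE = "locate"
    REQUEST = "request"
    DELIVER = "deliver"


# Hypothetical data: a tiny catalogue and a table of holdings.
CATALOGUE = {"item-1": "Introduction to Digital Libraries", "item-2": "Forest Ecology"}
HOLDINGS = {"item-1": ["Library X", "Library Y"]}


def discover(query):
    """Discover: return identifiers of items whose titles contain the query."""
    return [ident for ident, title in CATALOGUE.items() if query.lower() in title.lower()]


def locate(ident):
    """Locate: return the places where an item is held."""
    return HOLDINGS.get(ident, [])


def request(ident, location):
    """Request: create a request for the item from one location."""
    return {"item": ident, "from": location, "status": "requested"}


def deliver(req):
    """Deliver: mark a request as fulfilled."""
    return {**req, "status": "delivered"}


def run_task(query):
    """Chain the functions for the first discovered item.

    Returns the functions performed and the final request, or None if the
    chain stopped early because nothing was found or nothing was held."""
    steps = [ModelsFunction.DISCOVER]
    items = discover(query)
    if not items:
        return steps, None
    steps.append(ModelsFunction.LOCATE)
    locations = locate(items[0])
    if not locations:
        return steps, None
    steps.append(ModelsFunction.REQUEST)
    req = request(items[0], locations[0])
    steps.append(ModelsFunction.DELIVER)
    return steps, deliver(req)


steps, result = run_task("digital")
print([s.value for s in steps], result)
```

Stopping early, as `run_task` does when an item is found but not held anywhere, reflects the fact that each function depends on the output of the previous one.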

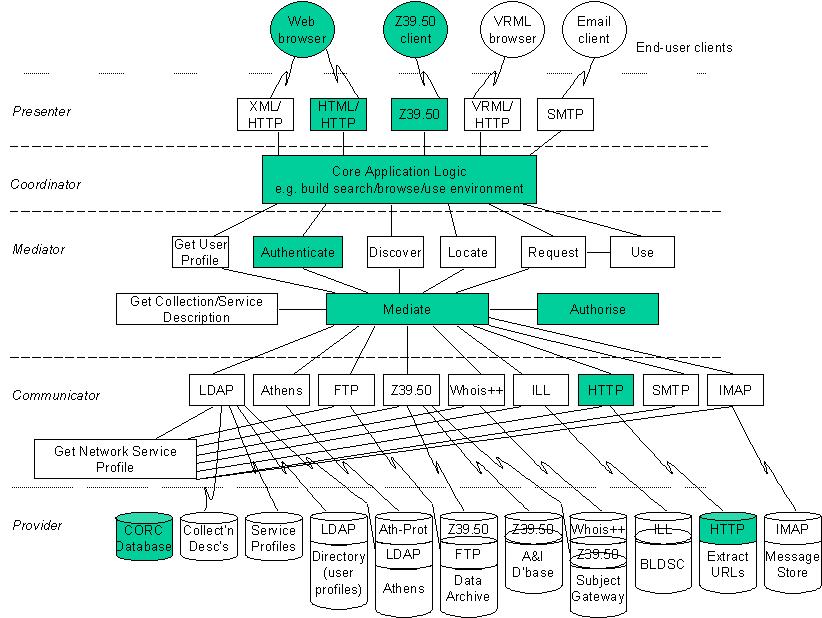

The MIA logical architecture is a layered architecture with five layers. The following descriptions of these layers are adapted from the draft paper by Gardner, Miller and Russell (1999a).

Presenter - This layer is responsible for interacting with users (both human and software), i.e. presenting information to, and accepting input from, the user. The presentation layer may need, for example, to generate HTML, a non-Web GUI interface, a spoken interface, a command-line interface, an email-interface or whatever is required in a particular application. Software clients may also need to be catered for - providing, for example, Z39.50, WHOIS++ and LDAP interfaces to the system. Information entered by the user must be passed on to the Coordinator; this may involve creating 'objects' to pass through agreed APIs or it may involve encoding the data in a particular transport format, e.g. XML.

Coordinator - The Coordinator provides an application layer on top of the Mediator. It may provide high-level services built on lower-level services provided by the Mediator appropriate to its user community (e.g. to search for local resources) and may offer value-added services to its user community, such as maintaining bookmarks and providing services tailored according to user profiles. The Coordinator is responsible for application logic, including user profiles (where the user may be a human or a software agent) and session maintenance (taking into account previous actions within the current session). The Coordinator contextualises requests to the current situation.

Mediator - The Mediator is responsible for understanding the meaning of services (such as search, locate, request and deliver) that may be offered by providers and requested by the Coordinator. The Mediator receives requests from the Coordinator and must determine which service providers (in parallel or in combination) can satisfy the request. Requests may be complex, e.g. a search for a particular book followed by a request to locate libraries that hold it (and possibly online bookstores that sell it).

Communicator - The Communicator is responsible for communicating with external services. It shields the Mediator from details such as communication protocols and service locations, and may also provide basic mapping between metadata vocabularies to achieve a vocabulary understood by the Mediator. In some cases services may directly support communication with the Mediator (they may have been developed to be compatible with it); in such cases the action carried out by the Communicator will be trivial. The Communicator provides a gateway between the Mediator and providers based on a Network Service Profile associated with each service offered by a provider. The Network Service Profile provides details of the location, protocol, query and response formats and metadata vocabularies that are required in order to meaningfully access a service.

Provider - The Provider layer contains the external services accessed by the system. The layer most obviously includes the 'primary' services for which the system exists to provide access; for example library catalogues, abstracting services and subject gateways. The provider layer also includes 'secondary' services that the system must access in order to provide primary services, for example, schema registries, authentication services and user profile directories. It is recommended that all services that could be shared with other systems be externalised in this way.
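The division of labour between the five layers can be illustrated with a deliberately small, in-memory sketch. It is not part of MIA, which is a logical model rather than a specification, and every class, field name and record below is hypothetical. The `NetworkServiceProfile` here carries only a name, a protocol label and a field-name mapping, standing in for the fuller set of details (location, query and response formats) described above, and no network protocol is actually spoken.

```python
from dataclasses import dataclass


@dataclass
class NetworkServiceProfile:
    """Hypothetical, much-simplified stand-in for a Network Service Profile."""
    name: str
    protocol: str     # a label only; no protocol is actually spoken here
    field_map: dict   # Mediator field name -> provider's own field name


@dataclass
class Provider:
    """In-memory stand-in for an external service (catalogue, gateway, ...)."""
    profile: NetworkServiceProfile
    records: list

    def search(self, native_field, term):
        # The provider only understands its own field names.
        return [r for r in self.records
                if term.lower() in r.get(native_field, "").lower()]


class Communicator:
    """Hides each provider's vocabulary from the Mediator."""

    def query(self, provider, field, term):
        native = provider.profile.field_map[field]
        reverse = {v: k for k, v in provider.profile.field_map.items()}
        hits = provider.search(native, term)
        # Rename returned fields back into the Mediator's vocabulary.
        return [{reverse.get(k, k): v for k, v in hit.items()} for hit in hits]


class Mediator:
    """Chooses providers able to answer a request and combines their results."""

    def __init__(self, providers, communicator):
        self.providers = providers
        self.communicator = communicator

    def search(self, field, term):
        results = []
        for provider in self.providers:
            if field not in provider.profile.field_map:
                continue  # this provider cannot satisfy the request
            for record in self.communicator.query(provider, field, term):
                results.append({**record, "source": provider.profile.name})
        return results


class Coordinator:
    """Application logic; here only a per-session query history."""

    def __init__(self, mediator):
        self.mediator = mediator
        self.history = []

    def search(self, term):
        self.history.append(term)
        return self.mediator.search("title", term)


def present(results):
    """Presenter: plain text here, HTML or a Z39.50 target in practice."""
    return "\n".join(f"{r['title']} [{r['source']}]" for r in results)


catalogue = Provider(NetworkServiceProfile("catalogue", "z39.50", {"title": "ti"}),
                     [{"ti": "Broker architectures"}, {"ti": "Cataloguing rules"}])
gateway = Provider(NetworkServiceProfile("gateway", "whois++", {"title": "Title"}),
                   [{"Title": "Subject gateway brokers"}])
coordinator = Coordinator(Mediator([catalogue, gateway], Communicator()))
output = present(coordinator.search("broker"))
print(output)
```

Keeping the vocabulary mapping in the Communicator means the Mediator can combine results from the two hypothetical providers without knowing that one calls its title field `ti` and the other `Title`.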

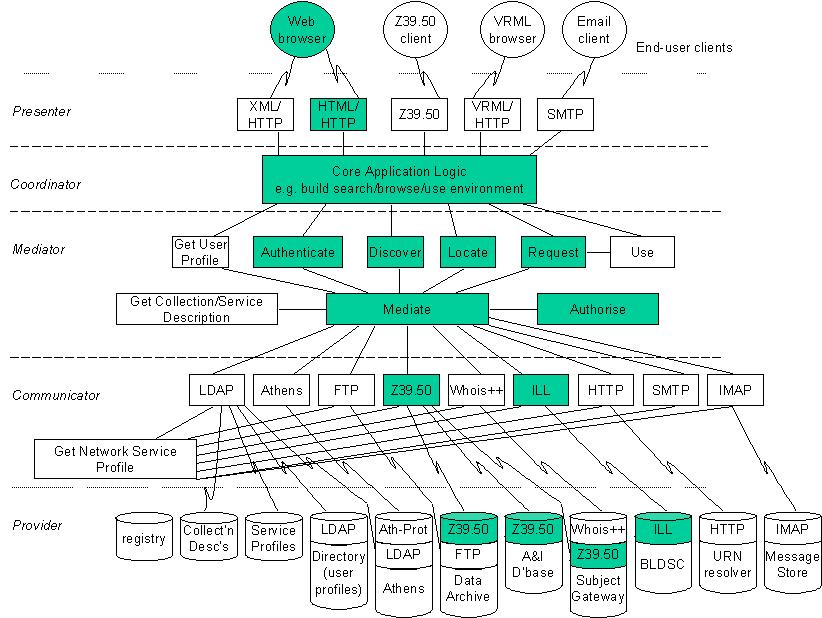

As stated earlier, MIA is not a specification for any particular implementation but a generic model intended to support the discussion and comparison of alternative solutions. The model has, however, been used as the basis for the development of a number of implementations. For example, the UK Arts and Humanities Data Service (AHDS) 'resource discovery' system (Beagrie, 1999) is based on the layered approach to resource discovery developed as part of the MODELS initiative (Russell, 1997), and is broadly based on MIA concepts (Greenstein and Murray, 1997). MIA has also influenced the development of UK hybrid library projects like Agora and services like the Resource Discovery Network. It has also underpinned the JISC's idea of a Distributed National Electronic Resource (DNER). So, for example, a simplified MIA structure has been developed by Powell (1999) to describe DNER services known as DNER Portals (Figure 1.1). It is this structure that has been used as a basis for comparing the different broker models considered in this review.

Figure 1.1: MIA view of a DNER Portal (Powell, 1999)

The 18 broker models described here are reviewed with reference to the MIA. Each broker architecture is briefly introduced and then the Presenter, Coordinator, Mediator, Communicator and Provider levels are described in more detail, sometimes with the help of a diagram based on the MIA view of a DNER Portal (Fig 1.1).

Martin Hamilton of the Department of Computer Science at Loughborough University of Technology (LUT) is carrying out a similar review of broker models for the IMesh Toolkit project - as part of the IMesh Toolkit Architectural Overview deliverable. Some of these reviews (ASF Freeware, CHIC-Pilot, Harvest, ht://Dig, ISAAC Network, JAKE, ROADS) have - with permission - been adapted for inclusion in this report. Other reviews have been provided by Renardus project partners and by Matthew J. Dovey of the University of Oxford Libraries Automation Service (LAS).

Note that broker architectures (including GAIA) have also been evaluated in a report undertaken as part of the EU-funded MALVINE project (Langer, Adametz and Fellien, 1999).

Andy Powell, UKOLN

Agora is a project funded by JISC under phase III of the Electronic Libraries Programme (eLib). The University of East Anglia leads the project, with UKOLN, Fretwell-Downing Informatics (FDI) and the Centre for Research in Library and Information Management (CERLIM) at Manchester Metropolitan University as the other partners.

Agora is a 'hybrid-library' project in that it attempts to integrate the technologies developed for new digital services with those used to give access to traditional library collections. The project builds upon work carried out within MODELS - especially the MIA - and is developing a Hybrid Library Management System (HLMS) that will be an MIA-type broker - a hybrid library demonstrator that provides discover, locate and request functionality across a range of resources. Through this broker, the project is experimenting with providing integrated access to a variety of services that use different protocols and have different interfaces, including library catalogues, Web index services, information gateways and document supply services.

Figure 2.1: Agora related to MIA

The Agora presenter layer provides an HTML/HTTP interface to the Agora 'broker'. A Z39.50 target is expected to be supported in the next version of the Agora presenter layer.

The Agora coordinator, mediator and communicator layers support discover, locate and request functions through underlying use of the Z39.50 and ILL protocols. Authentication, collection description and service profiles are currently stored internally within the Agora system.

Agora expects to provide support for ATHENS-based authentication in the next version of the software.

The Agora provider layer implements a Z39.50 client, through which the mediator communicates with target Z39.50 servers, and an ILL client. It supports several protocols, including ILL and ART, to enable end-users to request delivery of resources (though this is currently only partly working because there is no ILL gateway to the British Library Document Supply Centre).

Collection description is based on the schema developed jointly by several eLib phase 3 projects (including RIDING, Agora and MLO). The schema incorporates and extends the Dublin Core.

Agora supports the GEDI (Group on Electronic Document Interchange) standard for electronic delivery of resources.

Agora uses Z39.50 and the Bib-1 attribute set. The following record types are handled by Agora:

USMARC, UKMARC, Dublin Core, Digital Collections - NNDP Profile, Universe Clusters, GRS-1 generic.

Agora is currently considering implementing support for the California Digital Library protocol.

Fretwell-Downing Informatics (FDI) developed the Agora Hybrid Library Management System, based on their OLIB VDX system.

Agora project: http://hosted.ukoln.ac.uk/agora/

There is also a short description of Agora in Dempsey, Russell and Murray (1999, pp. 58-59).

Matthew J. Dovey, LAS

Aquarelle was a project funded by the European Commission under its Telematics Applications Programme from 1996-1998 (Michard, 1998). The project was concerned with developing an Information Network on Cultural Heritage. This is a distributed information system that attempts to provide uniform access to the varied collections of data held by museums, art galleries and other cultural heritage-based organisations within Europe.

The Aquarelle project was carried out by a European consortium of cultural heritage organisations, IT companies, publishers and research organisations, managed by ERCIM, the European Research Consortium for Informatics and Mathematics. More information is available on the INRIA Web site:

http://aqua.inria.fr/Aquarelle/

Aquarelle is a project designed to provide access to museum collections. As such, it provides a resource discovery system for cultural heritage information. It recognises that archives typically store information in two forms:

Existing primary material called archive data, e.g. records, drawings or maps. Typically, these are available in a variety of forms and structures, e.g. text, images, databases, etc.

Specially created secondary material that consists of structured, hierarchical (e.g. SGML-based) documents that describe, comment on and refer to archive data, etc. Aquarelle developed a mechanism (called folders) for searching and navigating the latter.

The project also provides image-watermarking facilities.

The architecture of Aquarelle is given below. Access to the underlying databases (both archival and folder databases) is provided via a Z39.50 gateway.

Figure 2.2: The Aquarelle Architecture (Michard, 1998)

Dempsey, Russell and Murray (1999, p. 64) point out that the Aquarelle system can be readily mapped to the MIA model:

The Aquarelle Access Server is a broker supporting most of the MIA functions. It maintains a database of registered users for authentication and user profiles. The Aquarelle Directory services maintain a database of collection and service descriptions that support the MIA discover and locate functions. The user interface components present the information landscape in terms of subject domains as well as specific databases; they also provide multilingual thesauri to assist in query formulation. Aquarelle supports the MIA request and delivery functions for Aquarelle folders. In addition Aquarelle offers facilities which are not explicit MIA components: folder publishing, persistent link management and multilingual thesauri for query formulation.

Figure 2.3: Aquarelle related to MIA


The presenter in the Aquarelle model is the user interface layer, which presents information to the end user via HTML over HTTP. It communicates with the user client server in the Access Server layer of the Aquarelle model using an internal query language, AQL (Aquarelle Query Language).

The co-ordinator in the Aquarelle model is provided by the user client server in the Access Server layer. The user client server also provides some mediator facilities, such as authentication and user profiling. Additional functions are provided at this layer in the form of a thesaurus management system and a link management system for navigating structured data (in the form of SGML). More information on the link management system can be found in: E. Fras, Folder Publisher, Link Server and Directory Services, Aquarelle Deliverable No D4.3, 15 Sept. 1997.

The mediator in the Aquarelle model is provided partly by the Access Server API in the Access Server layer, but some facilities as mentioned above are provided by the user client server.

Communication from the mediator to the underlying providers is via the Z39.50 protocol, using a profile that has since been incorporated into the CIMI profile. Records are returned as SGML records encoded in GRS-1 syntax.

The provider needs to be a Z39.50 server. This is either a dedicated Aquarelle folder server, providing access to SGML hypertext documents that typically give information relating to groups of objects and include links to other folders and to object data known to the Aquarelle system, or an existing record database. In the latter case, access may be provided via a Z39.50 gateway for that particular database.

Aquarelle developed a Z39.50 Application Profile based on Draft version 3 of the CIMI profile; this has since been merged into the CIMI standard. Records are transmitted as GRS-1-encoded SGML records. The thesaurus management system is based on the ISO 2788 and ISO 5964 standards.
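To illustrate how a thesaurus built on ISO 2788 relationships (USE/UF equivalence, BT/NT hierarchy) and ISO 5964 multilingual equivalents can assist query formulation, here is a minimal sketch of query expansion. The terms, the data layout and the expansion policy (follow USE, add all narrower terms, then add foreign-language equivalents) are assumptions made for illustration; this does not reproduce Aquarelle's thesaurus management system.

```python
from collections import deque

# Hypothetical miniature thesaurus: descriptor -> narrower terms (NT) and
# equivalents in other languages (in the spirit of ISO 5964).
THESAURUS = {
    "paintings": {"NT": ["frescoes", "watercolours"],
                  "lang": {"fr": "peintures", "it": "dipinti"}},
    "frescoes": {"NT": [], "lang": {"fr": "fresques", "it": "affreschi"}},
    "watercolours": {"NT": [], "lang": {"fr": "aquarelles", "it": "acquerelli"}},
}

# Non-preferred entry terms and the descriptor to USE instead (ISO 2788 USE/UF).
USE = {"murals": "frescoes", "water-colours": "watercolours"}


def expand(term, languages=("fr", "it")):
    """Expand a user's term into descriptors plus foreign-language equivalents."""
    descriptor = USE.get(term.lower(), term.lower())
    if descriptor not in THESAURUS:
        return [term]  # not in the thesaurus: search the term as entered

    # Breadth-first walk down the NT hierarchy.
    descriptors, queue = [], deque([descriptor])
    while queue:
        current = queue.popleft()
        if current not in descriptors:
            descriptors.append(current)
            queue.extend(THESAURUS[current]["NT"])

    expanded = list(descriptors)
    for d in descriptors:
        for lang in languages:
            equivalent = THESAURUS[d]["lang"].get(lang)
            if equivalent:
                expanded.append(equivalent)
    return expanded


print(expand("Murals"))
```

The expanded terms could then be combined with OR in the query sent to providers; whether to expand automatically or to offer the terms to the user for selection is a design choice the sketch leaves open.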

The communication protocols are Z39.50 and HTTP. There is also an internal query language AQL (Aquarelle Query Language).

Aquarelle: http://aqua.inria.fr/aquarelle/public/EN/home-eng.html

Michard, A., ed., 1998, Final report: IE-2005 Aquarelle: sharing cultural heritage through multimedia telematics. Le Chesnay: INRIA. http://aqua.inria.fr/Aquarelle/Public/EN/final-report.html

Michard, A., Christophides, V., Scholl, M., Stapleton, M., Sutcliffe, D., Vercoustre, A-M., 1998, The Aquarelle resource discovery system. Computer Networks and ISDN Systems, 30(13), 1185-1200.

Z39.50 for Access to Cultural Heritage Information - Aquarelle Profile, Version 0.2, 1997-07: ftp://lcweb.loc.gov/pub/z3950/profiles/aqua.txt

Martin Hamilton, LUT

ASF (Advanced Search Facility) is an interoperability framework for (predominantly) government information. The 'freeware' ASF implementation reviewed here is being developed by a diverse group that was initially funded under the U.S. Information Technology Innovation Program.

Version Reviewed: 1.3.2 (17th March 2000)

Download: http://asf.gils.net/

Status: A number of packages each with their own conflicting copyrights, plus additional code written by the ASF group which has no copyright assignment.

Support: Community support is available via the asf@cni.org mailing list.

Platforms: Development and testing are done using Linux. Some support for other Unix variants (e.g. OpenBSD and NetBSD) is also available.

Prerequisites: Linux, Perl, C/C++ compiler

The ASF freeware distribution consists of:

ASFcrawl - a robot based indexer (the Pavuk package)

ASFserv - a Z39.50 server (built with the IndexData Yaz library)

ASFhttpd - a Z39.50 enabled HTTP server (a custom Apache server with the IndexData 'Zap' Z39.50 client module, which also uses Yaz)

There are also a number of CGI based and X Window admin programs for configuring and controlling the ASF node, and an experimental WHOIS++ server.

The underlying search engine for ASFserv and ASFwhois is the CNIDR Isearch library.

The Zap Apache module

The ASFserv Z39.50 server

The ASFcrawl component of the ASF Freeware package effectively constitutes an additional layer that MIA does not directly address.

The end user interacts via HTTP with an Apache module, mod_zap, which provides the actual search capabilities. The database being searched is constructed using ASFcrawl, which performs HTTP-based traversal and indexing starting at a nominated URL. The ASF database is normally accessed via the Z39.50 search and retrieval protocol, but experimental WHOIS++ access is also available.

The core protocols used by the ASF Freeware distribution are HTTP and Z39.50, with GILS records encoded in XML as the standard metadata format. WHOIS++ and RFC 1913 centroids are also supported, but the Common Indexing Protocol (RFC 2651, RFC 2652) is not.
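The idea behind RFC 1913 centroids is that each WHOIS++ server publishes a summary of the words appearing in each attribute of its index, so that an index server can decide which servers are worth querying and refer the client to them. The sketch below models only that idea in memory; it does not implement the RFC 1913 wire format, and the server names and records are hypothetical.

```python
import re
from collections import defaultdict


def tokens(text):
    """Lower-case alphanumeric words, roughly as an indexer might tokenise."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_centroid(records):
    """Map each attribute name to the set of unique words seen in it."""
    centroid = defaultdict(set)
    for record in records:
        for attribute, value in record.items():
            centroid[attribute.lower()] |= tokens(value)
    return dict(centroid)


def route(attribute, query, centroids):
    """Names of servers whose centroid contains every query word for the attribute."""
    words = tokens(query)
    return sorted(name for name, centroid in centroids.items()
                  if words and words <= centroid.get(attribute.lower(), set()))


# Hypothetical servers and records.
centroids = {
    "asf.example.org": build_centroid([{"Title": "Wetlands policy review"}]),
    "roads.example.org": build_centroid([{"Title": "Wetland birds"},
                                         {"Keywords": "policy"}]),
}
print(route("title", "policy", centroids))
```

A centroid match only shows that a server might hold a matching record (the words could come from different records), so the referred servers must still be queried.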

No additional software is required to get a server up and running with the ASF Freeware package.

The ASF Freeware package is essentially a Web crawler, like ht://Dig, and so is not immediately suitable for use in the subject gateway context. Unlike many other robot-based indexing packages, however, it features a standard metadata format, which would make it possible to introduce records produced by human cataloguers alongside those generated by the robot-based indexing process.

Similarly, use of Z39.50 and WHOIS++ servers means that the ASF Freeware package can be integrated into existing Z39.50 and WHOIS++ based systems.

Since the Z39.50 server sits in between the end user and the system (in normal operation), there may be problems with high volume usage. There is no indication that this has been tested, and no high volume sites are known to be using the ASF Freeware package.

As with early versions of Harvest, complete external packages (Apache, Pavuk, Isearch and Zap) are included - rather than having the installer fetch them separately.

This is unfortunate, since it creates all manner of problems for the ASF software maintainers, and for anyone wishing to extend the software. It is also misleading, since the ASF Freeware developers (in their announcements and release notes) effectively claim credit for this work, which has been done by other people. Only a tiny fraction of the ASF Freeware distribution is actually new code. To their credit, the ASF developers have taken pains to keep their versions of Apache et al. separate and distinct by keeping them within the ASF directory structure.

The use of shell scripts in the ASF CGI based admin system is a major cause for concern on security grounds - but in normal operation this area is only accessible to people with admin privileges on the server. Anyone operating an ASF server should take care who they grant admin privileges to, and the scripts themselves should be rewritten - e.g. in Perl with tainted variable checking.

The ASF Freeware distribution shows us how existing packages may readily be combined to form a complete resource discovery system. However, the major areas of functionality in (for example) ROADS are not represented - e.g. Web based editing, link checking, 'what's new' and subject listing breakdowns.

Martin Hamilton, LUT

TF-CHIC (Task Force - Cooperative Hierarchical Indexing Coordination) was a TERENA-funded task force concerned with the co-ordination of harvesting and indexing network resources. Work in the task force built upon existing standards and technologies, such as those employed in Harvest and the DESIRE and ROADS projects.

The task force spawned the CHIC-Pilot project that set up a pilot distributed indexing service based on WHOIS++, Harvest, ROADS and Z39.50 technology. The project ran from the end of 1997 to the Summer of 1998, and its results were later fed back into the ROADS software development.

CHIC-Pilot was organised under TF-CHIC, a task force co-ordinated and funded by the Trans-European Research and Education Networking Association (TERENA).

The CHIC-Pilot architecture is specifically geared towards distributed indexing, to the extent that it incorporates a layer of functionality (the Gatherer) specifically for this. By contrast, the MIA model does not concern itself with the origin of the data offered by the Provider to the other layers.

CHIC-Pilot never managed to get to the point of using centroids from the various Indexers included - instead a simple hack was used whereby the chic-search.pl script connected to a WHOIS++ server which returned referrals to each of the WHOIS++ servers (including application level gateways) which it was aware of.

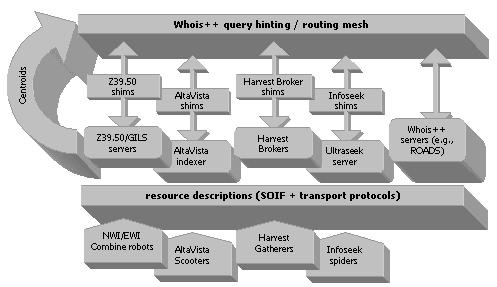

Figure 2.4: CHIC-Pilot overview (Valkenburg, et al. 1998)

The CHIC-Pilot architecture is based on three layers:

Broker (CGI script chic-search.pl) - is responsible for interacting with the end user, sending their queries to the Indexer using WHOIS++ (RFC1835), and collating the results.

Indexer (various) - collects resource descriptions from the Gatherer, indexes them, and makes the indexes available for searching by the Broker. The Indexer is required to implement the WHOIS++ protocol, and may also support WHOIS++ centroids (RFC1913) or Common Indexing Protocol (RFC2651) Tagged Index Objects (RFC2653).

Gatherer (various) - creates resource descriptions by visiting URLs and summarising their contents, and makes these available for fetching by the Indexer. The Gatherer is required to support the SOIF [harvest] metadata format for information exchange with the Indexer.
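The Broker's job of sending one query to several Indexers and collating the answers, combined with the referral shortcut described earlier (a single WHOIS++ server that simply refers the Broker to every server it knows about), can be sketched as follows. This is not chic-search.pl: the servers are in-memory dictionaries with hypothetical names, no WHOIS++ traffic is generated, and collating is reduced to removing duplicate URIs while preserving order.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for WHOIS++ Indexers (native servers or gateways).
INDEXERS = {
    "whois.example.org": [
        {"Title": "Distributed indexing", "URI": "http://a.example/1"},
    ],
    "harvest-gw.example.org": [
        {"Title": "Indexing with Harvest", "URI": "http://b.example/2"},
        {"Title": "Distributed indexing", "URI": "http://a.example/1"},
    ],
}


def referral_server(term):
    """Refer the Broker to every server known about, ignoring the query."""
    return list(INDEXERS)


def query_indexer(server, term):
    """Simulate a title search on one Indexer."""
    return [r for r in INDEXERS[server] if term.lower() in r["Title"].lower()]


def broker_search(term):
    servers = referral_server(term)
    # Query all referred servers in parallel; map() keeps the server order.
    with ThreadPoolExecutor(max_workers=max(len(servers), 1)) as pool:
        result_lists = list(pool.map(lambda s: query_indexer(s, term), servers))
    # Collate: keep the first occurrence of each URI.
    seen, collated = set(), []
    for results in result_lists:
        for record in results:
            if record["URI"] not in seen:
                seen.add(record["URI"])
                collated.append(record)
    return collated


print(broker_search("indexing"))
```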

A fuller description of the CHIC-Pilot architecture is available in a paper presented at the 1998 TERENA Networking Conference (Valkenburg et al., 1998).

Note that although CHIC-Pilot was conceived for distributed indexing and searching based on 'robot'-generated metadata, this is not actually a requirement. The metadata that is manipulated could have been created by a human cataloguer rather than by machine parsing.

The top four layers of MIA are essentially compressed into the CHIC-Pilot Broker. This is feasible because of the use of lowest-common-denominator 'standards' in the form of WHOIS++ and SOIF, and means that elaborate mechanisms to incorporate support for multiple standards in these areas are not necessary in the architecture itself. However, as a result it would be non-trivial to replace either of these standards.

The Providers are native WHOIS++ servers or WHOIS++ gateways to other systems (e.g. UltraSeek).

The CHIC-Pilot architecture is built around HTTP and HTML for interaction with the end user.

WHOIS++ is used for interaction between the Broker and the Indexers, and SOIF for exchanging index information between the Indexer and the Gatherer.
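SOIF, the Harvest Summary Object Interchange Format, is a simple attribute-value format in which every value is preceded by its length in bytes, so values may safely contain newlines. The minimal parser below assumes the common layout `@TEMPLATE { URL`, one `Name{size}:<TAB>value` entry per attribute, and a closing `}`. It is an illustrative sketch rather than the Harvest or ROADS code, and it performs no error recovery.

```python
import re

# '@TEMPLATE { URL' followed by a newline.
HEADER = re.compile(rb"@(\S+)\s*\{\s*(\S+)[ \t]*\n")
# 'Attribute-Name{size}:' followed by a tab; the value is the next <size> bytes.
ATTRIBUTE = re.compile(rb"([^{}\n]+)\{(\d+)\}:\t")


def parse_soif(data):
    """Parse a byte string of SOIF objects into a list of dictionaries."""
    objects, pos = [], 0
    while True:
        header = HEADER.search(data, pos)
        if header is None:
            break
        pos = header.end()
        attributes = {}
        while not data.startswith(b"}", pos):
            match = ATTRIBUTE.match(data, pos)
            if match is None:
                raise ValueError(f"malformed SOIF at byte {pos}")
            size = int(match.group(2))
            value = data[match.end():match.end() + size]
            attributes[match.group(1).decode().strip()] = value.decode("utf-8")
            pos = match.end() + size
            if data.startswith(b"\n", pos):
                pos += 1  # newline that terminates the value
        pos += 1  # skip the closing '}'
        objects.append({"template": header.group(1).decode(),
                        "url": header.group(2).decode(),
                        "attributes": attributes})
    return objects


SAMPLE = (b"@FILE { http://www.example.org/\n"
          b"Title{16}:\tExample document\n"
          b"Description{11}:\tline1\nline2\n"
          b"}\n")
records = parse_soif(SAMPLE)
print(records)
```

Because values are length-counted rather than delimited, the two-line `Description` value is read correctly without any escaping.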

Although no additional software beyond chic-search.pl is required in order to operate the CHIC-Pilot search interface, this presupposes that a network of WHOIS++ servers is available to query. To use the WHOIS++ gateways to Harvest, Z39.50 and UltraSeek, the operator must have these servers running behind the scenes.

The script chic-search.pl is open source software distributed under the terms of the GNU General Public License/Perl Artistic License (i.e. the standard Perl Terms and Conditions).

More information on the CHIC-Pilot (and a cross-search demonstration) can be found at: http://www.terena.nl/projects/chic-pilot/

Valkenburg, P., Beckett, D., Hamilton, M., Wilkinson, S., 1998, Standards in the CHIC-Pilot Distributed Indexing Architecture. TERENA Networking Conference '98, Dresden, 7 October. http://www.terena.nl/projects/chic-pilot/tnc/paper.html

Arthur N. Olsen, NetLab

The OCLC Cooperative Online Resource Catalog (CORC) builds on the experience of earlier OCLC initiatives like NetFirst and InterCat. CORC is based on the same philosophy as the main OCLC union catalog (WorldCat). Guest access to CORC is available at the following URL: http://www.oclc.org/oclc/corc/index.htm

The organisation responsible for CORC is the Online Computer Library Center (OCLC).

CORC is primarily a union catalogue of Web-based electronic resource descriptions that parallels WorldCat. The system includes a number of innovative features to assist in cataloguing new Web sites or pages. The system provides harvested documents with suggested subject keywords and classification numbers (DDC). Link checking is provided to ensure the currency of URLs and automated content checking assists in determining the stability of the resource. The participating libraries can use the system to construct and maintain lists of Web resources in specific areas - called Pathfinders in OCLC parlance.

Description in CORC can be done in both MARC 21 and DC formats, and data can be imported and exported in MARC or RDF/XML format. Internally, the bibliographic data is held in ASN.1/BER format.
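For readers unfamiliar with Dublin Core expressed in RDF/XML, the sketch below shows the general pattern such an export follows: an `rdf:Description` about the resource's URL containing one element per DC value. It is an illustration built on Python's standard `xml.etree.ElementTree`, not CORC's actual export code or record structure, and the sample record is invented.

```python
import xml.etree.ElementTree as ET

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
DC_NS = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("rdf", RDF_NS)
ET.register_namespace("dc", DC_NS)


def dc_to_rdfxml(about, record):
    """Serialise {element: [values]} as a simple Dublin Core RDF/XML description."""
    root = ET.Element(f"{{{RDF_NS}}}RDF")
    description = ET.SubElement(root, f"{{{RDF_NS}}}Description",
                                {f"{{{RDF_NS}}}about": about})
    for element, values in record.items():
        for value in values:  # repeatable elements become repeated XML elements
            ET.SubElement(description, f"{{{DC_NS}}}{element}").text = value
    return ET.tostring(root, encoding="unicode")


xml_text = dc_to_rdfxml("http://www.example.org/",
                        {"title": ["Example gateway"],
                         "subject": ["Libraries", "Metadata"]})
print(xml_text)
```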

The size of the CORC database is currently (March 2000) about 200,000 records. Background information about CORC has mostly been published in the OCLC Newsletter (OCLC, 1999).

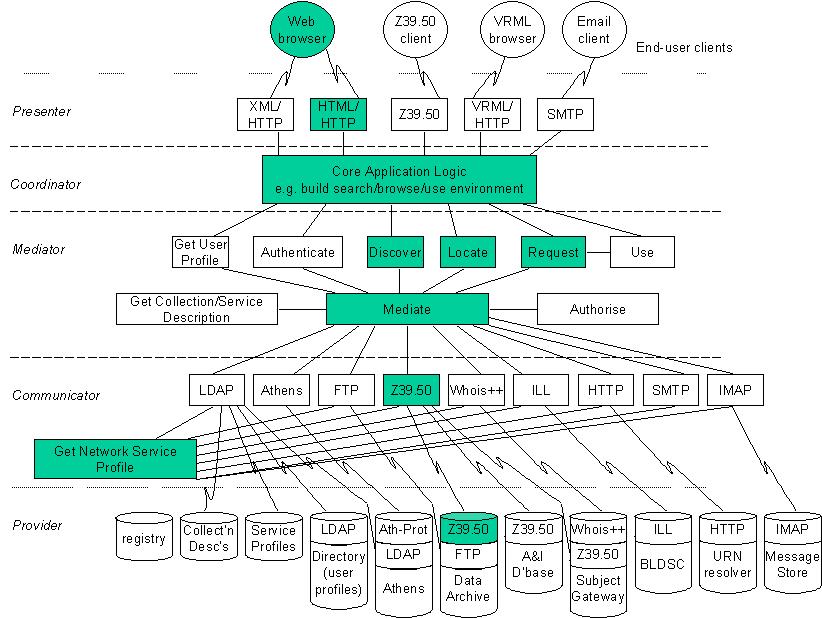

Figure 2.5: CORC related to MIA

User access is via standard Web browsers. Z39.50 access is also possible for searching.

The Coordinator layer holds the core logic for searching, cataloguing and constructing pathfinders, together with functions for automated subject indexing and classification of electronic documents.

Mediator functionality is rather limited. CORC is a union catalogue concept with a large degree of centralisation. It is reasonable to place authorisation in this layer.

The amount of communication with other systems is limited; retrieving resources for description and harvesting is based on HTTP.

The information provided is located in the central CORC database.

Metadata is held in MARC 21 and DC formats, and export in RDF/XML format is also possible. Internal representations are in ASN.1/BER format.

Subject description standards based on Dewey Decimal Classification (DDC) and Library of Congress Subject Headings (LCSH).

HTTP and Z39.50 protocols

Most of the software used in the CORC system has been developed at the OCLC Office of Research. The MANTIS toolkit has been used as a basis for constructing CORC. The software tool Kilroy is used for harvesting Internet resources. Two software tools are used for assisted subject indexing of Web documents: Scorpion for Dewey (DDC) numbers and WordSmith for subject keywords. All the software mentioned is proprietary. Licensing Scorpion, with access to the Dewey database, could be an option for providing a common browsing framework for a future Renardus service.

CORC: http://www.oclc.org/oclc/corc/index.htm

MANTIS: http://orc.rsch.oclc.org:6464/

Kilroy: http://orc.rsch.oclc.org:7080/

Scorpion: http://orc.rsch.oclc.org:6109/

Wordsmith: http://orc.rsch.oclc.org:5061/

Hickey, T.B., 2000, CORC: a system for gateway creation. Online Information Review, 24 (1), 49-53.

OCLC, 1999, CORC Project. OCLC Newsletter, 239, May/June 1999. http://www.oclc.org/oclc/new/n239/index.htm#feature

Anders Ardö, DTV

The Danish Ministry of Culture, the Danish Ministry of Research and the Danish Ministry of Education have decided together to carry out the project 'Denmark's Electronic Research Library' (DEF). The National Budget for 1998 provided a total of DKK 200 million for the project, distributed over the years 1998-2002.

DEF is established through a network of research libraries, information centres and public libraries with a view to creating Denmark's Electronic Library.

It is important to stress that DEF should emerge as one large, coherent, virtual information system as a result of the network's linking of the research libraries' and other information centres' services, including for example national licence agreements. The overall effect is gained by complying with and following standards: for communication, for search support, for terminology, for registration of document description and representation.

The Liaison Committee works out the framework for DEF and consists of representatives for the three ministries and the Danish National Library Authority as well as the chairman of the Steering Committee. The Steering Committee consists of 10 representatives for relevant user groups. They have the overall responsibility for turning the project into reality. The Danish National Library Authority acts as secretariat to the Steering Committee and is responsible for the actual running of the project.

Denmark's 12 largest and 44 medium-sized research libraries and the Danish National Library Authority are the primary forces in the DEF project. In due course, however, more than 200 small research libraries and the country's other information suppliers and research institutes will become part of DEF.

There are three main architectural components in DEF:

DEFkey - a global authorisation system that should ensure access to all relevant DEF services, irrespective of location, through one login action (still under investigation/development).

DEFcat - a virtual union library catalogue of the individual catalogues of all participating research libraries. It is based on searching each catalogue using Z39.50 and the common DanZig profile (in testing phase).

DEFportal - gateway to Internet resources. This component is the most developed and it is (presently) made up of 6 individual services, where all are using similar metadata profiles but covering different subject areas:

1. Vejviser - Covers resources at Danish research libraries. Based on metadata in Web pages that are harvested to produce a central database once a week (in production since 1999-10-01).

2. Industrial economics, descriptive statistics - a consortium with 4 members

3. Virtual music library - a consortium with 5 members

4. Medical clinical information - a consortium with 4 members

5. Food technology - a consortium with 4 members

6. Energy technology - a consortium with 3 members

Most consortia members are research libraries. The subject specific portals (2-6) have just started their work and are planned to go public by the end of 2000. They base their metadata definitions on the basic Vejviser profile.

All of the above (1-6) also provide, in addition to resource descriptions conforming to the metadata format, a full-text database of harvested Web pages relevant to each service.
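Vejviser's approach of embedding metadata in Web pages and harvesting it centrally can be illustrated by extracting Dublin Core `<meta>` elements from a page. The sketch assumes the widespread `DC.element` naming convention for meta tags; the actual Vejviser metadata profile (in Danish) and the Combine harvester are not reproduced here, and the sample page is invented.

```python
from html.parser import HTMLParser


class DCMetaExtractor(HTMLParser):
    """Collect <meta name="DC.xxx" content="..."> elements from an HTML page."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag and attribute names for us.
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = attributes.get("name") or ""
        content = attributes.get("content")
        if name.lower().startswith("dc.") and content:
            element = name[3:].lower()  # e.g. "DC.Subject" -> "subject"
            self.fields.setdefault(element, []).append(content)


def harvest_dc(html):
    parser = DCMetaExtractor()
    parser.feed(html)
    parser.close()
    return parser.fields


page = ('<html><head><title>Example</title>'
        '<meta name="DC.Title" content="Example research library">'
        '<meta name="DC.Subject" content="libraries">'
        '<meta name="DC.Subject" content="Denmark" />'
        '<meta name="keywords" content="ignored"></head><body></body></html>')
print(harvest_dc(page))
```

A harvester would run something like `harvest_dc` over each fetched page and load the resulting fields into the central database, while the full text of the same pages could feed the separate full-text database.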

The basic architecture relies on underlying structured data being provided through a standardized search and retrieval protocol (Z39.50) with a few basic profiles (DanZig, Portal). On top of this, user interfaces are, or can be, built using either Web to Z39.50 gateways or native Z39.50 clients. Discussions and implementations of user interfaces include:

virtual union catalogues

vejviser

each subject specific portal individually

vejviser and all subject portals in a seamless union

the above plus virtual union catalogue

other, more advanced interfaces integrating further sources such as article databases (DADS), Web indexes, etc.

The planned seamless service brokering the 6 portal services is described here.

Figure 2.6: DEF related to MIA

The Presenter, Coordinator, Mediator and Communicator parts are presently being developed, and the first version will be ready by 2000-07-01. All of these functions are implemented in one component, a Web to Z39.50 gateway called ZAP.

The Web to Z39.50 gateway presents the user with HTML pages and forms generated on the fly from templates.

The HTML templates implement the coordinator functions.

No real mediation takes place, apart from the conversion from HTML forms to valid Z39.50 queries and decoding of returned records into HTML structures.

Based on internal tables reflecting the various Z39.50 profiles of the data providers this part provides Z39.50 access to databases.

Z39.50 databases. Each service provides two separate databases, one with metadata for resources and one with harvested full text. All use the same basic metadata profile (with local additions).
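The conversion from HTML form fields to valid Z39.50 queries mentioned above can be sketched as building a query in Prefix Query Format (PQF), the notation used by the YAZ toolkit, with Bib-1 Use attributes (1=4 title, 1=1003 author, 1=21 subject, 1=1016 any). The form field names, the choice to AND all non-empty fields and the removal of embedded double quotes are simplifying assumptions; this is not how ZAP's templates are actually written.

```python
# Bib-1 Use attribute values for the form fields this sketch understands.
BIB1_USE = {"title": 4, "author": 1003, "subject": 21, "any": 1016}


def form_to_pqf(form):
    """Turn {field: value} from a search form into a PQF query string."""
    clauses = []
    for field, value in form.items():
        value = value.strip()
        if not value:
            continue  # empty form boxes are ignored
        if field not in BIB1_USE:
            raise ValueError(f"no Bib-1 mapping for form field {field!r}")
        term = value.replace('"', "")  # crude: drop quotes rather than escape them
        clauses.append(f'@attr 1={BIB1_USE[field]} "{term}"')
    if not clauses:
        raise ValueError("empty query")
    # PQF operators are prefix and binary, so n clauses need n-1 '@and's.
    return "@and " * (len(clauses) - 1) + " ".join(clauses)


print(form_to_pqf({"title": "electronic library", "author": "", "subject": "metadata"}))
```

Because each provider database in the portal services shares the same basic profile, a single mapping table of this kind could in principle serve all of them, with local additions handled by extra entries.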

DEF is based on open standards. All portal services base their metadata definitions on Dublin Core. Furthermore they co-ordinate their definitions and maintain a core set of metadata fields. Each participating service can use local additions.

DEFcat data is based on MARC-records.

Involved profiles and metadata definitions:

Vejviser metadata profile (in Danish)

subject portal metadata profiles (in Danish)

DanZig library catalogue Z39.50 profile

Vejviser Z39.50 profile (in Danish)

DEF relies on HTTP and Z39.50.

DEF Internet resource toolkit which includes:

ZAP - a Z39.50 to Web gateway

Z'Mbol - a Z39.50 database system

Cataloging user interface

Combine harvester

Import/export utilities

Common integrated configuration utility

The implementation is being done with a mixture of free and commercial software. Within the project, a 'DEF Internet resource toolkit' is being implemented as modular software based on separate components glued together with configuration files. Most components are, or will be, freely available.

Combine http://www.lub.lu.se/combine/

Danmarks Elektroniske Forskningsbibliotek (DEF): http://www.deflink.dk/english/

DanZig library catalogue Z39.50 profile: http://www.bs.dk/danzig/profil.htm

DEF vejviser: http://www.deff.dk/vejviser/

Subject portal metadata profiles (in Danish): http://dtv239.dtv.dk/fagportaler/metadataprofilerna.htm

Vejviser metadata profile (in Danish): http://dtv239.dtv.dk/format/formatbeskrivelse.htm

Vejviser Z39.50 profile (in Danish): http://dtv239.dtv.dk/lister/indexdata1.html

ZAP: http://www.indexdata.dk/pub/yaz/development/zap.tar.gz

Z'Mbol: http://www.indexdata.dk/zmbol/

Arthur N. Olsen, NetLab

An integrated hybrid digital library system has been developed mainly for the academic sector in the German province of Nordrhein-Westfalen (North Rhine-Westphalia). Guest users can also access the system at the following URL: http://www.digibib-nrw.de/Digibib

The development project phase is now over, and the organisation now responsible for the system is HBZ (Online Utility and Service Center for Academic Libraries in North Rhine-Westphalia). Planning started in February 1998, with Bielefeld University Library as project co-ordinator. Two German companies - IHS technologies and Axion - have been responsible for software integration and development. The digital library system has been largely constructed utilising commercial software packages.

Background information regarding the goals and systems design of the NRW system can be found in two documents dating from 1998:

Konzept (Die Digitale Bibliothek NRW, 1998)

Technisches Konzept (Groos et al., 1998).

A brief overview of the project and technical description of this digital library system is presented in Habermann and Heidbrink (1999).

The functions that have been implemented are:

Authentication via username and password

Cross-searching of library catalogues, bibliographic databases, Internet resources, electronic periodicals and full text documents. Searching some systems is based on the Z39.50 protocol while in other cases unified searching of proprietary systems is enabled

Integration with various document delivery systems for printed items

Billing and payment procedures
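The cross-searching function listed above can be illustrated with a minimal Python sketch of the fan-out step: one query is sent in parallel to several search targets and the answers are collected per target. The target functions and the `cross_search` name are hypothetical; in the NRW system this work is done by commercial components whose internals are not described in the sources used for this report.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# A search target takes a query string and returns a list of result records.
# Real targets would wrap Z39.50 or proprietary clients; these are stand-ins.
Target = Callable[[str], List[dict]]


def cross_search(query: str, targets: Dict[str, Target],
                 timeout: float = 10.0) -> Dict[str, List[dict]]:
    """Send one query to every target in parallel and collect results per target."""
    results: Dict[str, List[dict]] = {}
    with ThreadPoolExecutor(max_workers=max(1, len(targets))) as pool:
        futures = {name: pool.submit(fn, query) for name, fn in targets.items()}
        for name, future in futures.items():
            try:
                results[name] = future.result(timeout=timeout)
            except Exception:
                # A failing or slow target contributes no hits instead of
                # aborting the whole search.
                results[name] = []
    return results


# Usage with two hypothetical targets, one of which is unavailable.
def catalogue(q: str) -> List[dict]:
    return [{"title": f"{q} in the catalogue"}]


def unavailable(q: str) -> List[dict]:
    raise ConnectionError("target down")


print(cross_search("mathematics", {"opac": catalogue, "db": unavailable}))
# {'opac': [{'title': 'mathematics in the catalogue'}], 'db': []}
```

Isolating each target in its own future means a failing database yields an empty list rather than aborting the whole search; note that the executor still waits for any running calls to finish before the function returns.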

The NRW system also supports access to electronic documents published by member organisations. The metadata describing these documents is collected by a robot operated by NRW and, after linguistic processing with the MILOS II system for enhanced retrieval, loaded into the BRS information retrieval (IR) system.
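How the NRW robot extracts metadata is not documented here. Purely as an illustration, the following Python sketch assumes that documents embed Dublin Core in HTML `<meta name="DC.xxx" content="...">` tags (the convention described in RFC 2731) and collects those elements with the standard library HTML parser. `DublinCoreMetaParser` and `extract_dc` are hypothetical names.

```python
from html.parser import HTMLParser
from typing import Dict, List


class DublinCoreMetaParser(HTMLParser):
    """Collect Dublin Core elements embedded as <meta name="DC.xxx" content="...">."""

    def __init__(self) -> None:
        super().__init__()
        self.elements: Dict[str, List[str]] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").strip()
        content = (attr.get("content") or "").strip()
        # Keep only DC.* names; lower-case the element so that "DC.Title"
        # and "DC.title" are stored under the same key.
        if name.lower().startswith("dc.") and content:
            self.elements.setdefault(name[3:].lower(), []).append(content)


def extract_dc(html: str) -> Dict[str, List[str]]:
    parser = DublinCoreMetaParser()
    parser.feed(html)
    parser.close()
    return parser.elements


page = ('<html><head><meta name="DC.Title" content="Digital Libraries">'
        '<meta name="DC.Creator" content="A. Author"></head></html>')
print(extract_dc(page))
# {'title': ['Digital Libraries'], 'creator': ['A. Author']}
```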

Figure 2.7: Die Digitale Bibliothek NRW related to MIA

The user interface to the digital library is provided through standard browsers such as Netscape and Internet Explorer.

Authorisation, maintenance of user profiles and user services tailored to the individual user and site are provided in this layer.

Cross-searching and harmonisation of search results, provided by the commercial products 'Query builder' and 'WebPAC', can be placed in this layer.

Access to some resources is based on the Z39.50 protocol. A link to interlibrary loan (ILL) services is also provided.

The Portal NRW brokers to a variety of library catalogues, databases and electronic documents, both local to the portal site and remote.

The metadata for electronic documents conforms to the Dublin Core Metadata Element Set. The co-operating libraries have developed a profile for use in the NRW system (Sprick et al., 1999).
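Conformance to a metadata profile of this kind can be checked mechanically. The sketch below is hypothetical: the `MANDATORY` and `ALLOWED` element sets are invented for illustration and do not reproduce the rules of the NRW profile.

```python
from typing import Dict, List, Set

# Hypothetical profile for illustration only; NOT the NRW element rules.
MANDATORY: Set[str] = {"title", "creator", "identifier"}
ALLOWED: Set[str] = MANDATORY | {"subject", "description", "publisher",
                                 "date", "type", "format", "language"}


def check_record(record: Dict[str, List[str]]) -> List[str]:
    """Return a list of problems; an empty list means the record conforms."""
    problems = [f"missing mandatory element: {e}"
                for e in sorted(MANDATORY) if not record.get(e)]
    problems += [f"element not in profile: {e}"
                 for e in sorted(record) if e not in ALLOWED]
    return problems


print(check_record({"title": ["Topology"], "creator": ["Smith, J."],
                    "rights": ["Free"]}))
# ['missing mandatory element: identifier', 'element not in profile: rights']
```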

The main protocols involved are HTTP and Z39.50.

As mentioned above, the NRW digital library system has been constructed using several software products from commercial companies:

Query Server (Dataware)

WebPAC (Epixtech)

BRS/Search (Dataware)

MILOS II software for verbal indexing based on automated linguistic processing and dictionaries

None of the software products mentioned is available under the GNU General Public Licence. Providing cross-searching capabilities for many heterogeneous European information gateways in the Renardus service could make it necessary to use commercial products such as Query Server.

Digital Library Nordrhein-Westfalen: http://www.digibib-nrw.de/Digibib

IHS technologies: http://www.ihs.de/html/index.htm

Axion: http://www.axion-gmbh.de/

Dataware: http://www.dataware.com/technology/

Epixtech WebPAC: http://www.amlibs.com/product/htmlwebpac.htm

Die Digitale Bibliothek NRW, 1998, Konzept. Bielefeld: Bibliothek der Universität Bielefeld. http://www.ub.uni-bielefeld.de/digibib-nrw/konzept.htm

Groos, M., Hardt, J., Nold, A., Pieper, D., Seiffert, F., Summann, F., 1998, Die Digitale Bibliothek NRW - Technisches Konzept. Bielefeld: Bibliothek der Universität Bielefeld. http://www.ub.uni-bielefeld.de/digibib-nrw/techkon.htm

Habermann, M., Heidbrink, S., 1999, Die Digitale Bibliothek NRW - Chronologie, Projektverlauf und Technische Beschreibung. B.I.T. Online, 2/1999. http://www.b-i-t-online.de/archiv/1999-02/nachrich/haberm/artikel.htm

Sprick, A., Tröger, B., Hoffmann, L., Hupfer, G., 1999, Das Metadatenformat der Collect-Datenbank der Digitalen Bibliothek NRW. Cologne: Hochschulbibliothekszentrum des Landes Nordrhein-Westfalen (HBZ). http://www.hbz-nrw.de/DigiBib/dokumente/allg/meta.html

Arthur N. Olsen, NetLab

The European Treasury Browser (ETB) is a major project co-ordinated by European Schoolnet and funded by the Information Society Technologies programme of the European Commission. The Swedish Ministry of Education / Committee for the European Schoolnet is the co-ordinating partner. The project builds on work done in the earlier European Universal Classroom project and on the general collaboration and development work carried out under the auspices of European Schoolnet.

The project has just started, so the following description is based on the project proposal (from Annex 1: Description of Work):

Objective:

"The objective of the project will be to build a Web educational resource Metadata Networking and Quality Processing infrastructure for schools in Europe. This infrastructure aims to link together existing national repositories, encourage new publication, and provide a reliable level of quality and structure. There is no intention of reinventing national initiatives in this area, instead the objective is to add value to these systems while providing an interoperable layer to help teachers and students locate resources Europe wide. The proposal aims to build a simple yet effective distributed "Schoolnet Information Space". The material will be managed and organised according to defined rules in order to easily locate relevant resources. The educational user wants access to all data repositories, whatever indexing method is used or in whatever system they are supplied. The user, even in the world of decentralised, non-homogeneous data pools, demands from the system-developer to ensure that the following information requirements are met: The user should obtain only the most relevant documents, but if possible, also all of the most relevant ones according to his own information needs. This rich information space can form a reserve of educational material classified according to subject and resource type for use by teachers in preparing lessons, and by students for reference and research. It can contain guidelines and best practices for pedagogic reference and other material. An editorial interface aims to ensure a high quality of information and review. The project will enable and encourage trans-cultural and trans-national co-operation and communication and will enable individuals (students, teachers, administrators, parents) and workgroups to produce, handle, retrieve and communicate information in the languages of their choice, and to combine information resources from different regions and countries, and of different levels. 
It builds on existing or foreseen results of several projects: EUN MM1010, EUC and others. Deeper roots can be found in other projects like DESIRE, GEM and others".

Architecture Elements:

"The key to success will be the ease of use of tools for publishing resources to the information space and for locating relevant information in that space. It is paramount that the development take into account existing national systems and technical infrastructure available within the schools. It is therefore assumed that material is published at the schools or through national or regional repositories. It is the metadata describing the resources, and the multilingual subject classification and thesaurus that underlie the proposed European Treasury Browser system. At the technical level the planned components are as follows.

A Web enabled multilingual educational subject classification and thesaurus to aid accessing and providing content.

An intelligent data-entry system for the end-user including a metadata authoring tool with gateways to existing metadata systems, and a quality assurance procedure

A dynamic metadata network to allow the flow of information across the Internet.

A metadata registry with an intuitive search interface (client).

Architecture elements are identified as follows:

European Subject Thesaurus and Classification

Intelligent Metadata Authoring Tools

Metadata Network Transport.

By harvesting Web sites (PULL)

By searching repositories remotely (Z39.50)

By transferring metadata records to the registry (PUSH)

European Treasury Browser Metadata Registry"
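The PUSH and PULL transport modes quoted above can be pictured with a toy registry in Python. `MetadataRegistry` and its methods are hypothetical names; records are assumed to be dictionaries of Dublin Core elements keyed on their first identifier, and the Z39.50 mode (searching repositories in place rather than copying records) is not modelled.

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, List[str]]


class MetadataRegistry:
    """Toy central registry keyed on each record's first identifier."""

    def __init__(self) -> None:
        self._records: Dict[str, Record] = {}

    def push(self, record: Record) -> None:
        # PUSH: a repository sends a record; a newer copy replaces the old one.
        identifiers = record.get("identifier")
        if not identifiers:
            raise ValueError("record has no identifier")
        self._records[identifiers[0]] = record

    def pull(self, harvest: Callable[[], Iterable[Record]]) -> int:
        # PULL: the registry runs a harvester (e.g. a Web robot) and stores
        # whatever it returns; the number of records stored is returned.
        count = 0
        for record in harvest():
            self.push(record)
            count += 1
        return count

    def search(self, word: str) -> List[Record]:
        # Naive case-insensitive substring match over titles.
        word = word.lower()
        return [r for r in self._records.values()
                if any(word in t.lower() for t in r.get("title", []))]


reg = MetadataRegistry()
reg.push({"identifier": ["http://example.org/a"], "title": ["Fractions for beginners"]})
n = reg.pull(lambda: [{"identifier": ["http://example.org/b"], "title": ["Plant biology"]}])
print(n, len(reg.search("fractions")))  # 1 1
```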

As the above material shows, the goals and objectives of the ETB project are in many ways similar to those of Renardus. There is a focus on interoperability, metadata standards, multilingual access and harmonising access through controlled vocabularies. A major difference is the amount of development work planned for the construction of a new multilingual thesaurus. Development of a central ETB metadata registry and search client is envisioned as a first step, followed by expansion to a distributed registry system. Some architectural decisions appear to have been made already in the proposal; one of these is that there will be at least one union catalogue of metadata, with the possibility of replication.

In the following, the architecture suggested in the proposal is related to the MODELS Information Architecture (MIA). The diagram below places functions from ETB into a framework developed by UKOLN in the document An MIA view of DNER portals (Powell, 1999). Relating the architectural elements of this project proposal to the more abstract MIA model is somewhat difficult. The components of ETB that can be related to this model are shaded.

Figure 2.8: ETB related to MIA

In ETB, this layer will consist of purpose-built WWW routines for submission, thesaurus maintenance and searching, supplemented by standard Z39.50 clients for searching.

Most of the core functionality of the proposed system can be related to this layer:

Metadata Registry Database

Import of metadata into the database through harvesting, metadata authoring tools and network distribution

Editorial control system

Thesaurus module

Searching services

The need for complex mediation services is limited; it seems reasonable to relate the envisioned tools for cross-lingual information retrieval to this layer.

The ETB proposal calls for the Z39.50, NNTP and HTTP protocols, all of which can be related to this layer.

The services provided are limited to the local database and remote metadata registries within the realm of the ETB project.
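The proposal does not specify how cross-lingual retrieval will work. One common approach, sketched here under that assumption, is to expand a query term into its equivalents in other languages via a multilingual thesaurus. The `THESAURUS` entries and the `expand_query` function are illustrative only and are not ETB data.

```python
from typing import Dict, Set

# Hypothetical multilingual thesaurus fragment: concept ID -> language -> term.
THESAURUS: Dict[str, Dict[str, str]] = {
    "C001": {"en": "mathematics", "de": "Mathematik",
             "fr": "mathématiques", "sv": "matematik"},
    "C002": {"en": "biology", "de": "Biologie",
             "fr": "biologie", "sv": "biologi"},
}


def expand_query(term: str) -> Set[str]:
    """Return the term plus the labels, in every language, of each matching concept."""
    needle = term.lower()
    expanded = {term}
    for labels in THESAURUS.values():
        if needle in (label.lower() for label in labels.values()):
            expanded.update(labels.values())
    return expanded


print(sorted(expand_query("Mathematik")))
# ['Mathematik', 'matematik', 'mathematics', 'mathématiques']
```

Terms not found in the thesaurus are passed through unchanged, so the expanded query never loses the user's original word.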

The following standards are envisioned as part of the ETB project:

Dublin Core metadata with extensions

Metadata distributed in RDF/XML syntax

The thesaurus that is part of the project will follow the relevant standards from ISO
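A Dublin Core record in RDF/XML syntax can be produced with Python's standard `xml.etree.ElementTree` module. This sketch covers only the unqualified Dublin Core element set (namespace http://purl.org/dc/elements/1.1/); the ETB extensions are not specified in the proposal and are not shown, and the element names passed in are assumed to be valid Dublin Core names.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
DC_NS = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("rdf", RDF_NS)
ET.register_namespace("dc", DC_NS)


def dc_to_rdfxml(about: str, record: Dict[str, List[str]]) -> str:
    """Serialise simple Dublin Core elements as one rdf:Description."""
    root = ET.Element(f"{{{RDF_NS}}}RDF")
    desc = ET.SubElement(root, f"{{{RDF_NS}}}Description",
                         {f"{{{RDF_NS}}}about": about})
    for element, values in record.items():
        for value in values:
            ET.SubElement(desc, f"{{{DC_NS}}}{element}").text = value
    return ET.tostring(root, encoding="unicode")


print(dc_to_rdfxml("http://example.org/resource/1",
                   {"title": ["Fractions for beginners"], "language": ["en"]}))
```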

In addition to HTTP for access via WWW browsers, Z39.50 is planned as a mode of access for searching. Metadata sharing, distribution and synchronisation are planned using the NNTP protocol.

The choice of software for this project has not yet been made. Reuse, modification and packaging of existing software is planned, and some decisions regarding tools have been made. Gist, an information toolkit developed by one of the partners in the ETB project, will be used to develop much of the core functionality of the metadata repository. The Combine harvesting robot developed by NetLab will probably also be used; this software, which is in the public domain, could also be used for harvesting metadata in the Renardus context.
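The proposal does not say how records will be packaged for NNTP. Assuming each metadata record travels as one news article, the sketch below builds the article text (header lines, an empty line, then the body). The `metadata_article` function and the newsgroup and sender values are hypothetical; actually posting the article, including dot-stuffing, would be left to an NNTP client.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, make_msgid


def metadata_article(newsgroup: str, sender: str, subject: str, body: str) -> str:
    """Build the text of a news article carrying one metadata record.

    Header lines come first, then an empty line, then the body; all lines end
    in CRLF. Dot-stuffing and the POST command are left to the NNTP client.
    """
    domain = sender.split("@")[-1] if "@" in sender else "localhost"
    headers = [
        ("From", sender),
        ("Newsgroups", newsgroup),
        ("Subject", subject),
        ("Date", format_datetime(datetime.now(timezone.utc))),
        ("Message-ID", make_msgid(domain=domain)),
        ("MIME-Version", "1.0"),
        ("Content-Type", "text/xml; charset=utf-8"),
    ]
    head = "\r\n".join(f"{name}: {value}" for name, value in headers)
    return head + "\r\n\r\n" + "\r\n".join(body.splitlines()) + "\r\n"


print(metadata_article("etb.metadata.test", "registry@example.org",
                       "Record r1", "<rdf:RDF/>"))
```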

European Universal Classroom: http://www.medianet.org/euc/

European Schoolnet: http://www.en.eun.org/front/actual

MODELS Information Architecture: http://www.ukoln.ac.uk/dlis/models/

Gist: http://gist.jrc.it/default/

Combine: http://www.lub.lu.se/combine/

Arthur N. Olsen, NetLab

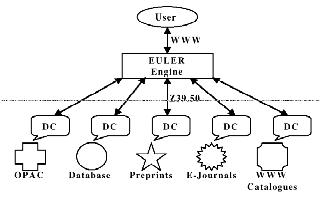

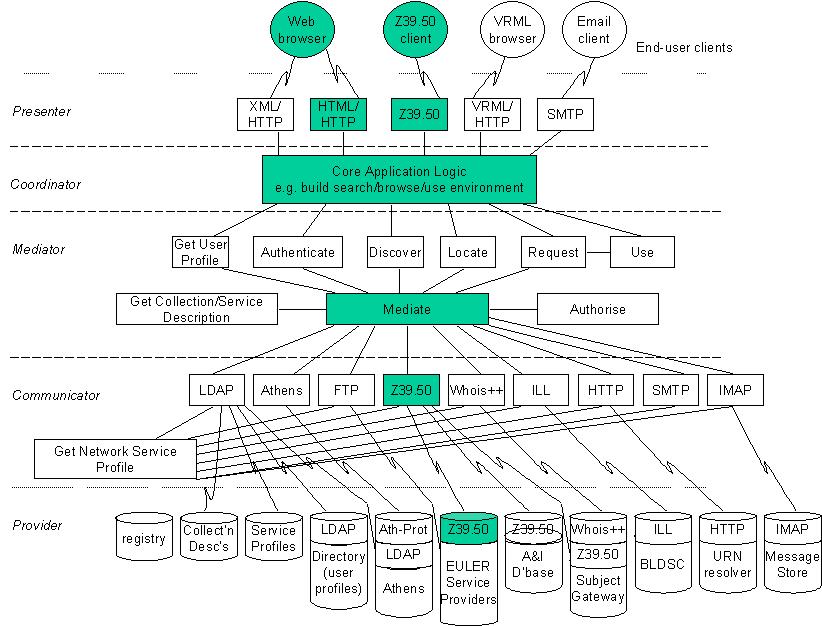

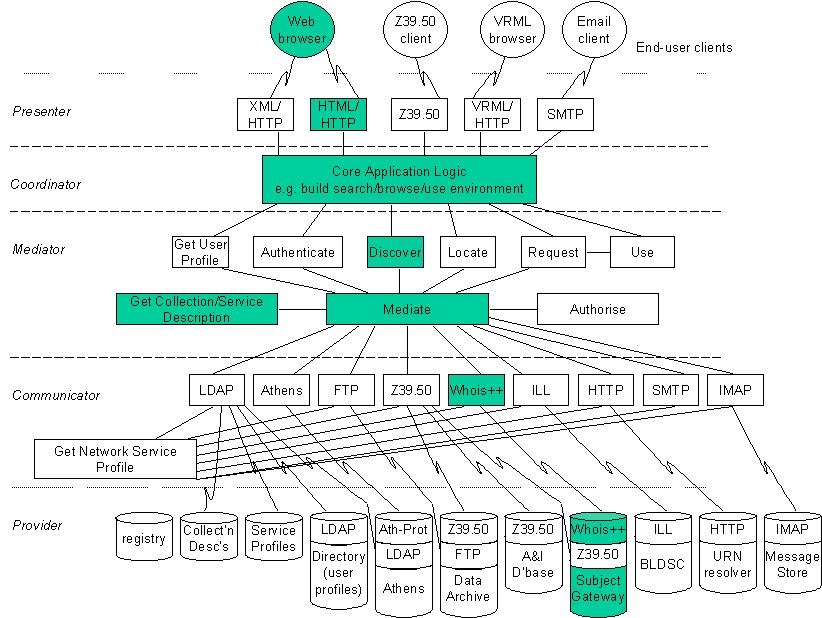

EULER (EUropean Libraries and Electronic Resources in Mathematical Sciences) is a project within the Telematics for Libraries programme of the European Union. The project started in April 1998 and is scheduled to continue until October 2000. FIZ Karlsruhe is the co-ordinator of the project; two of the partners in EULER, NetLab and SUB, are also Renardus participants.

The project has proceeded according to plan, and a trial service is already accessible at the following URL: http://zaphod.lub.lu.se/euler/engine/engine.html

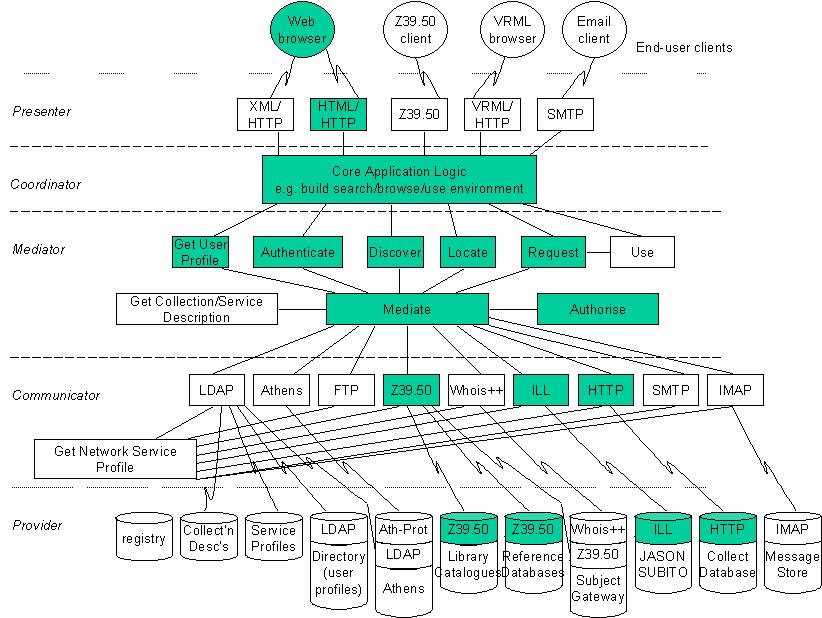

The EULER service intends to offer a "one-stop shopping site" for users interested in mathematics by integrating bibliographic databases, library online public access catalogues, electronic journals from academic publishers, online archives of pre-prints and grey literature, and indexes of mathematical Internet resources. These sources will be made interoperable through common Dublin Core based metadata descriptions, and a common user interface, the EULER Engine, will assist users in searching different sources for relevant topics in a single effort.

The focus of EULER is in many ways similar to that of Renardus, the main difference being that EULER brokers to more diverse types of service within a single subject area. The service is based on a distributed architecture using Z39.50. The services brokered to include library catalogues, subject bibliographies, electronic periodicals, full-text pre-prints and Internet resources. All the brokered resources have been carefully harmonised by exporting and processing their metadata to achieve consistency with the common metadata format based on Dublin Core. Identical Z39.50 gateways have been installed at all sites.

As mentioned above, the service is still in a test phase. Background and a detailed description of the design considerations for the project are given in Berggren and Brümmer (1999). The main project Web page, which contains reports for 1998 and 1999, is located at: http://www.emis.de/projects/EULER/
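Harmonising records from different sources usually relies on a crosswalk that maps each source's fields to the common format. The Python sketch below maps a few MARC-style tags to Dublin Core elements; the `CROSSWALK` table and `to_dublin_core` function are hypothetical, the mapping is simplified (whole fields, no subfields or indicators) and it is not EULER's actual conversion.

```python
from typing import Dict, List

# Simplified, hypothetical crosswalk: whole MARC fields mapped to Dublin Core,
# ignoring subfields and indicators. Not EULER's actual conversion.
CROSSWALK: Dict[str, str] = {
    "245": "title",        # title statement
    "100": "creator",      # main entry, personal name
    "700": "contributor",  # added entry, personal name
    "260": "publisher",    # publication details (simplified)
    "650": "subject",      # topical subject heading
    "856": "identifier",   # electronic location (URL)
}


def to_dublin_core(source: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Map source fields to Dublin Core elements, dropping unmapped or empty fields."""
    dc: Dict[str, List[str]] = {}
    for tag, values in source.items():
        element = CROSSWALK.get(tag)
        cleaned = [v.strip() for v in values if v.strip()]
        if element is None or not cleaned:
            continue
        dc.setdefault(element, []).extend(cleaned)
    return dc


print(to_dublin_core({"245": ["Algebraic topology "], "100": ["Smith, J."],
                      "999": ["local note"]}))
# {'title': ['Algebraic topology'], 'creator': ['Smith, J.']}
```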

Figure 2.9: EULER related to MIA

Access to the service is through standard Web browsers.

The HTTP-Z39.50 gateway for cross-searching can be attributed to this layer; this software also includes functions for eliminating duplicate records, among others.

Not relevant for EULER.

The system is based on the Z39.50 protocol.

Currently, EULER brokers the services of six different providers. All of the databases are distributed, each held at its provider's site.
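The duplicate elimination performed by the HTTP-Z39.50 gateway can be approximated as follows. This is a hypothetical sketch, not the EULER algorithm: result lists from each target are merged, and two records are treated as duplicates when their normalised title and date match. The `normalise` and `merge_results` names and the target labels are illustrative.

```python
import re
import unicodedata
from typing import Dict, Iterable, List, Tuple

Record = Dict[str, List[str]]


def normalise(text: str) -> str:
    # Remove accents, lower-case, and collapse punctuation and whitespace.
    decomposed = unicodedata.normalize("NFKD", text)
    plain = "".join(c for c in decomposed if not unicodedata.combining(c))
    return re.sub(r"[\W_]+", " ", plain.lower()).strip()


def merge_results(result_sets: Iterable[Tuple[str, List[Record]]]) -> List[dict]:
    """Merge per-target results, keeping the first copy of each duplicate
    and recording every target that returned it."""
    seen: Dict[Tuple[str, str], dict] = {}
    merged: List[dict] = []
    for target, records in result_sets:
        for record in records:
            title = normalise(" ".join(record.get("title", [])))
            date = " ".join(record.get("date", [])).strip()
            entry = {"record": record, "sources": [target]}
            if not title:
                merged.append(entry)  # no title: never treated as a duplicate
                continue
            key = (title, date)
            if key in seen:
                seen[key]["sources"].append(target)
            else:
                seen[key] = entry
                merged.append(entry)
    return merged


hits = merge_results([
    ("target1", [{"title": ["Algebraic Topology"], "date": ["1999"]}]),
    ("target2", [{"title": ["Algebraic topology."], "date": ["1999"]},
                 {"title": ["Number Theory"], "date": ["1998"]}]),
])
print([h["sources"] for h in hits])
# [['target1', 'target2'], ['target2']]
```

Matching on title and date is deliberately conservative: records with the same title but different dates (for example, different editions) are kept apart.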